Web Scraping Python Tutorial – How to Scrape Data From A Website

Python is a beautiful language to code in. It has a great package ecosystem, there's much less noise than you'll find in other languages, and it is super easy to use.

Python is used for many things, from data analysis to server programming, and one exciting use case is web scraping.

In this article, we will cover how to use Python for web scraping. We'll also work through a complete hands-on classroom guide as we proceed.

Note: We will be scraping a webpage that I host, so we can safely learn scraping on it. Many companies do not allow scraping on their websites, so this is a good way to learn. Just make sure to check before you scrape.

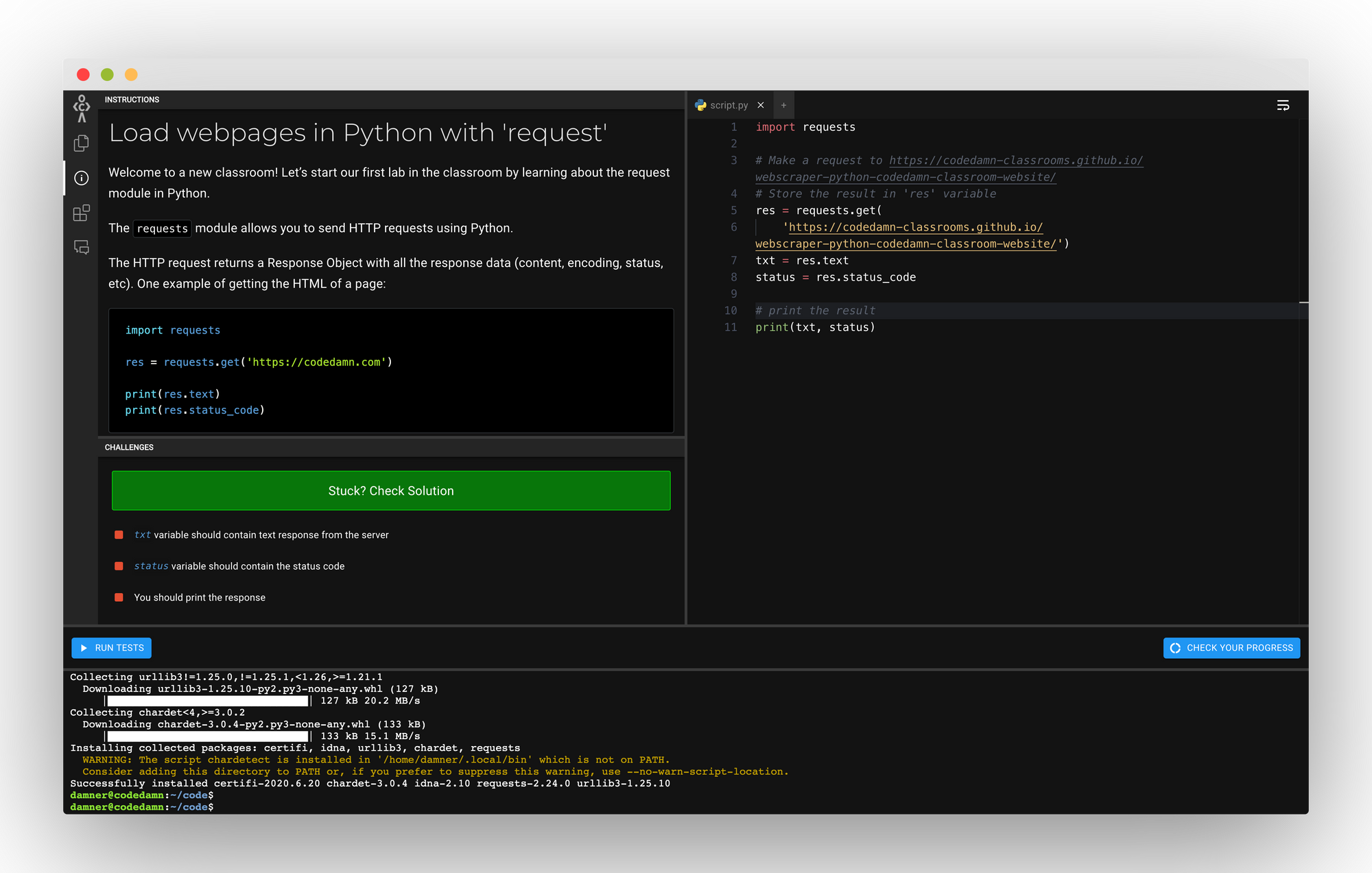

Introduction to Web Scraping classroom

If you want to code along, you can use this free codedamn classroom that consists of multiple labs to help you learn web scraping. This will be a practical hands-on learning exercise on codedamn, similar to how you learn on freeCodeCamp.

In this classroom, you'll be using this page to test web scraping: https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/

This classroom consists of 7 labs, and you'll solve a lab in each part of this blog post. We will be using Python 3.8 + BeautifulSoup 4 for web scraping.

Part 1: Loading Web Pages with 'requests'


The requests module allows you to send HTTP requests using Python.

The HTTP request returns a Response object with all the response data (content, encoding, status code, and so on).
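The following is a minimal sketch of fetching a page with requests and reading the two attributes this lab asks for. The helper name fetch_page and the 10-second timeout are assumptions and are not part of the lab:

```python
from typing import Tuple

import requests

URL = "https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/"


def fetch_page(url: str) -> Tuple[str, int]:
    """Return the decoded HTML text and the HTTP status code for url."""
    # requests.get() sends an HTTP GET request and returns a Response object
    response = requests.get(url, timeout=10)
    # .text is the body decoded to a str; .status_code is an int such as 200
    return response.text, response.status_code
```

With this helper, txt, status = fetch_page(URL) followed by print(txt, status) covers the lab's requirements. Defining the function downloads nothing until you call it.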

Passing requirements:

- Get the contents of the following URL using requests module: https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/

- Store the text response in a variable called txt

- Store the status code in a variable called status

- Print txt and status using the print function

Once you understand how the Response object works, this lab is fairly simple to pass: read .text into txt, read .status_code into status, and print both.

Let's move on to part 2 now where you'll build more on top of your existing code.

Part 2: Extracting title with BeautifulSoup

In this whole classroom, you’ll be using a library called BeautifulSoup in Python to do web scraping. Some features that make BeautifulSoup a powerful solution are:

- It provides a lot of simple methods and Pythonic idioms for navigating, searching, and modifying a DOM tree. It doesn't take much code to write an application.

- Beautiful Soup sits on top of popular Python parsers like lxml and html5lib, allowing you to try out different parsing strategies or trade speed for flexibility.

Basically, BeautifulSoup can parse almost any HTML you give it, even when the markup is messy.

For this lab, the passing requirements are:

- Use the requests package to fetch the page at the URL: https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/

- Use BeautifulSoup to store the title of this page into a variable called page_title

Once you feed page.content into BeautifulSoup, you can start working with the parsed DOM tree in a very Pythonic way.
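A minimal sketch of this lab follows. So that it runs offline, it parses a small hypothetical document (SAMPLE_HTML) instead of the live page. In the lab itself, you would pass requests.get(URL).content to BeautifulSoup:

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for page.content; the lab uses the live classroom page
SAMPLE_HTML = b"""<html>
<head><title>Example Page</title></head>
<body><h1>Hello</h1></body>
</html>"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# soup.title is the <title> element; .text gives just its string content
page_title = soup.title.text
print(page_title)
```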

This was also a simple lab: you only had to change the URL and print the page title.

Part 3: Soup-ed body and head

In the last lab, you saw how you can extract the title from the page. It is equally easy to extract out certain sections too.

You also saw that you have to call .text on these to get the string. You can also print them without calling .text, which gives you the full markup.

Next, let's look at how you can extract the body and head sections from your pages. The passing requirements for this lab are:

- Repeat the experiment with URL: https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/

- Store page title (without calling .text) of URL in page_title

- Store body content (without calling .text) of URL in page_body

- Store head content (without calling .text) of URL in page_head

When you print page_body or page_head, they look like strings. But if you run print(type(page_body)), you'll see that it is not a string but a BeautifulSoup Tag object. It still prints fine because a Tag converts itself to its markup when printed.

The solution is simple: assign soup.title, soup.body, and soup.head to these variables without calling .text.
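The sketch below shows that these values are Tag objects that print as markup. It uses a hypothetical inline document rather than the classroom page:

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

# Hypothetical sample markup standing in for the classroom page
SAMPLE_HTML = b"""<html>
<head><title>Example Page</title></head>
<body><p>First paragraph</p></body>
</html>"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Without .text, each of these is a Tag object holding the full markup
page_title = soup.title
page_body = soup.body
page_head = soup.head

print(page_title)        # <title>Example Page</title>
print(type(page_body))   # <class 'bs4.element.Tag'>
```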

Part 4: select with BeautifulSoup

Now that you have explored some parts of BeautifulSoup, let's look at how you can select DOM elements with BeautifulSoup methods.

Once you have the soup variable (like in the previous labs), you can call .select on it, which lets you use CSS selectors inside BeautifulSoup. That is, you can reach down the DOM tree just like you would select elements with CSS.

.select returns a Python list of all the matching elements, so if you only want the first one, you select it with the [0] index.
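Here is a small sketch of .select with a CSS selector. It uses hypothetical markup, and the card and name classes are made up for illustration:

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup
SAMPLE_HTML = """<div class="card">
  <h2 class="name">First item</h2>
  <h2 class="name">Second item</h2>
</div>"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# .select takes a CSS selector and always returns a list of matching Tags
names = soup.select("div.card h2.name")
print(len(names))        # 2

# Index with [0] to work with only the first match
first_name = names[0].text
print(first_name)        # First item
```

If you only ever need the first match, soup.select_one() returns that element directly, or None when nothing matches.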

- Create a variable all_h1_tags and set it to an empty list.

- Use .select to select all the <h1> tags and store the text of those h1 inside all_h1_tags list.

- Create a variable seventh_p_text and store the text of the 7th p element (index 6) inside.

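To pass this lab, append the text of each element returned by soup.select('h1') to all_h1_tags, and store the text of soup.select('p')[6] in seventh_p_text.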
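One way to meet these requirements is sketched below. It runs against a hypothetical page with two h1 elements and eight p elements, so the indexes are easy to check:

```python
from bs4 import BeautifulSoup

# Hypothetical page with two <h1> tags and eight <p> tags
SAMPLE_HTML = "<h1>Main title</h1><h1>Second title</h1>" + "".join(
    f"<p>Paragraph {n}</p>" for n in range(1, 9)
)

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Collect the text of every <h1> on the page
all_h1_tags = []
for element in soup.select("h1"):
    all_h1_tags.append(element.text)

# The 7th <p> element sits at index 6 because lists are zero-indexed
seventh_p_text = soup.select("p")[6].text

print(all_h1_tags)      # ['Main title', 'Second title']
print(seventh_p_text)   # Paragraph 7
```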

Let's keep going.

Part 5: Top items being scraped right now

Let's go ahead and extract the top items scraped from the URL: https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/

If you open this page in a new tab, you’ll see some top items. In this lab, your task is to scrape their names and store them in a list called top_items. You will also extract the reviews for these items.

To pass this challenge, take care of the following things:

- Use .select to extract the titles. (Hint: one selector for product titles could be a.title)

- Use .select to extract the review count label for those product titles. (Hint: one selector for reviews could be div.ratings) Note: this is a complete label (i.e. 2 reviews) and not just a number.

- Create a new dictionary for each product that holds its title and its review label.

- Use the strip method to remove any extra newlines/whitespace from both values. This is important to pass this lab.

- Append this dictionary to a list called top_items

- Print this list at the end

There are quite a few tasks to be done in this challenge. There is more than one way to solve it, and you can attempt it differently too. One solution works like this:

- First of all, you select all the div.thumbnail elements, which gives you a list of individual products

- Then you iterate over them

- Because you can call select on any element that select returns, you can use select again to get the title.

- Note that because you're running inside a loop for div.thumbnail already, the h4 > a.title selector would only give you one result, inside a list. You select that list's 0th element and extract out the text.

- Finally, you strip any extra whitespace and append it to your list.
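The steps above can be sketched as follows. The markup is a hypothetical, simplified version of the product cards. The dictionary keys title and review are assumptions about the lab's expected format:

```python
from bs4 import BeautifulSoup

# Hypothetical markup shaped like the product cards described above
SAMPLE_HTML = """
<div class="thumbnail">
  <h4><a class="title" href="#">  Product A  </a></h4>
  <div class="ratings"><p>  2 reviews
  </p></div>
</div>
<div class="thumbnail">
  <h4><a class="title" href="#">Product B</a></h4>
  <div class="ratings"><p>7 reviews</p></div>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

top_items = []
# Each div.thumbnail is one product card
for product in soup.select("div.thumbnail"):
    # Inside a single card, each selector matches one element, so take [0]
    title = product.select("h4 > a.title")[0].text.strip()
    review = product.select("div.ratings")[0].text.strip()
    # Assumed key names; adjust them to the format your lab expects
    top_items.append({"title": title, "review": review})

print(top_items)
```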

Straightforward, right?

Part 6: Extracting Links

So far you have seen how you can extract the text, or rather innerText of elements. Let's now see how you can extract attributes by extracting links from the page.

For example, you can extract the image information on the page by selecting every img element and reading its attributes.
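The following sketch collects the src and alt attributes of every img element on a hypothetical page. The src/alt dictionary layout is an illustrative assumption:

```python
from bs4 import BeautifulSoup

# Hypothetical markup with two images, the second without an alt attribute
SAMPLE_HTML = """
<img src="/images/cart.png" alt="Cart">
<img src="/images/logo.png">
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

image_data = []
for image in soup.select("img"):
    # .get() reads an attribute like dict.get(); it returns None if it is missing
    image_data.append({"src": image.get("src"), "alt": image.get("alt")})

print(image_data)
# [{'src': '/images/cart.png', 'alt': 'Cart'}, {'src': '/images/logo.png', 'alt': None}]
```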

In this lab, your task is to extract the href attribute of links with their text as well. Make sure of the following things:

- You have to create a list called all_links

- In this list, store one dict per link containing its href and its text.

- Make sure your text is stripped of any whitespace

- Make sure you check whether a value (such as the href) is None before you call .strip() on it.

- Store all these dicts in all_links

You extract attribute values just like you extract values from a dict, using the get function.

Here, you extract the href attribute just like you did in the image case. The only extra step is checking whether it is None. If it is, you set it to an empty string. Otherwise, you strip the whitespace.
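The sketch below runs the links lab against a hypothetical page that includes a link with padded whitespace and a link with no href at all. The href/text dictionary keys are assumptions about the lab's format:

```python
from bs4 import BeautifulSoup

# Hypothetical markup: a normal link, a padded link, and a link without href
SAMPLE_HTML = """
<a href="/home">Home</a>
<a href=" /about ">
   About us
</a>
<a>No destination</a>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

all_links = []
for link in soup.select("a"):
    text = link.text          # Beautiful Soup returns "" here, never None
    href = link.get("href")   # None when the attribute is absent
    # Fall back to an empty string for None, otherwise strip whitespace
    text = text.strip() if text is not None else ""
    href = href.strip() if href is not None else ""
    # Assumed key names; adjust them to the format your lab expects
    all_links.append({"href": href, "text": text})

print(all_links)
```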

Part 7: Generating CSV from data

Finally, let's understand how you can generate CSV from a set of data. You will create a CSV with the following headings:

- Product Name

- Price

- Description

- Reviews

- Product Image

These products are located in the div.thumbnail elements.

You have to extract data from the website and generate this CSV for the three products.

Passing Requirements:

- Product Name is the whitespace trimmed version of the name of the item (example - Asus AsusPro Adv..)

- Price is the whitespace trimmed but full price label of the product (example - $1101.83)

- The description is the whitespace trimmed version of the product description (example - Asus AsusPro Advanced BU401LA-FA271G Dark Grey, 14", Core i5-4210U, 4GB, 128GB SSD, Win7 Pro)

- Reviews is the whitespace trimmed version of the product's review count label (example - 7 reviews)

- Product image is the URL (src attribute) of the image for a product (example - /webscraper-python-codedamn-classroom-website/cart2.png)

- The name of the CSV file should be products.csv and should be stored in the same directory as your script.py file

The most interesting part of a solution is the for block that loops over every div.thumbnail element. Inside it, you extract all the elements and attributes using what you've learned so far in all the labs.
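The sketch below splits this work into two hypothetical helpers, extract_rows and write_csv, and runs against a single made-up product card. The selectors h4.price and p.description are assumptions about the page's markup, so confirm them in your browser's developer tools:

```python
import csv

from bs4 import BeautifulSoup

# Hypothetical product card; the real page's markup may differ
SAMPLE_HTML = """
<div class="thumbnail">
  <img src="/webscraper-python-codedamn-classroom-website/cart2.png">
  <h4 class="price"> $1101.83 </h4>
  <h4><a class="title"> Asus AsusPro Adv.. </a></h4>
  <p class="description"> Asus AsusPro Advanced laptop </p>
  <div class="ratings"><p> 7 reviews </p></div>
</div>
"""

HEADERS = ["Product Name", "Price", "Description", "Reviews", "Product Image"]


def extract_rows(html):
    """Return one list of cell values per div.thumbnail product card."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for product in soup.select("div.thumbnail"):
        rows.append([
            product.select("h4 > a.title")[0].text.strip(),
            product.select("h4.price")[0].text.strip(),
            product.select("p.description")[0].text.strip(),
            product.select("div.ratings")[0].text.strip(),
            product.select("img")[0].get("src"),  # an attribute, not text
        ])
    return rows


def write_csv(rows, path="products.csv"):
    """Write the header row followed by one row per product."""
    # newline="" stops the csv module from adding blank lines on Windows
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(HEADERS)
        writer.writerows(rows)
```

Against the live page, write_csv(extract_rows(requests.get(URL).content)) would write products.csv to the current working directory.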

When you run this code, you end up with a nice CSV file. And that's about all the basics of web scraping with BeautifulSoup!

I hope this interactive classroom from codedamn helped you understand the basics of web scraping with Python.

If you liked this classroom and this blog, tell me about it on Twitter or Instagram. I'd love to hear your feedback!

Independent developer, security engineering enthusiast, love to build and break stuff with code, and JavaScript <3



Beautiful Soup: Build a Web Scraper With Python

Table of Contents

- Reasons for Web Scraping

- Challenges of Web Scraping

- An Alternative to Web Scraping: APIs

- Scrape the Fake Python Job Site

- Explore the Website

- Decipher the Information in URLs

- Inspect the Site Using Developer Tools

- Static Websites

- Hidden Websites

- Dynamic Websites

- Find Elements by ID

- Find Elements by HTML Class Name

- Extract Text From HTML Elements

- Find Elements by Class Name and Text Content

- Pass a Function to a Beautiful Soup Method

- Identify Error Conditions

- Access Parent Elements

- Extract Attributes From HTML Elements

- Keep Practicing


The incredible amount of data on the Internet is a rich resource for any field of research or personal interest. To effectively harvest that data, you’ll need to become skilled at web scraping. The Python libraries requests and Beautiful Soup are powerful tools for the job. If you like to learn with hands-on examples and have a basic understanding of Python and HTML, then this tutorial is for you.

In this tutorial, you’ll learn how to:

- Decipher data encoded in URLs

- Use requests and Beautiful Soup for scraping and parsing data from the Web

- Step through a web scraping pipeline from start to finish

- Build a script that fetches job offers from the Web and displays relevant information in your console

Working through this project will give you the knowledge of the process and tools you need to scrape any static website out there on the World Wide Web.

Let’s get started!

What Is Web Scraping?

Web scraping is the process of gathering information from the Internet. Even copying and pasting the lyrics of your favorite song is a form of web scraping! However, the words “web scraping” usually refer to a process that involves automation. Some websites don’t like it when automatic scrapers gather their data, while others don’t mind.

If you’re scraping a page respectfully for educational purposes, then you’re unlikely to have any problems. Still, it’s a good idea to do some research on your own and make sure that you’re not violating any Terms of Service before you start a large-scale project.

Say you’re a surfer, both online and in real life, and you’re looking for employment. However, you’re not looking for just any job. With a surfer’s mindset, you’re waiting for the perfect opportunity to roll your way!

There’s a job site that offers precisely the kinds of jobs you want. Unfortunately, a new position only pops up once in a blue moon, and the site doesn’t provide an email notification service. You think about checking up on it every day, but that doesn’t sound like the most fun and productive way to spend your time.

Thankfully, the world offers other ways to apply that surfer’s mindset! Instead of looking at the job site every day, you can use Python to help automate your job search’s repetitive parts. Automated web scraping can be a solution to speed up the data collection process. You write your code once, and it will get the information you want many times and from many pages.

In contrast, when you try to get the information you want manually, you might spend a lot of time clicking, scrolling, and searching, especially if you need large amounts of data from websites that are regularly updated with new content. Manual web scraping can take a lot of time and repetition.

There’s so much information on the Web, and new information is constantly added. You’ll probably be interested in at least some of that data, and much of it is just out there for the taking. Whether you’re actually on the job hunt or you want to download all the lyrics of your favorite artist, automated web scraping can help you accomplish your goals.

The Web has grown organically out of many sources. It combines many different technologies, styles, and personalities, and it continues to grow to this day. In other words, the Web is a hot mess! Because of this, you’ll run into some challenges when scraping the Web:

Variety: Every website is different. While you’ll encounter general structures that repeat themselves, each website is unique and will need personal treatment if you want to extract the relevant information.

Durability: Websites constantly change. Say you’ve built a shiny new web scraper that automatically cherry-picks what you want from your resource of interest. The first time you run your script, it works flawlessly. But when you run the same script only a short while later, you run into a discouraging and lengthy stack of tracebacks!

Unstable scripts are a realistic scenario, as many websites are in active development. Once the site’s structure has changed, your scraper might not be able to navigate the sitemap correctly or find the relevant information. The good news is that many changes to websites are small and incremental, so you’ll likely be able to update your scraper with only minimal adjustments.

However, keep in mind that because the Internet is dynamic, the scrapers you’ll build will probably require constant maintenance. You can set up continuous integration to run scraping tests periodically to ensure that your main script doesn’t break without your knowledge.

Some website providers offer application programming interfaces (APIs) that allow you to access their data in a predefined manner. With APIs, you can avoid parsing HTML. Instead, you can access the data directly using formats like JSON and XML. HTML is primarily a way to present content to users visually.

When you use an API, the process is generally more stable than gathering the data through web scraping. That’s because developers create APIs to be consumed by programs rather than by human eyes.

The front-end presentation of a site might change often, but such a change in the website’s design typically doesn’t affect its API structure. The structure of an API is usually more permanent, which means it’s a more reliable source of the site’s data.

However, APIs can change as well. The challenges of both variety and durability apply to APIs just as they do to websites. Additionally, it’s much harder to inspect the structure of an API by yourself if the provided documentation lacks quality.

The approach and tools you need to gather information using APIs are outside the scope of this tutorial. To learn more about it, check out API Integration in Python.

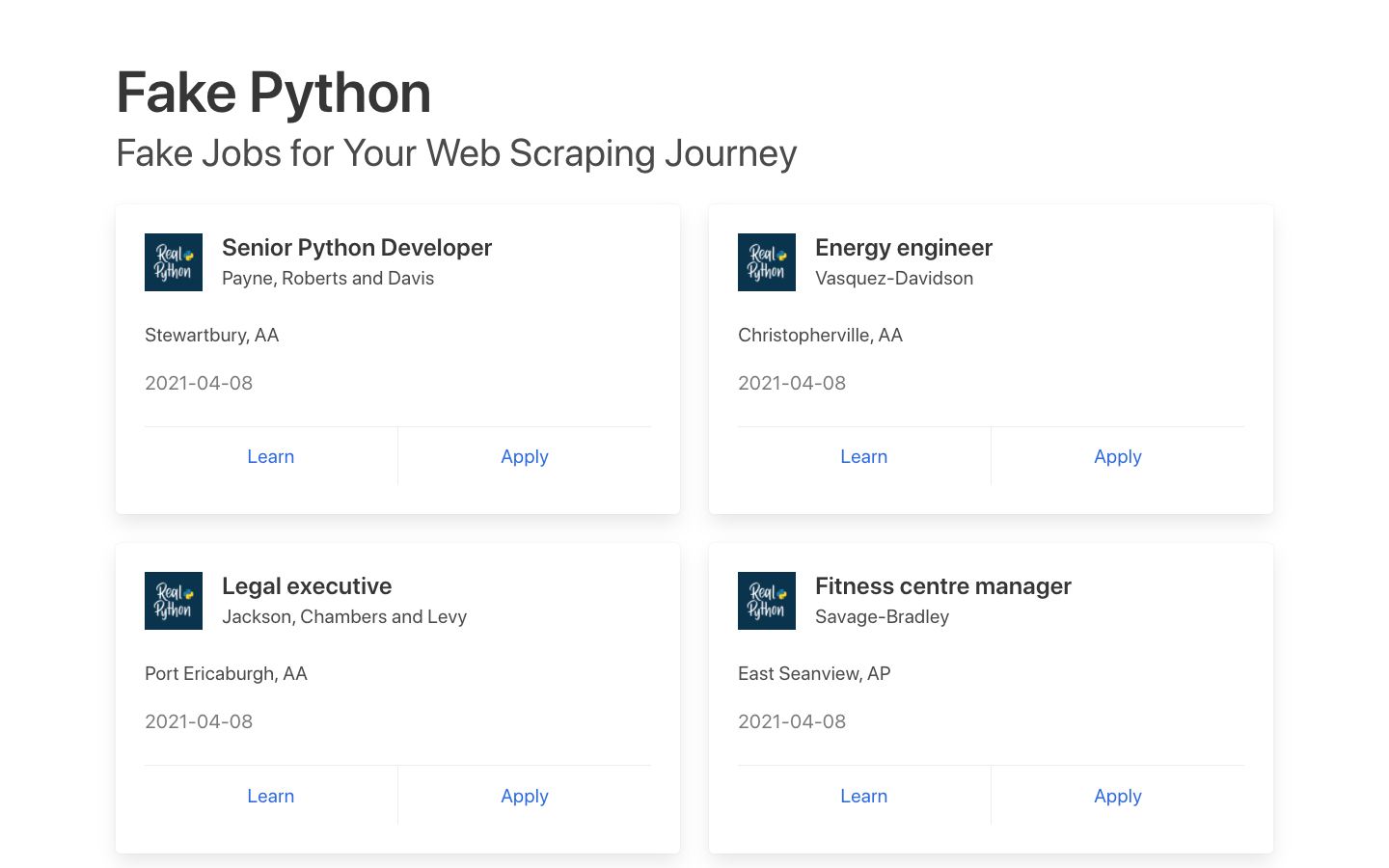

In this tutorial, you’ll build a web scraper that fetches Python software developer job listings from the Fake Python Jobs site. It’s an example site with fake job postings that you can freely scrape to train your skills. Your web scraper will parse the HTML on the site to pick out the relevant information and filter that content for specific words.

Note: A previous version of this tutorial focused on scraping the Monster job board, which has since changed and doesn’t provide static HTML content anymore. The updated version of this tutorial focuses on a self-hosted static site that is guaranteed to stay the same and gives you a reliable playground to practice the skills you need for web scraping.

You can scrape any site on the Internet that you can look at, but the difficulty of doing so depends on the site. This tutorial offers you an introduction to web scraping to help you understand the overall process. Then, you can apply this same process for every website you’ll want to scrape.

Throughout the tutorial, you’ll also encounter a few exercises. You can challenge yourself by completing the tasks described in them.

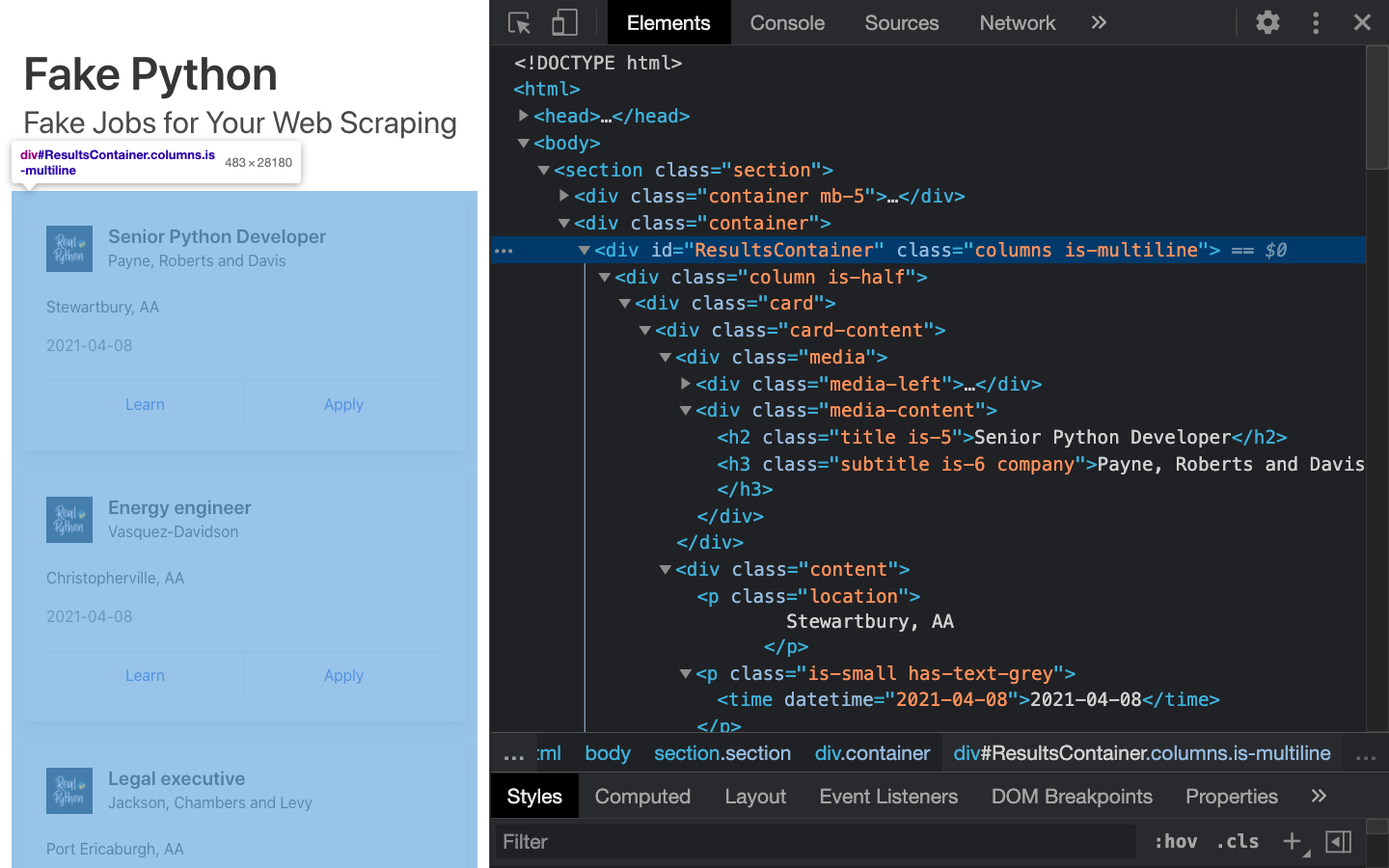

Step 1: Inspect Your Data Source

Before you write any Python code, you need to get to know the website that you want to scrape. That should be your first step for any web scraping project you want to tackle. You’ll need to understand the site structure to extract the information that’s relevant for you. Start by opening the site you want to scrape with your favorite browser.

Click through the site and interact with it just like any typical job searcher would. For example, you can scroll through the main page of the website.

You can see many job postings in a card format, and each of them has two buttons. If you click Apply, then you’ll see a new page that contains more detailed descriptions of the selected job. You might also notice that the URL in your browser’s address bar changes when you interact with the website.

A programmer can encode a lot of information in a URL. Your web scraping journey will be much easier if you first become familiar with how URLs work and what they’re made of. For example, you might find yourself on a details page whose URL ends in a job-specific file name with an .html extension.

You can deconstruct such a URL into two main parts:

- The base URL represents the path to the search functionality of the website. In the example above, the base URL is https://realpython.github.io/fake-jobs/.

- The specific site location that ends with .html is the path to the job description’s unique resource.

Any job posted on this website will use the same base URL. However, the unique resources’ location will be different depending on what specific job posting you’re viewing.
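Python’s standard library can split a URL into these parts for you. In this sketch, the base URL is the one above, and the job page name example-job.html is hypothetical:

```python
from urllib.parse import urljoin, urlsplit

BASE_URL = "https://realpython.github.io/fake-jobs/"

# Hypothetical resource path; real job pages use their own .html file names
job_url = urljoin(BASE_URL, "example-job.html")
print(job_url)       # https://realpython.github.io/fake-jobs/example-job.html

# urlsplit() breaks the URL into scheme, host, path, query, and fragment
parts = urlsplit(job_url)
print(parts.netloc)  # realpython.github.io
print(parts.path)    # /fake-jobs/example-job.html
```

Because every job page shares the base URL, urljoin() lets you build any job page URL from its unique file name.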

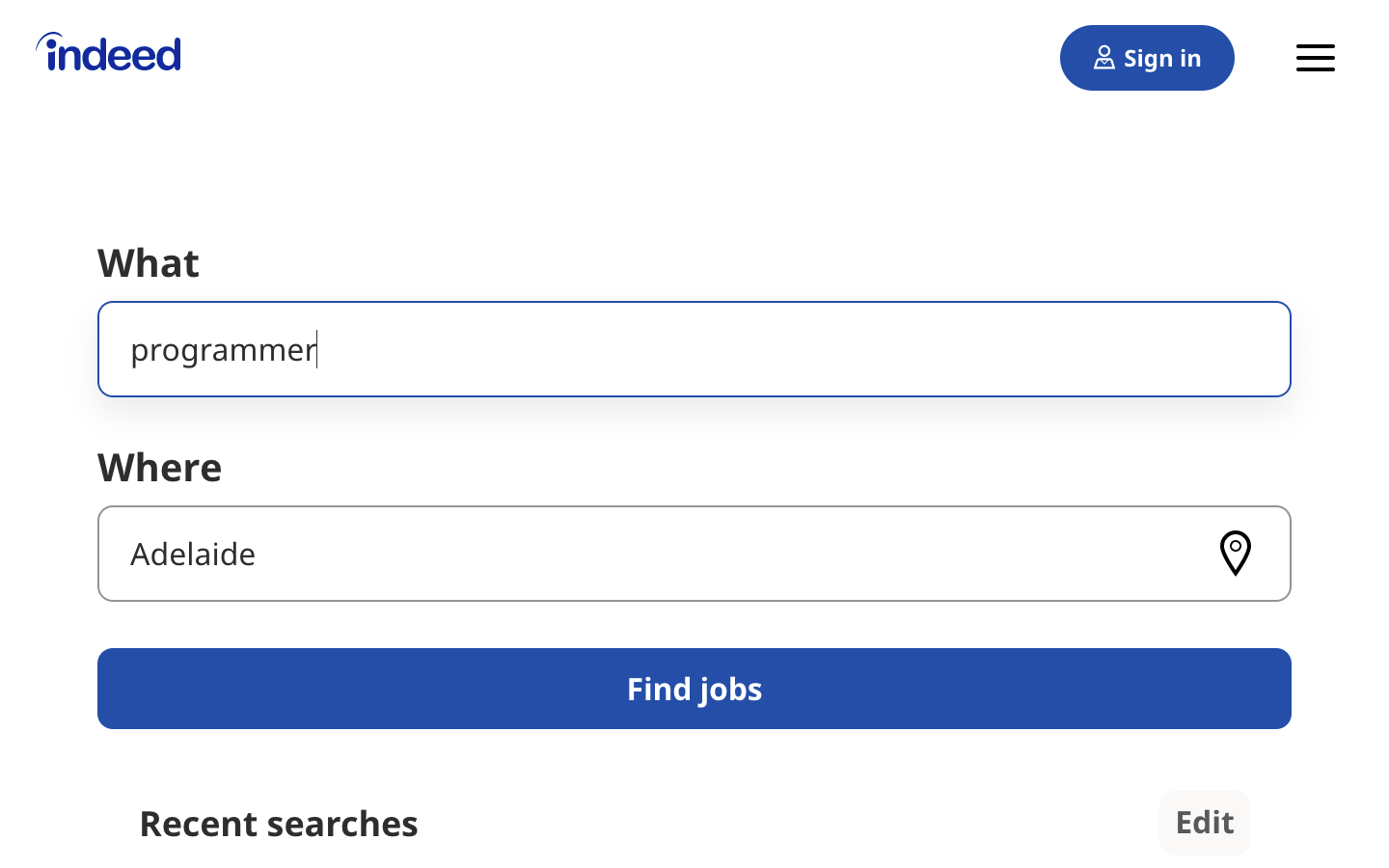

URLs can hold more information than just the location of a file. Some websites use query parameters to encode values that you submit when performing a search. You can think of them as query strings that you send to the database to retrieve specific records.

You’ll find query parameters at the end of a URL. For example, if you go to Indeed and search for “software developer” in “Australia” through their search bar, you’ll see that the URL changes to include these values as query parameters.

The query parameters in this URL are ?q=software+developer&l=Australia. Query parameters consist of three parts:

- Start: The beginning of the query parameters is denoted by a question mark (?).

- Information: The pieces of information constituting one query parameter are encoded in key-value pairs, where related keys and values are joined together by an equals sign (key=value).

- Separator: Every URL can have multiple query parameters, separated by an ampersand symbol (&).

Equipped with this information, you can pick apart the URL’s query parameters into two key-value pairs:

- q=software+developer selects the type of job.

- l=Australia selects the location of the job.
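You can check this breakdown with urllib.parse from the standard library. The sketch below decodes the query string from the search example above and then builds a new one. The search term python developer is only an example:

```python
from urllib.parse import parse_qs, urlencode

# The query string from the search example, without the leading "?"
query = "q=software+developer&l=Australia"

# parse_qs splits on "&" and "=" and decodes "+" back into a space;
# values are lists because a key may appear more than once
params = parse_qs(query)
print(params)     # {'q': ['software developer'], 'l': ['Australia']}

# urlencode goes the other way and encodes spaces as "+"
new_query = urlencode({"q": "python developer", "l": "Australia"})
print(new_query)  # q=python+developer&l=Australia
```

When you request pages from a script, requests.get() can build the query string for you from a dict passed as its params argument.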

Try to change the search parameters and observe how that affects your URL. Go ahead and enter new values in the search bar up top.

Next, try to change the values directly in your URL, and see what happens when you load the edited URL in your browser.

If you change and submit the values in the website’s search box, then it’ll be directly reflected in the URL’s query parameters and vice versa. If you change either of them, then you’ll see different results on the website.

As you can see, exploring the URLs of a site can give you insight into how to retrieve data from the website’s server.

Head back to Fake Python Jobs and continue exploring it. This site is a purely static website that doesn’t operate on top of a database, which is why you won’t have to work with query parameters in this scraping tutorial.

Next, you’ll want to learn more about how the data is structured for display. You’ll need to understand the page structure to pick what you want from the HTML response that you’ll collect in one of the upcoming steps.

Developer tools can help you understand the structure of a website. All modern browsers come with developer tools installed. In this section, you’ll see how to work with the developer tools in Chrome. The process will be very similar in other modern browsers.

In Chrome on macOS, you can open up the developer tools through the menu by selecting View → Developer → Developer Tools. On Windows and Linux, you can access them by clicking the top-right menu button (⋮) and selecting More Tools → Developer Tools. You can also access your developer tools by right-clicking on the page and selecting the Inspect option or using a keyboard shortcut:

- Mac: Cmd + Alt + I

- Windows/Linux: Ctrl + Shift + I

Developer tools allow you to interactively explore the site’s document object model (DOM) to better understand your source. To dig into your page’s DOM, select the Elements tab in developer tools. You’ll see a structure with clickable HTML elements. You can expand, collapse, and even edit elements right in your browser.

You can think of the text displayed in your browser as the HTML structure of that page. If you’re interested, then you can read more about the difference between the DOM and HTML on CSS-TRICKS.

When you right-click elements on the page, you can select Inspect to zoom to their location in the DOM. You can also hover over the HTML text on your right and see the corresponding elements light up on the page.

Here’s an exercise to practice using your developer tools:

Exercise: Explore the HTML

Find a single job posting. What HTML element is it wrapped in, and what other HTML elements does it contain?

Play around and explore! The more you get to know the page you’re working with, the easier it will be to scrape it. However, don’t get too overwhelmed with all that HTML text. You’ll use the power of programming to step through this maze and cherry-pick the information that’s relevant to you.

Step 2: Scrape HTML Content From a Page

Now that you have an idea of what you’re working with, it’s time to start using Python. First, you’ll want to get the site’s HTML code into your Python script so that you can interact with it. For this task, you’ll use Python’s requests library.

Create a virtual environment for your project before you install any external package. Activate your new virtual environment, then install the external requests library by typing this command in your terminal: python -m pip install requests

Then open up a new file in your favorite text editor. All you need to retrieve the HTML is a few lines of code that pass the site’s URL to requests.get().

Calling requests.get() issues an HTTP GET request to the given URL. It retrieves the HTML data that the server sends back and stores that data in a Python object.

If you store that object in a variable called page and print its .text attribute, then you’ll notice that it looks just like the HTML that you inspected earlier with your browser’s developer tools. You successfully fetched the static site content from the Internet! You now have access to the site’s HTML from within your Python script.
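Here is a minimal sketch of this step, wrapped in a function so that it downloads only when called. The timeout and the raise_for_status() check are additions of this sketch, not part of the steps above. They make a script fail fast on a hung connection or an HTTP error page:

```python
import requests

URL = "https://realpython.github.io/fake-jobs/"


def fetch_html(url, timeout=10):
    """Download url and return the raw response body as bytes."""
    response = requests.get(url, timeout=timeout)
    # Raise requests.HTTPError for 4xx/5xx responses instead of parsing an error page
    response.raise_for_status()
    return response.content
```

Calling fetch_html(URL) returns the same bytes that page.content holds, ready to hand to a parser.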

The website that you’re scraping in this tutorial serves static HTML content. In this scenario, the server that hosts the site sends back HTML documents that already contain all the data that you’ll get to see as a user.

When you inspected the page with developer tools earlier on, you discovered that a job posting consists of long and messy-looking HTML.

It can be challenging to wrap your head around a long block of HTML code. To make it easier to read, you can use an HTML formatter to clean it up automatically. Good readability helps you better understand the structure of any code block. While it may or may not help improve the HTML formatting, it’s always worth a try.

Note: Keep in mind that every website will look different. That’s why it’s necessary to inspect and understand the structure of the site you’re currently working with before moving forward.

The HTML you’ll encounter will sometimes be confusing. Luckily, the HTML of this job board has descriptive class names on the elements that you’re interested in:

- class="title is-5" contains the title of the job posting.

- class="subtitle is-6 company" contains the name of the company that offers the position.

- class="location" contains the location where you’d be working.

In case you ever get lost in a large pile of HTML, remember that you can always go back to your browser and use the developer tools to further explore the HTML structure interactively.

By now, you’ve successfully harnessed the power and user-friendly design of Python’s requests library. With only a few lines of code, you managed to scrape static HTML content from the Web and make it available for further processing.

However, there are more challenging situations that you might encounter when you’re scraping websites. Before you learn how to pick the relevant information from the HTML that you just scraped, you’ll take a quick look at two of these more challenging situations.

Some pages contain information that’s hidden behind a login. That means you’ll need an account to be able to scrape anything from the page. The process to make an HTTP request from your Python script is different from how you access a page from your browser. Just because you can log in to the page through your browser doesn’t mean you’ll be able to scrape it with your Python script.

However, the requests library comes with the built-in capacity to handle authentication. With these techniques, you can log in to websites when making the HTTP request from your Python script and then scrape information that’s hidden behind a login. You won’t need to log in to access the job board information, which is why this tutorial won’t cover authentication.
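The sketch below illustrates one scheme, HTTP Basic authentication. It builds a request with hypothetical credentials and prepares it without sending it, so you can see the header that requests would add. Many sites use login forms and cookies instead, and those need a different, site-specific approach:

```python
import requests
from requests.auth import HTTPBasicAuth

# Hypothetical protected URL and credentials, for illustration only
request = requests.Request(
    "GET",
    "https://example.com/protected",
    auth=HTTPBasicAuth("user", "pass"),
)

# Preparing the request needs no network access and applies the auth handler
prepared = request.prepare()
print(prepared.headers["Authorization"])  # Basic dXNlcjpwYXNz
```

To actually send such a request, you’d pass the same auth argument to requests.get(). For form-based logins, a requests.Session keeps cookies between requests.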

In this tutorial, you’ll learn how to scrape a static website. Static sites are straightforward to work with because the server sends you an HTML page that already contains all the page information in the response. You can parse that HTML response and immediately begin to pick out the relevant data.

On the other hand, with a dynamic website, the server might not send back any HTML at all. Instead, you could receive JavaScript code as a response. This code will look completely different from what you saw when you inspected the page with your browser’s developer tools.

Note: In this tutorial, the term dynamic website refers to a website that doesn’t return the same HTML that you see when viewing the page in your browser.

Many modern web applications are designed to provide their functionality in collaboration with the clients’ browsers. Instead of sending HTML pages, these apps send JavaScript code that instructs your browser to create the desired HTML. Web apps deliver dynamic content in this way to offload work from the server to the clients’ machines as well as to avoid page reloads and improve the overall user experience.

What happens in the browser is not the same as what happens in your script. Your browser will diligently execute the JavaScript code it receives from a server and create the DOM and HTML for you locally. However, if you request a dynamic website in your Python script, then you won’t get the HTML page content.

When you use requests, you only receive what the server sends back. In the case of a dynamic website, you’ll end up with some JavaScript code instead of HTML. The only way to go from the JavaScript code you received to the content that you’re interested in is to execute the code, just like your browser does. The requests library can’t do that for you, but there are other solutions that can.

For example, requests-html is a project created by the author of the requests library that allows you to render JavaScript using syntax that’s similar to the syntax in requests. It also includes capabilities for parsing the data by using Beautiful Soup under the hood.

Note: Another popular choice for scraping dynamic content is Selenium. Selenium automates a real web browser, which executes the JavaScript code for you before your script reads the rendered HTML.

You won’t go deeper into scraping dynamically-generated content in this tutorial. For now, it’s enough to remember to look into one of the options mentioned above if you need to scrape a dynamic website.

Step 3: Parse HTML Code With Beautiful Soup

You’ve successfully scraped some HTML from the Internet, but when you look at it, it just seems like a huge mess. There are tons of HTML elements here and there, thousands of attributes scattered around—and wasn’t there some JavaScript mixed in as well? It’s time to parse this lengthy code response with the help of Python to make it more accessible and pick out the data you want.

Beautiful Soup is a Python library for parsing structured data. It allows you to interact with HTML in a similar way to how you interact with a web page using developer tools. The library exposes a couple of intuitive functions you can use to explore the HTML you received. To get started, install Beautiful Soup by typing this command in your terminal: python -m pip install beautifulsoup4

Then, import the library in your Python script and create a Beautiful Soup object with BeautifulSoup(page.content, "html.parser").

This object takes page.content, which is the HTML content you scraped earlier, as its input.

Note: You’ll want to pass page.content instead of page.text to avoid problems with character encoding. The .content attribute holds raw bytes, which can be decoded better than the text representation you printed earlier using the .text attribute.

The second argument, "html.parser", makes sure that you use the appropriate parser for HTML content.
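Here is a small sketch of this step that uses hypothetical UTF-8 bytes in place of page.content. The meta charset declaration in the sample lets Beautiful Soup decode the bytes correctly on its own:

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for page.content: raw UTF-8 bytes, as requests returns them
content = (
    '<html><head><meta charset="utf-8"></head>'
    "<body><p>Café jobs</p></body></html>"
).encode("utf-8")

# Passing bytes lets Beautiful Soup work out the encoding itself;
# "html.parser" selects the parser from Python's standard library
soup = BeautifulSoup(content, "html.parser")
print(soup.p.text)  # Café jobs
```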

In an HTML web page, every element can have an id attribute assigned. As the name already suggests, that id attribute makes the element uniquely identifiable on the page. You can begin to parse your page by selecting a specific element by its ID.

Switch back to developer tools and identify the HTML object that contains all the job postings. Explore by hovering over parts of the page and using right-click to Inspect.

Note: It helps to periodically switch back to your browser and interactively explore the page using developer tools. This helps you learn how to find the exact elements you’re looking for.

The element you’re looking for is a <div> with an id attribute that has the value "ResultsContainer". It has some other attributes as well, but below is the gist of what you’re looking for:

Beautiful Soup allows you to find that specific HTML element by its ID:

For easier viewing, you can prettify any Beautiful Soup object when you print it out. If you call .prettify() on the results variable that you just assigned above, then you’ll see all the HTML contained within the <div>:
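Here is a small, self-contained sketch of both calls. The HTML is a made-up stand-in that only mimics the ResultsContainer structure described above, so you can run it offline:

```python
from bs4 import BeautifulSoup

# Made-up markup: one element outside the container, two job cards inside it.
html = """
<body>
  <nav>Site menu</nav>
  <div id="ResultsContainer" class="columns">
    <div class="card-content"><h2 class="title">Senior Python Developer</h2></div>
    <div class="card-content"><h2 class="title">Energy Engineer</h2></div>
  </div>
</body>
"""

soup = BeautifulSoup(html, "html.parser")

# find() returns the first element whose id matches, or None if nothing matches.
results = soup.find(id="ResultsContainer")

# prettify() returns the element's HTML as an indented string, one tag per line.
print(results.prettify())
```

The <nav> element is not part of the output, because results contains only the ResultsContainer <div> and its descendants.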

When you use the element’s ID, you can pick out one element from among the rest of the HTML. Now you can work with only this specific part of the page’s HTML. It looks like the soup just got a little thinner! However, it’s still quite dense.

You’ve seen that every job posting is wrapped in a <div> element with the class card-content. Now you can work with your new object called results and select only the job postings in it. These are, after all, the parts of the HTML that you’re interested in! You can do this in one line of code:

Here, you call .find_all() on a Beautiful Soup object, which returns an iterable containing all the HTML for all the job listings displayed on that page.
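The following minimal offline sketch uses a simplified, made-up results container with three cards. In it, .find_all() with class_ collects every card:

```python
from bs4 import BeautifulSoup

# Made-up, simplified results container with three job cards.
html = """
<div id="ResultsContainer">
  <div class="card-content"><h2 class="title">Senior Python Developer</h2></div>
  <div class="card-content"><h2 class="title">Energy Engineer</h2></div>
  <div class="card-content"><h2 class="title">Python Programmer (Entry-Level)</h2></div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
results = soup.find(id="ResultsContainer")

# class_ has a trailing underscore because class is a reserved word in Python.
job_elements = results.find_all("div", class_="card-content")

print(len(job_elements))  # 3
for job_element in job_elements:
    print(job_element, end="\n\n")
```

The returned object behaves like a list, so you can take its length, index into it, or loop over it.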

Take a look at all of them:

That’s already pretty neat, but there’s still a lot of HTML! You saw earlier that your page has descriptive class names on some elements. You can pick out those child elements from each job posting with .find() :

Each job_element is a Beautiful Soup Tag object, just like results. Therefore, you can use the same methods on it as you did on its parent element, results.
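The sketch below calls .find() on each card. The class names title, company, and location are assumptions made for this example and are not taken from the real page. Substitute whatever names you find in developer tools:

```python
from bs4 import BeautifulSoup

# Made-up cards; the class names title, company, and location are assumptions.
html = """
<div id="ResultsContainer">
  <div class="card-content">
    <h2 class="title">Senior Python Developer</h2>
    <h3 class="company">Example Corp</h3>
    <p class="location">Springfield, AA</p>
  </div>
  <div class="card-content">
    <h2 class="title">Energy Engineer</h2>
    <h3 class="company">Another Inc</h3>
    <p class="location">Shelbyville, BB</p>
  </div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
results = soup.find(id="ResultsContainer")

for job_element in results.find_all("div", class_="card-content"):
    # job_element is a Tag, so it supports the same find() method as results.
    title_element = job_element.find("h2", class_="title")
    company_element = job_element.find("h3", class_="company")
    location_element = job_element.find("p", class_="location")
    print(title_element)
    print(company_element)
    print(location_element)
    print()
```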

With this code snippet, you’re getting closer and closer to the data that you’re actually interested in. Still, there’s a lot going on with all those HTML tags and attributes floating around:

Next, you’ll learn how to narrow down this output to access only the text content you’re interested in.

You only want to see the title, company, and location of each job posting. And behold! Beautiful Soup has got you covered. You can add .text to a Beautiful Soup object to return only the text content of the HTML elements that the object contains:

Run the above code snippet, and you’ll see the text of each element displayed. However, it’s possible that you’ll also get some extra whitespace. Since you’re now working with Python strings, you can .strip() the superfluous whitespace. You can also apply any other familiar Python string methods to further clean up your text:
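Here is a minimal sketch of the cleanup step. It uses made-up markup in which one location deliberately contains stray whitespace:

```python
from bs4 import BeautifulSoup

# Made-up markup; the first location carries extra newlines and spaces on purpose.
html = """
<div id="ResultsContainer">
  <div class="card-content">
    <h2 class="title">Senior Python Developer</h2>
    <h3 class="company">Example Corp</h3>
    <p class="location">
        Springfield, AA
    </p>
  </div>
  <div class="card-content">
    <h2 class="title">Energy Engineer</h2>
    <h3 class="company">Another Inc</h3>
    <p class="location">Shelbyville, BB</p>
  </div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
results = soup.find(id="ResultsContainer")

rows = []
for job_element in results.find_all("div", class_="card-content"):
    title_element = job_element.find("h2", class_="title")
    company_element = job_element.find("h3", class_="company")
    location_element = job_element.find("p", class_="location")
    # .text drops the tags; .strip() removes leading and trailing whitespace.
    rows.append(
        (
            title_element.text.strip(),
            company_element.text.strip(),
            location_element.text.strip(),
        )
    )

for title, company, location in rows:
    print(title)
    print(company)
    print(location)
    print()
```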

The results finally look much better:

That’s a readable list of jobs that also includes the company name and each job’s location. However, you’re looking for a position as a software developer, and these results contain job postings in many other fields as well.

Not all of the job listings are developer jobs. Instead of printing out all the jobs listed on the website, you’ll first filter them using keywords.

You know that job titles in the page are kept within <h2> elements. To filter for only specific jobs, you can use the string argument:

This code finds all <h2> elements where the contained string matches "Python" exactly. Note that you’re directly calling the method on your first results variable. If you go ahead and print() the output of the above code snippet to your console, then you might be disappointed because it’ll be empty:

There was a Python job in the search results, so why is it not showing up?

When you use string= as you did above, your program looks for that string exactly. Any differences in the spelling, capitalization, or whitespace will prevent the element from matching. Next, you’ll find a way to make your search string more general.

In addition to strings, you can sometimes pass functions as arguments to Beautiful Soup methods. You can change the previous line of code to use a function instead:

Now you’re passing an anonymous function to the string= argument. The lambda function looks at the text of each <h2> element, converts it to lowercase, and checks whether the substring "python" is found anywhere. You can check whether you managed to identify all the Python jobs with this approach:
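To compare the two filters side by side, here is an offline sketch with three made-up job titles. Only the function filter finds titles that merely contain the word Python:

```python
from bs4 import BeautifulSoup

# Made-up titles; none of them is exactly the string "Python".
html = """
<div id="ResultsContainer">
  <h2 class="title">Senior Python Developer</h2>
  <h2 class="title">Energy Engineer</h2>
  <h2 class="title">Python Programmer (Entry-Level)</h2>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
results = soup.find(id="ResultsContainer")

# Exact match: an <h2> would have to contain exactly "Python", so nothing matches.
exact_matches = results.find_all("h2", string="Python")
print(exact_matches)  # []

# Function filter: called with each <h2>'s text; keeps it if "python" appears anywhere.
python_jobs = results.find_all(
    "h2", string=lambda text: "python" in text.lower()
)
print(len(python_jobs))  # 2
```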

Your program has found 10 matching job posts that include the word "python" in their job title!

Finding elements depending on their text content is a powerful way to filter your HTML response for specific information. Beautiful Soup allows you to use either exact strings or functions as arguments for filtering text in Beautiful Soup objects.

This seems like a good moment to run your for loop and print the title, location, and company information of the Python jobs you identified:

However, when you try to run your scraper to print out the information of the filtered Python jobs, you’ll run into an error:

This message is a common error that you’ll run into a lot when you’re scraping information from the Internet. Inspect the HTML of an element in your python_jobs list. What does it look like? Where do you think the error is coming from?

When you look at a single element in python_jobs, you’ll see that it consists of only the <h2> element that contains the job title:

When you revisit the code you used to select the items, you’ll see that that’s what you targeted. You filtered for only the <h2> title elements of the job postings that contain the word "python". As you can see, these elements don’t include the rest of the information about the job.

The error message you received earlier was related to this:

You tried to find the job title, the company name, and the job’s location in each element in python_jobs, but each element contains only the job title text.

Your diligent parsing library still looks for the other ones, too, and returns None because it can’t find them. Then, print() fails with the shown error message when you try to extract the .text attribute from one of these None objects.
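The following sketch reproduces the error on a single made-up card. Because .find() only searches inside the element you call it on, looking for the company inside an <h2> returns None. Reading .text from None then raises AttributeError:

```python
from bs4 import BeautifulSoup

# Made-up card: the <h3> company element is a sibling of the <h2>, not its child.
html = """
<div class="card-content">
  <div class="media">
    <div class="media-content">
      <h2 class="title">Senior Python Developer</h2>
      <h3 class="company">Example Corp</h3>
    </div>
  </div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
python_jobs = soup.find_all("h2", string=lambda text: "python" in text.lower())

error_message = None
for h2_element in python_jobs:
    # find() only looks at descendants of <h2>, so this returns None.
    company_element = h2_element.find("h3", class_="company")
    print(company_element)  # None
    try:
        print(company_element.text)
    except AttributeError as error:
        error_message = str(error)
        print("AttributeError:", error_message)
```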

The text you’re looking for is nested in sibling elements of the <h2> elements your filter returned. Beautiful Soup can help you to select sibling, child, and parent elements of each Beautiful Soup object.

One way to get access to all the information you need is to step up in the hierarchy of the DOM starting from the <h2> elements that you identified. Take another look at the HTML of a single job posting. Find the <h2> element that contains the job title as well as its closest parent element that contains all the information that you’re interested in:

The <div> element with the card-content class contains all the information you want. It’s a third-level parent of the <h2> title element that you found using your filter.

With this information in mind, you can now use the elements in python_jobs and fetch their great-grandparent elements instead to get access to all the information you want:

You added a list comprehension that operates on each of the <h2> title elements in python_jobs that you got by filtering with the lambda expression. You’re selecting the parent element of the parent element of the parent element of each <h2> title element. That’s three generations up!

When you were looking at the HTML of a single job posting, you identified that this specific parent element with the class name card-content contains all the information you need.

Now you can adapt the code in your for loop to iterate over the parent elements instead:

When you run your script another time, you’ll see that your code once again has access to all the relevant information. That’s because you’re now looping over the <div class="card-content"> elements instead of just the <h2> title elements.
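Putting the filter and the parent navigation together gives the minimal sketch below. The markup is made up but keeps the nesting described above (div.card-content > div.media > div.media-content > h2), so three .parent hops land on the card:

```python
from bs4 import BeautifulSoup

# Made-up cards that mirror the nesting depth described in the text.
html = """
<div id="ResultsContainer">
  <div class="card-content">
    <div class="media">
      <div class="media-content">
        <h2 class="title">Senior Python Developer</h2>
        <h3 class="company">Example Corp</h3>
      </div>
    </div>
    <div class="content"><p class="location">Springfield, AA</p></div>
  </div>
  <div class="card-content">
    <div class="media">
      <div class="media-content">
        <h2 class="title">Energy Engineer</h2>
        <h3 class="company">Another Inc</h3>
      </div>
    </div>
    <div class="content"><p class="location">Shelbyville, BB</p></div>
  </div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
results = soup.find(id="ResultsContainer")
python_jobs = results.find_all("h2", string=lambda text: "python" in text.lower())

# h2 -> div.media-content -> div.media -> div.card-content (three generations up).
python_job_elements = [h2_element.parent.parent.parent for h2_element in python_jobs]

for job_element in python_job_elements:
    title = job_element.find("h2", class_="title").text.strip()
    company = job_element.find("h3", class_="company").text.strip()
    location = job_element.find("p", class_="location").text.strip()
    print(title, company, location, sep=" | ")
```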

Using the .parent attribute that each Beautiful Soup object comes with gives you an intuitive way of stepping through your DOM structure and addressing the elements you need. You can also access child elements and sibling elements in a similar manner. Read up on navigating the tree for more information.
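Instead of counting .parent hops, you can also use Beautiful Soup's search methods that walk the tree in a given direction. This sketch, again on made-up markup, uses three of them:

- .find_parent() climbs to the nearest matching ancestor.
- .find_next_sibling() moves sideways to the next matching sibling.
- recursive=False limits a search to direct children.

```python
from bs4 import BeautifulSoup

# Made-up card with the same nesting as before.
html = """
<div class="card-content">
  <div class="media">
    <div class="media-content">
      <h2 class="title">Senior Python Developer</h2>
      <h3 class="company">Example Corp</h3>
    </div>
  </div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
h2_element = soup.find("h2", class_="title")

# Up: the nearest ancestor <div> with class card-content, however many levels away.
card = h2_element.find_parent("div", class_="card-content")

# Sideways: the next <h3> sibling, skipping the whitespace text between the tags.
company_element = h2_element.find_next_sibling("h3")

# Down: recursive=False restricts the search to the card's direct children.
direct_children = card.find_all(recursive=False)

print(card["class"])                                  # ['card-content']
print(company_element.text)                           # Example Corp
print([child["class"] for child in direct_children])  # [['media']]
```

A fixed .parent.parent.parent chain breaks if the page adds or removes a wrapper element. .find_parent() keeps working in that case.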

At this point, your Python script already scrapes the site and filters its HTML for relevant job postings. Well done! However, what’s still missing is the link to apply for a job.

While you were inspecting the page, you found two links at the bottom of each card. If you handle the link elements in the same way as you handled the other elements, you won’t get the URLs that you’re interested in:

If you run this code snippet, then you’ll get the link texts Learn and Apply instead of the associated URLs.

That’s because the .text attribute leaves only the visible content of an HTML element. It strips away all HTML tags, including the HTML attributes containing the URL, and leaves you with just the link text. To get the URL, you need to extract the value of one of the HTML attributes rather than discard it.

The URL of a link element is associated with the href attribute. The specific URL that you’re looking for is the value of the href attribute of the second <a> tag at the bottom of the HTML of a single job posting:

Start by fetching all the <a> elements in a job card. Then, extract the value of their href attributes using square-bracket notation:

In this code snippet, you first fetched all links from each of the filtered job postings. Then you extracted the href attribute, which contains the URL, using ["href"] and printed it to your console.
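Here is an offline sketch of the difference between a link's text and its href attribute. It uses a made-up card with the same two footer links:

```python
from bs4 import BeautifulSoup

# Made-up job card with two footer links.
html = """
<div class="card-content">
  <h2 class="title">Senior Python Developer</h2>
  <footer class="card-footer">
    <a href="https://example.com/learn">Learn</a>
    <a href="https://example.com/jobs/senior-python-developer">Apply</a>
  </footer>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
job_element = soup.find("div", class_="card-content")

links = job_element.find_all("a")
for link in links:
    print(link.text)     # the visible link text: Learn, then Apply
    print(link["href"])  # the value of the href attribute: the URL

link_urls = [link["href"] for link in links]

# .get() returns None for a missing attribute, where ["href"] would raise KeyError.
missing_href = job_element.find("h2").get("href")
```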

In the exercise block below, you can find instructions for a challenge to refine the link results that you’ve received:

Exercise: Refine Your Results

Each job card has two links associated with it. You’re looking for only the second link. How can you edit the code snippet shown above so that you always collect only the URL of the second link?

Solution: Refine Your Results

To fetch the URL of just the second link for each job card, you can use the following code snippet:

You’re picking the second link element from the results of .find_all() through its index ([1]). Then you’re directly extracting the URL using the square-bracket notation and addressing the href attribute (["href"]).
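Here is a minimal sketch of that solution, run against two made-up cards:

```python
from bs4 import BeautifulSoup

# Made-up cards; each has a "Learn" link first and an "Apply" link second.
html = """
<div class="card-content">
  <h2 class="title">Senior Python Developer</h2>
  <footer class="card-footer">
    <a href="https://example.com/learn">Learn</a>
    <a href="https://example.com/jobs/senior-python-developer">Apply</a>
  </footer>
</div>
<div class="card-content">
  <h2 class="title">Python Programmer (Entry-Level)</h2>
  <footer class="card-footer">
    <a href="https://example.com/learn">Learn</a>
    <a href="https://example.com/jobs/python-programmer">Apply</a>
  </footer>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

apply_urls = []
for job_element in soup.find_all("div", class_="card-content"):
    links = job_element.find_all("a")
    # [1] picks the second <a> in the card; ["href"] reads its URL.
    apply_url = links[1]["href"]
    print(apply_url)
    apply_urls.append(apply_url)
```

Note that links[1] raises IndexError if a card ever has fewer than two links, so a real scraper might check len(links) first.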

You can use the same square-bracket notation to extract other HTML attributes as well.

If you’ve written the code alongside this tutorial, then you can run your script as is, and you’ll see the fake job information pop up in your terminal. Your next step is to tackle a real-life job board! To keep practicing your new skills, revisit the web scraping process using any or all of the following sites:

- Remote(dot)co

The linked websites return their search results as static HTML responses, similar to the Fake Python job board. Therefore, you can scrape them using only requests and Beautiful Soup.

Start going through this tutorial again from the top using one of these other sites. You’ll see that each website’s structure is different and that you’ll need to rebuild the code in a slightly different way to fetch the data you want. Tackling this challenge is a great way to practice the concepts that you just learned. While it might make you sweat every so often, your coding skills will be stronger for it!

During your second attempt, you can also explore additional features of Beautiful Soup. Use the documentation as your guidebook and inspiration. Extra practice will help you become more proficient at web scraping using Python, requests, and Beautiful Soup.

To wrap up your journey into web scraping, you could then give your code a final makeover and create a command-line interface (CLI) app that scrapes one of the job boards and filters the results by a keyword that you can input on each execution. Your CLI tool could allow you to search for specific types of jobs or jobs in particular locations.

If you’re interested in learning how to adapt your script as a command-line interface, then check out How to Build Command-Line Interfaces in Python With argparse.
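As a starting point, here is a hypothetical sketch of such a CLI built with the standard library's argparse module. The job rows, the --location option, and the script name job_cli.py are all made up. In a real tool, the rows would come from your scraping code:

```python
import argparse

# Made-up rows standing in for the title/company/location values your scraper collects.
SCRAPED_JOBS = [
    {"title": "Senior Python Developer", "company": "Example Corp", "location": "Springfield, AA"},
    {"title": "Energy Engineer", "company": "Another Inc", "location": "Shelbyville, BB"},
    {"title": "Python Programmer (Entry-Level)", "company": "Third LLC", "location": "Shelbyville, BB"},
]


def build_parser():
    parser = argparse.ArgumentParser(description="Filter scraped jobs by keyword.")
    parser.add_argument("keyword", help="case-insensitive text to look for in job titles")
    parser.add_argument("--location", help="optional case-insensitive text to match in the location")
    return parser


def filter_jobs(jobs, keyword, location=None):
    """Keep jobs whose title contains keyword and, if given, whose location contains location."""
    keyword = keyword.lower()
    return [
        job
        for job in jobs
        if keyword in job["title"].lower()
        and (location is None or location.lower() in job["location"].lower())
    ]


def main(argv=None):
    # argv=None makes argparse read sys.argv; passing a list is handy for trying it out.
    args = build_parser().parse_args(argv)
    matches = filter_jobs(SCRAPED_JOBS, args.keyword, args.location)
    for job in matches:
        print(job["title"], job["company"], job["location"], sep=" | ")
    return matches


# Equivalent to running: python job_cli.py python --location shelbyville
matches = main(["python", "--location", "shelbyville"])
```

In a standalone script, you would instead call main() with no arguments under an if __name__ == "__main__": guard, so that the keyword comes from the command line.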

The requests library gives you a user-friendly way to fetch static HTML from the Internet using Python. You can then parse the HTML with another package called Beautiful Soup. Both packages are trusted and helpful companions for your web scraping adventures. You’ll find that Beautiful Soup will cater to most of your parsing needs, including navigation and advanced searching.

In this tutorial, you learned how to scrape data from the Web using Python, requests, and Beautiful Soup. You built a script that fetches job postings from the Internet and went through the complete web scraping process from start to finish.

You learned how to:

- Inspect the HTML structure of your target site with your browser’s developer tools

- Decipher the data encoded in URLs

- Download the page’s HTML content using Python’s requests library

- Parse the downloaded HTML with Beautiful Soup to extract relevant information

With this broad pipeline in mind and two powerful libraries in your tool kit, you can go out and see what other websites you can scrape. Have fun, and always remember to be respectful and use your programming skills responsibly.

About Martin Breuss

Martin likes automation, goofy jokes, and snakes, all of which fit into the Python community. He enjoys learning and exploring and is up for talking about it, too. He writes and records content for Real Python and CodingNomads.

What Is Web Scraping? A Complete Beginner’s Guide

As the digital economy expands, the role of web scraping becomes ever more important. Read on to learn what web scraping is, how it works, and why it’s so important for data analytics.

The amount of data in our lives is growing exponentially. With this surge, data analytics has become a hugely important part of the way organizations are run. And while data has many sources, its biggest repository is on the web. As the fields of big data analytics, artificial intelligence, and machine learning grow, companies need data analysts who can scrape the web in increasingly sophisticated ways.

This beginner’s guide offers a complete introduction to web scraping: what it is, how it’s used, and what the process involves. We’ll cover:

- What is web scraping?

- What is web scraping used for?

- How does a web scraper function?

- How to scrape the web (step-by-step)

- What tools can you use to scrape the web?

- What else do you need to know about web scraping?

Before we get into the details, though, let’s start with the simple stuff…

1. What is web scraping?

Web scraping (or data scraping) is a technique used to collect content and data from the internet. This data is usually saved in a local file so that it can be manipulated and analyzed as needed. If you’ve ever copied and pasted content from a website into an Excel spreadsheet, this is essentially what web scraping is, but on a very small scale.

However, when people refer to ‘web scrapers,’ they’re usually talking about software applications. Web scraping applications (or ‘bots’) are programmed to visit websites, grab the relevant pages and extract useful information. By automating this process, these bots can extract huge amounts of data in a very short time. This has obvious benefits in the digital age, when big data—which is constantly updating and changing—plays such a prominent role. You can learn more about the nature of big data in this post.

What kinds of data can you scrape from the web?

If there’s data on a website, then in theory, it’s scrapable! Common data types organizations collect include images, videos, text, product information, customer sentiments and reviews (on sites like Twitter, Yell, or Tripadvisor), and pricing from comparison websites. There are some legal rules about what types of information you can scrape, but we’ll cover these later on.

2. What is web scraping used for?

Web scraping has countless applications, especially within the field of data analytics. Market research companies use scrapers to pull data from social media or online forums for things like customer sentiment analysis. Others scrape data from product sites like Amazon or eBay to support competitor analysis.

Meanwhile, Google regularly uses web scraping to analyze, rank, and index web content. Web scraping also allows Google to extract information from third-party websites and surface it on its own services (for instance, it scrapes e-commerce sites to populate Google Shopping).

Many companies also carry out contact scraping, which is when they scrape the web for contact information to be used for marketing purposes. If you’ve ever granted a company access to your contacts in exchange for using their services, then you’ve given them permission to do just this.

There are few restrictions on how web scraping can be used. It’s essentially down to how creative you are and what your end goal is. From real estate listings, to weather data, to carrying out SEO audits, the list is pretty much endless!

However, it should be noted that web scraping also has a dark underbelly. Bad players often scrape data like bank details or other personal information to conduct fraud, scams, intellectual property theft, and extortion. It’s good to be aware of these dangers before starting your own web scraping journey. Make sure you keep abreast of the legal rules around web scraping. We’ll cover these a bit more in section six.

3. How does a web scraper function?

So, we now know what web scraping is, and why different organizations use it. But how does a web scraper work? While the exact method differs depending on the software or tools you’re using, all web scraping bots follow three basic principles:

- Step 1: Making an HTTP request to a server

- Step 2: Extracting and parsing (or breaking down) the website’s code

- Step 3: Saving the relevant data locally

Now let’s take a look at each of these in a little more detail.

Step 1: Making an HTTP request to a server

As an individual, when you visit a website via your browser, you send what’s called an HTTP request. This is basically the digital equivalent of knocking on the door, asking to come in. Once your request is approved, you can then access that site and all the information on it. Just like a person, a web scraper needs permission to access a site. Therefore, the first thing a web scraper does is send an HTTP request to the site it’s targeting.

Step 2: Extracting and parsing the website’s code

Once a website gives a scraper access, the bot can read and extract the site’s HTML or XML code. This code determines the website’s content structure. The scraper will then parse the code (which basically means breaking it down into its constituent parts) so that it can identify and extract elements or objects that have been predefined by whoever set the bot loose! These might include specific text, ratings, classes, tags, IDs, or other information.

Step 3: Saving the relevant data locally

Once the HTML or XML has been accessed, scraped, and parsed, the web scraper will then store the relevant data locally. As mentioned, the data extracted is predefined by you (having told the bot what you want it to collect). Data is usually stored in a structured format that spreadsheet software such as Excel can open, for example a .csv or .xls file.
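To make the three steps concrete, here is a minimal sketch that uses only Python's standard library:

- urllib.request sends the HTTP request.
- html.parser parses the HTML.
- csv saves the results.

Treating <h2> headings as "the data you want" is an assumption for illustration, and so is the sample HTML. Dedicated libraries make step 2 far less verbose.

```python
import csv
import io
import urllib.request
from html.parser import HTMLParser


def fetch_html(url):
    """Step 1: send an HTTP GET request and return the response body as text."""
    with urllib.request.urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class HeadingParser(HTMLParser):
    """Step 2: collect the text of every <h2> element while the HTML is parsed."""

    def __init__(self):
        super().__init__()
        self.inside_h2 = False
        self.headings = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self.inside_h2 = True
            self.headings.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self.inside_h2 = False

    def handle_data(self, data):
        if self.inside_h2:
            self.headings[-1] += data


def extract_headings(html):
    parser = HeadingParser()
    parser.feed(html)
    parser.close()
    return [heading.strip() for heading in parser.headings]


def save_as_csv(headings, stream):
    """Step 3: write one CSV row per heading to a text stream, such as an open file."""
    writer = csv.writer(stream)
    writer.writerow(["heading"])
    for heading in headings:
        writer.writerow([heading])


# Offline demo: skip step 1, parse an inline HTML string, and "save" into memory.
sample_html = "<body><h2>First review</h2><p>Great!</p><h2> Second review </h2></body>"
headings = extract_headings(sample_html)
buffer = io.StringIO()
save_as_csv(headings, buffer)
print(buffer.getvalue())
```

To write to disk instead, open a file with newline="" (as the csv module recommends) and pass that file object as stream.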

With these steps complete, you’re ready to start using the data for your intended purposes. Easy, eh? And it’s true…these three steps do make data scraping seem easy. In reality, though, the process isn’t carried out just once, but countless times. This comes with its own swathe of problems that need solving. For instance, badly coded scrapers may send too many HTTP requests, which can crash a site. Every website also has different rules for what bots can and can’t do. Executing web scraping code is just one part of a more involved process. Let’s look at that now.

4. How to scrape the web (step-by-step)

OK, so we understand what a web scraping bot does. But there’s more to it than simply executing code and hoping for the best! In this section, we’ll cover all the steps you need to follow. The exact method for carrying out these steps depends on the tools you’re using, so we’ll focus on the (non-technical) basics.

Step one: Find the URLs you want to scrape

It might sound obvious, but the first thing you need to do is to figure out which website(s) you want to scrape. If you’re investigating customer book reviews, for instance, you might want to scrape relevant data from sites like Amazon, Goodreads, or LibraryThing.

Step two: Inspect the page

Before coding your web scraper, you need to identify what it has to scrape. Right-clicking anywhere on a web page gives you the option to ‘inspect element’ or ‘view page source.’ This reveals the page’s underlying HTML code, which is what the scraper will read.

Step three: Identify the data you want to extract

If you’re looking at book reviews on Amazon, you’ll need to identify where these are located in the page’s HTML. Most browsers’ developer tools automatically highlight the code that corresponds to whatever you select on the page. Your aim is to identify the unique tags that enclose (or ‘nest’) the relevant content (e.g. <div> tags).

Step four: Write the necessary code

Once you’ve found the tags that enclose the relevant content, you’ll need to incorporate these into your preferred scraping software. This basically tells the bot where to look and what to extract. It’s commonly done using Python libraries, which do much of the heavy lifting. You need to specify exactly what data types you want the scraper to parse and store. For instance, if you’re looking for book reviews, you’ll want information such as the book title, author name, and rating.
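For the book-review example, the code might look like the following sketch. It uses Beautiful Soup's CSS-selector methods (select() and select_one()). The review markup and class names are invented, so you would replace them with the tags you identified in step three:

```python
from bs4 import BeautifulSoup

# Invented review markup; real sites use their own tags and class names.
html = """
<div class="review">
  <span class="book-title">Dune</span>
  <span class="author">Frank Herbert</span>
  <span class="rating">5</span>
</div>
<div class="review">
  <span class="book-title">Emma</span>
  <span class="author">Jane Austen</span>
  <span class="rating">4</span>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

reviews = []
for review in soup.select("div.review"):  # every element that encloses one review
    reviews.append(
        {
            "title": review.select_one(".book-title").get_text(strip=True),
            "author": review.select_one(".author").get_text(strip=True),
            "rating": int(review.select_one(".rating").get_text(strip=True)),
        }
    )

print(reviews)
```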

Step five: Execute the code

Once you’ve written the code, the next step is to execute it. Now to play the waiting game! This is where the scraper requests site access, extracts the data, and parses it (as per the steps outlined in the previous section).

Step six: Storing the data

After extracting, parsing, and collecting the relevant data, you’ll need to store it. You can instruct your algorithm to do this by adding extra lines to your code. Which format you choose is up to you, but as mentioned, Excel formats are the most common. You can also run the extracted text through Python’s re module (short for ‘regular expressions’) to get a cleaner set of data that’s easier to read.
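As an illustration of regex cleanup, the sketch below uses Python's built-in re module on made-up scraped strings. It pulls out numeric ratings and collapses stray whitespace:

```python
import re

# Made-up raw strings as they might come out of a scraper.
raw_ratings = ["  4.5 out of 5 stars\n", "Rated 3 out of 5", "No rating yet"]

# Capture an integer or decimal number that is followed by "out of 5".
RATING_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s+out of\s+5")


def parse_rating(text):
    """Return the numeric rating in text as a float, or None if there isn't one."""
    match = RATING_PATTERN.search(text)
    return float(match.group(1)) if match else None


def normalize_whitespace(text):
    """Collapse runs of spaces, tabs, and newlines into single spaces."""
    return re.sub(r"\s+", " ", text).strip()


ratings = [parse_rating(text) for text in raw_ratings]
print(ratings)  # [4.5, 3.0, None]
print(normalize_whitespace("  A   gripping\n\tread  "))  # A gripping read
```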

Now you’ve got the data you need, you’re free to play around with it. Of course, as we often learn in our explorations of the data analytics process, web scraping isn’t always as straightforward as it at first seems. It’s common to make mistakes and you may need to repeat some steps. But don’t worry, this is normal, and practice makes perfect!

5. What tools can you use to scrape the web?

We’ve covered the basics of how to scrape the web for data, but how does this work from a technical standpoint? Often, web scraping requires some knowledge of programming languages, the most popular for the task being Python. Luckily, Python comes with a huge number of open-source libraries that make web scraping much easier. These include:

BeautifulSoup

BeautifulSoup is a Python library commonly used to parse data from XML and HTML documents. Organizing this parsed content into more accessible trees, BeautifulSoup makes navigating and searching through large swathes of data much easier. It’s the go-to tool for many data analysts.

Scrapy

Scrapy is a Python-based application framework that crawls and extracts structured data from the web. It’s commonly used for data mining, information processing, and for archiving historical content. As well as web scraping (which it was specifically designed for) it can be used as a general-purpose web crawler, or to extract data through APIs.

Pandas

Pandas is another multi-purpose Python library used for data manipulation and indexing. It can be used to scrape the web in conjunction with BeautifulSoup. The main benefit of using pandas is that analysts can carry out the entire data analytics process using one language (avoiding the need to switch to other languages, such as R).

ParseHub

A bonus tool, in case you’re not an experienced programmer! ParseHub is a free online tool (to be clear, this one’s not a Python library) that makes it easy to scrape online data. The only catch is that for full functionality you’ll need to pay. But the free tool is worth playing around with, and the company offers excellent customer support.

There are many other tools available, from general-purpose scraping tools to those designed for more sophisticated, niche tasks. The best thing to do is to explore which tools suit your interests and skill set, and then add the appropriate ones to your data analytics arsenal!

6. What else do you need to know about web scraping?

We already mentioned that web scraping isn’t always as simple as following a step-by-step process. Here’s a checklist of additional things to consider before scraping a website.

Have you refined your target data?

When you’re coding your web scraper, it’s important to be as specific as possible about what you want to collect. Keep things too vague and you’ll end up with far too much data (and a headache!). It’s best to invest some time upfront to produce a clear plan. This will save you lots of effort cleaning your data in the long run.

Have you checked the site’s robots.txt?

Most websites have a file called robots.txt at the root of their domain (/robots.txt). This must always be your first port of call. This file communicates with web scrapers, telling them which areas of the site are out of bounds. If a site’s robots.txt disallows scraping on certain (or all) pages, then you should always abide by these instructions.
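Python's standard library can read robots.txt rules for you. This sketch parses a made-up robots.txt from a string so that it runs offline. Against a live site, you would call set_url() with the site's robots.txt address and then read():

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt that blocks /private/ for every user agent.
robots_txt = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

print(parser.can_fetch("*", "https://example.com/products/42"))   # True
print(parser.can_fetch("*", "https://example.com/private/data"))  # False
print(parser.crawl_delay("*"))                                     # 5
```

The Crawl-delay value, when a site provides one, is a natural input for the pause between requests discussed further below.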

Have you checked the site’s terms of service?

In addition to the robots.txt, you should review a website’s terms of service (TOS). While the two should align, this is sometimes overlooked. The TOS might have a formal clause outlining what you can and can’t do with the data on their site. You can get into legal trouble if you break these rules, so make sure you don’t!

Are you following data protection protocols?

Just because certain data is available doesn’t mean you’re allowed to scrape it, free from consequences. Be very careful about the laws in different jurisdictions, and follow each region’s data protection protocols. For instance, in the EU, the General Data Protection Regulation (GDPR) protects personal data, meaning it’s generally against the law to scrape it without a lawful basis, such as people’s explicit consent.

Are you at risk of crashing a website?

Big websites, like Google or Amazon, are designed to handle high traffic. Smaller sites are not. It’s therefore important that you don’t overload a site with too many HTTP requests, which can slow it down, or even crash it completely. In fact, this is a technique often used by hackers. They flood sites with requests to bring them down, in what’s known as a ‘denial of service’ attack. Make sure you don’t carry one of these out by mistake! Don’t scrape too aggressively, either; include plenty of time intervals between requests, and avoid scraping a site during its peak hours.
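One simple way to space out requests is to pause between them. In this hypothetical sketch:

- fetch stands in for whatever function actually downloads a page, for example a wrapper around requests.get.
- sleep is passed in so that the pacing can be checked without waiting.

```python
import time


def polite_fetch_all(urls, fetch, delay_seconds=2.0, sleep=time.sleep):
    """Fetch each URL in turn, pausing delay_seconds between consecutive requests."""
    responses = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # wait between requests, not before the first one
        responses.append(fetch(url))
    return responses


# Demonstration with stand-ins: a fake fetch and a "sleep" that only records delays.
recorded_delays = []
pages = polite_fetch_all(
    ["https://example.com/page/1", "https://example.com/page/2", "https://example.com/page/3"],
    fetch=lambda url: f"<html>{url}</html>",
    delay_seconds=3,
    sleep=recorded_delays.append,
)
print(recorded_delays)  # [3, 3]
```

In real use, you would leave sleep at its default of time.sleep and choose a delay that respects the site, such as its Crawl-delay if it declares one.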

Be mindful of all these considerations, be careful with your code, and you should be happily scraping the web in no time at all.

7. In summary

In this post, we’ve looked at what data scraping is, how it’s used, and what the process involves. Key takeaways include:

- Web scraping can be used to collect all sorts of data types: From images to videos, text, numerical data, and more.

- Web scraping has multiple uses: From contact scraping and trawling social media for brand mentions to carrying out SEO audits, the possibilities are endless.

- Planning is important: Taking time to plan what you want to scrape beforehand will save you effort in the long run when it comes to cleaning your data.

- Python is a popular tool for scraping the web: Python libraries like BeautifulSoup, Scrapy, and pandas are all common tools for scraping the web.

- Don’t break the law: Before scraping the web, check the laws in various jurisdictions, and be mindful not to breach a site’s terms of service.

- Etiquette is important, too: Consider factors such as a site’s resources—don’t overload them, or you’ll risk bringing them down. It’s nice to be nice!

Data scraping is just one of the steps involved in the broader data analytics process. To learn about data analytics, why not check out our free, five-day data analytics short course? We can also recommend the following posts:

- Where to find free datasets for your next project

- What is data quality and why does it matter?

- Quantitative vs. qualitative data: What’s the difference?

Learn Web Scraping with Beautiful Soup

Can’t download the data you need? Learn how to pull data right from the page by web scraping with the Python library Beautiful Soup.

Prerequisites

- Learn Python 3

- Learn Data Analysis with Pandas

About this course

Many of your coding projects may require you to pull a bunch of information from an HTML or XML page. This task can be really tedious and boring, that is until you learn how to scrape the web with an HTML Parser! That’s where Beautiful Soup comes in. This Python package allows you to parse HTML and XML pages with ease and pull all sorts of data off the web.

Say you want to pull all of the tweets from your favorite movie star and run some analysis on their word usage: scrape ’em! Maybe you want to make a digital collage of all the images you’ve posted of your dog to Instagram: parse ’em! And if you want to pull a list of all of your friend’s favorite books from Goodreads: Beautiful Soup ’em!

Beautiful Soup

Learn how to take data that’s displayed on websites and put it into Python using the Beautiful Soup library!

Projects in this course

- Chocolate scraping with Beautiful Soup

Frequently asked questions about Web Scraping with Beautiful Soup

What is web scraping?

Web scraping is a way for programmers to learn more about websites and users. Sometimes you’ll find a website that has all the data you need for a project, but you can’t download it. Fortunately, there are tools like Beautiful Soup (which you’ll learn how to use in this course) that let you pull data from a web page in a usable format.


Modern Web Scraping With Python and Selenium

Web scraping has been around since the early days of the World Wide Web, but scraping modern sites that heavily rely on new technologies is anything but straightforward.

In this article, Toptal Software Developer Neal Barnett demonstrates how you can use Python and Selenium to scrape sites that employ a lot of JavaScript, iframes, and certificates.

By Neal Barnett

Neal is a senior consultant and database expert who brings a wealth of knowledge and more than two decades of experience to the table.

Web scraping has been used to extract data from websites almost from the time the World Wide Web was born. In the early days, scraping was mainly done on static pages – those with known elements, tags, and data.

More recently, however, advanced technologies in web development have made the task a bit more difficult. In this article, we’ll explore how we might go about scraping data when new technology and other factors prevent standard scraping.

Traditional Data Scraping

As most websites produce pages meant for human readability rather than automated reading, web scraping mainly consisted of programmatically digesting a web page’s mark-up data (think right-click, View Source), then detecting static patterns in that data that would allow the program to “read” various pieces of information and save it to a file or a database.

Report data, when it existed, was often accessible by passing either form variables or parameters in the URL.
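To make the URL-parameter idea concrete, here is a small sketch using only the standard library. The host, path, and parameter names are hypothetical stand-ins for whatever a real report page expects; with `requests`, passing the same keyword values as a dictionary to the `params` argument of `requests.get` produces an equivalent query string.

```python
from urllib.parse import urlencode, urljoin


def build_report_url(base: str, path: str, **params: str) -> str:
    """Return base + path with the given parameters encoded as a query string."""
    return f"{urljoin(base, path)}?{urlencode(params)}"


url = build_report_url(
    "https://reports.example.com/",  # hypothetical host
    "report",                        # hypothetical report path
    customer_id="12345",             # hypothetical parameter names and values
    from_month="2019-01",
    to_month="2019-06",
)
print(url)  # https://reports.example.com/report?customer_id=12345&from_month=2019-01&to_month=2019-06
```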

Python has become one of the most popular web scraping languages, due in part to the various web libraries that have been created for it. One popular library, Beautiful Soup, is designed to pull data out of HTML and XML files by letting you search, navigate, and modify tags (i.e., the parse tree).
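A short illustration of those three operations (searching, navigating, and modifying) on a made-up HTML fragment, assuming Beautiful Soup 4 with Python’s built-in `html.parser`:

```python
from bs4 import BeautifulSoup

# A made-up report table standing in for a real page's markup.
html = """
<table id="report">
  <tr><th>Date</th><th>Amount</th></tr>
  <tr><td>2019-01-05</td><td>10.00</td></tr>
  <tr><td>2019-01-09</td><td>25.50</td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")

# Searching: locate the table by its id attribute.
table = soup.find("table", id="report")

# Navigating: walk the rows, skipping the header row.
rows = []
for tr in table.find_all("tr")[1:]:
    rows.append([td.get_text(strip=True) for td in tr.find_all("td")])

# Modifying: tags can be edited in place, e.g. by adding an attribute.
table["data-scraped"] = "yes"

print(rows)  # [['2019-01-05', '10.00'], ['2019-01-09', '25.50']]
```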

Browser-based Scraping

Recently, I had a scraping project that seemed pretty straightforward and I was fully prepared to use traditional scraping to handle it. But as I got further into it, I found obstacles that could not be overcome with traditional methods.

Three main issues prevented me from using my standard scraping methods:

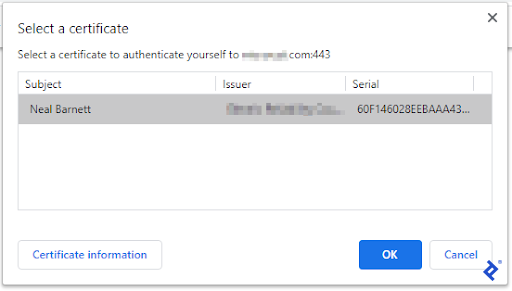

- Certificate. A certificate had to be installed to access the portion of the website where the data was. When I accessed the initial page, a prompt appeared asking me to select the proper certificate from those installed on my computer and click OK.

- Iframes. The site used iframes, which messed up my normal scraping. Yes, I could try to find all iframe URLs, then build a sitemap, but that seemed like it could get unwieldy.

- JavaScript. The data was accessed after filling in a form with parameters (e.g., customer ID, date range, etc.). Normally, I would bypass the form and simply pass the form variables (via URL or as hidden form variables) to the result page and see the results. But in this case, the form contained JavaScript, which didn’t allow me to access the form variables in a normal fashion.

So, I decided to abandon my traditional methods and look at a possible tool for browser-based scraping. This would work differently than normal: instead of going directly to a page, downloading the parse tree, and pulling out data elements, I would instead “act like a human” and use a browser to get to the page I needed, then scrape the data, thus bypassing the barriers mentioned.

Web Scraping With Selenium

In general, Selenium is well-known as an open-source testing framework for web applications – enabling QA specialists to perform automated tests, execute playbacks, and implement remote control functionality (allowing many browser instances for load testing and multiple browser types). In my case, this seemed like it could be useful.

My go-to language for web scraping is Python, as it has well-integrated libraries that can generally handle all of the functionality required. And sure enough, a Selenium library exists for Python. This would allow me to instantiate a “browser” – Chrome, Firefox, IE, etc. – then pretend I was using the browser myself to gain access to the data I was looking for. And if I didn’t want the browser to actually appear, I could create the browser in “headless” mode, making it invisible to any user.

Project Setup

To start experimenting with a Python web scraper, I needed to set up my project and get everything I needed. I used a Windows 10 machine and made sure I had a relatively recent Python version (3.7.3). I created a blank Python script, then loaded the libraries I thought might be required, using pip (the package installer for Python) for any library I didn’t already have. These are the main libraries I started with:

- Requests (for making HTTP requests)

- urllib3 (URL handling)

- Beautiful Soup (in case Selenium couldn’t handle everything)

- Selenium (for browser-based navigation)

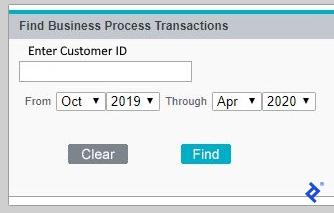

I also added some calling parameters to the script (using the argparse library) so that I could play around with various datasets, calling the script from the command line with different options. Those included customer ID, from-month/year, and to-month/year.
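A sketch of that command-line interface with `argparse`. The argument names and the use of numeric months and years are assumptions, since the original options are only described in prose:

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Read the customer ID and report date range from the command line."""
    parser = argparse.ArgumentParser(description="Scrape transaction data for one customer.")
    parser.add_argument("customer_id", help="customer ID to enter in the search form")
    parser.add_argument("--from-month", type=int, choices=range(1, 13), required=True)
    parser.add_argument("--from-year", type=int, required=True)
    parser.add_argument("--to-month", type=int, choices=range(1, 13), required=True)
    parser.add_argument("--to-year", type=int, required=True)
    return parser.parse_args(argv)  # argv=None means "read sys.argv"
```

A call such as `python scraper.py 12345 --from-month 1 --from-year 2019 --to-month 6 --to-year 2019` (script name hypothetical) would then yield `args.customer_id == "12345"` and integer month and year fields.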

Problem 1 – The Certificate

The first choice I needed to make was which browser I was going to tell Selenium to use. As I generally use Chrome, and it’s built on the open-source Chromium project (also used by Edge, Opera, and Amazon Silk browsers), I figured I would try that first.

I was able to start up Chrome in the script by importing the library components I needed and issuing a couple of simple commands.

Since I didn’t launch the browser in headless mode, the browser actually appeared and I could see what it was doing. It immediately asked me to select a certificate (which I had installed earlier).

The first problem to tackle was the certificate: how could the script select the proper one and accept it in order to get into the website? In my first test of the script, I got the certificate-selection prompt.

This wasn’t good. I did not want to manually click the OK button each time I ran my script.

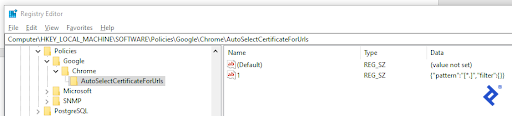

As it turns out, I was able to find a workaround for this without programming. While I had hoped that Chrome could be given a certificate name on startup, that feature did not exist. However, Chrome can autoselect a certificate if a certain entry exists in your Windows registry. You can set it to select the first certificate it sees, or be more specific. Since I only had one certificate loaded, I used the generic format.

Thus, with that set, when I told Selenium to launch Chrome and a certificate prompt came up, Chrome would “AutoSelect” the certificate and continue on.
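For reference, the Chrome policy involved is `AutoSelectCertificateForUrls`. The sketch below only generates the text of a `.reg` file for it; the URL pattern is a placeholder, and an empty `filter` matches any client certificate, which corresponds to the generic form described above. Importing into `HKEY_LOCAL_MACHINE` requires administrator rights, and Chrome’s policy documentation should be checked for the exact filter fields a given certificate needs.

```python
import json
from typing import Optional

POLICY_KEY = r"HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Google\Chrome\AutoSelectCertificateForUrls"


def autoselect_reg_file(url_pattern: str, cert_filter: Optional[dict] = None) -> str:
    """Return .reg file text containing one AutoSelectCertificateForUrls rule.

    An empty filter matches any client certificate (the generic form); a filter such as
    {"ISSUER": {"CN": "Example CA"}} narrows the match to certificates from that issuer.
    """
    rule = json.dumps({"pattern": url_pattern, "filter": cert_filter or {}}, separators=(",", ":"))
    # Inside a .reg string value, backslashes and double quotes are escaped with a backslash.
    escaped = rule.replace("\\", "\\\\").replace('"', '\\"')
    return (
        "Windows Registry Editor Version 5.00\r\n"
        "\r\n"
        f"[{POLICY_KEY}]\r\n"
        f'"1"="{escaped}"\r\n'  # value names "1", "2", ... each hold one rule
    )
```

Saving the returned text as, say, `autoselect.reg` and importing it with Registry Editor would create the entry; the same JSON rule can also be entered by hand as a string value.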

Problem 2 – Iframes

Okay, so now I was in the site and a form appeared, prompting me to type in the customer ID and the date range of the report.

By examining the form in developer tools (F12), I noticed that the form was presented within an iframe. So, before I could start filling in the form, I needed to “switch” to the proper iframe where the form existed. To do this, I used Selenium’s switch_to feature.

Now in the right frame, I was able to identify the form’s components, populate the customer ID field, and select the date drop-downs.

Problem 3 – JavaScript

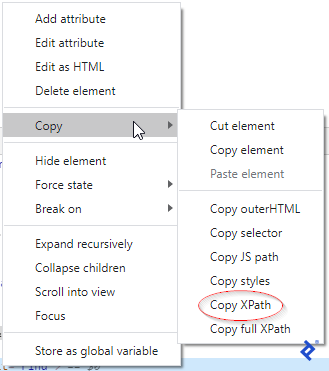

The only thing left on the form was to “click” the Find button to begin the search. This was a little tricky, as the Find button seemed to be controlled by JavaScript and wasn’t a normal “Submit” type button. Inspecting it in developer tools, I found the button image and got its XPath by right-clicking it.

Armed with this information, I located the element on the page and clicked it.

And voilà, the form was submitted and the data appeared! Now, I could just scrape all of the data on the result page and save it as required. Or could I?

Getting the Data

First, I had to handle the case where the search found nothing. That was pretty straightforward. It would display a message on the search form without leaving it, something like “No records found.” I simply searched for that string and stopped right there if I found it.
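A sketch of that check. It matches on the shorter phrase “No records”, in line with the partial-matching advice under website structural changes, and in a Selenium script the text to search would come from `driver.page_source`. The function name is hypothetical:

```python
def search_found_nothing(page_source: str, marker: str = "No records") -> bool:
    """Return True if the search page shows its 'no results' message."""
    # Case-insensitive match on a short prefix of the message, so both "No records found"
    # and a reworded "No records located" are detected.
    return marker.casefold() in page_source.casefold()
```

The script can then stop early with something like `if search_found_nothing(driver.page_source): driver.quit()`.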

But if results did come, the data was presented in divs with a plus sign (+) to open a transaction and show all of its detail. An opened transaction showed a minus sign (-) which when clicked would close the div. Clicking a plus sign would call a URL to open its div and close any open one.

Thus, it was necessary to find any plus signs on the page, gather the URL next to each one, then loop through each to get all data for every transaction.
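One way to gather those URLs, sketched with Beautiful Soup on the page HTML. It assumes each expander is an `<a>` element whose text is `+` with an ordinary `href`; if the plus signs call JavaScript instead, the URLs would have to be read from those script calls, or the elements clicked directly with Selenium. The `expand_urls` name is hypothetical:

```python
from typing import List
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def expand_urls(page_source: str, page_url: str) -> List[str]:
    """Collect the absolute URL behind every plus-sign (+) expander on the results page."""
    soup = BeautifulSoup(page_source, "html.parser")
    urls = []
    for link in soup.find_all("a", href=True):
        if link.get_text(strip=True) == "+":
            urls.append(urljoin(page_url, link["href"]))  # resolve relative links
    return urls
```

The loop would then visit each one in turn, for example `for url in expand_urls(driver.page_source, driver.current_url): driver.get(url)`, reading the opened transaction’s details after each load.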

For each transaction, I retrieved the transaction type and the status, then added to a count of how many transactions fit the specified rules. I could also have retrieved other fields within the transaction detail, such as date and time or subtype.
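The counting step can be kept separate from the browser code. In this sketch, the `Transaction` class and the `rules` set of allowed (type, status) pairs are hypothetical, since the actual rules aren’t described:

```python
from dataclasses import dataclass
from typing import Iterable, Set, Tuple


@dataclass(frozen=True)
class Transaction:
    tx_type: str
    status: str


def count_matching(transactions: Iterable[Transaction], rules: Set[Tuple[str, str]]) -> int:
    """Count transactions whose (type, status) pair is one of the allowed combinations."""
    return sum(1 for tx in transactions if (tx.tx_type, tx.status) in rules)
```

Keeping the rule check as a plain function means it can be tested without launching a browser.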

For this web scraping Python project, the count was returned back to a calling application. However, it and other scraped data could have been stored in a flat file or a database as well.
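If the results needed to be kept rather than returned, the standard library covers both options mentioned. A sketch, assuming rows of (date, type, status) strings and a hypothetical `transactions` table:

```python
import csv
import sqlite3
from typing import Iterable, Tuple

Row = Tuple[str, str, str]  # (date, transaction type, status)


def save_csv(path: str, rows: Iterable[Row]) -> None:
    """Write the scraped rows to a flat CSV file with a header line."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["date", "type", "status"])
        writer.writerows(rows)


def save_sqlite(conn: sqlite3.Connection, rows: Iterable[Row]) -> None:
    """Insert the scraped rows into a SQLite table, creating it if needed."""
    conn.execute("CREATE TABLE IF NOT EXISTS transactions (date TEXT, type TEXT, status TEXT)")
    conn.executemany("INSERT INTO transactions VALUES (?, ?, ?)", rows)
    conn.commit()
```

Parameterized `?` placeholders let SQLite handle quoting, so scraped text containing apostrophes is stored safely.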

Additional Possible Roadblocks and Solutions

Numerous other obstacles might be presented while scraping modern websites with your own browser instance, but most can be resolved. Here are a few:

Trying to find something before it appears

While browsing yourself, how often do you find that you are waiting for a page to come up, sometimes for many seconds? Well, the same can occur while navigating programmatically. You look for a class or other element – and it’s not there!

Luckily, Selenium can wait until it sees a certain element, and time out if the element doesn’t appear.

Getting through a Captcha

Some sites use a Captcha or a similar challenge to block unwanted robots (which they might consider you to be). This can put a damper on web scraping and slow it way down.

For simple prompts (like “what’s 2 + 3?”), the challenge can generally be read and solved easily. For more advanced barriers, there are services and libraries that can help try to crack it, such as 2Captcha, Death by Captcha, and Bypass Captcha.
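For the simple kind of prompt just mentioned, a regular expression is often enough. This sketch handles only a single integer operation written in digits; the function name is hypothetical, and anything more elaborate falls outside what it attempts:

```python
import operator
import re

_OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "x": operator.mul}
_PROMPT = re.compile(r"(\d+)\s*([+\-*x])\s*(\d+)")


def solve_arithmetic_prompt(prompt: str) -> int:
    """Answer a simple text challenge such as "what's 2 + 3?"."""
    match = _PROMPT.search(prompt)
    if match is None:
        raise ValueError(f"no arithmetic found in {prompt!r}")
    left, op, right = match.groups()
    return _OPS[op](int(left), int(right))
```

For example, `solve_arithmetic_prompt("what's 2 + 3?")` returns `5`, and a prompt with no digits raises `ValueError`.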

Website structural changes

Websites are meant to change – and they often do. That’s why when writing a scraping script, it’s best to keep this in mind. You’ll want to think about which methods you’ll use to find the data, and which not to use. Consider partial matching techniques, rather than trying to match a whole phrase. For example, a website might change a message from “No records found” to “No records located” – but if your match is on “No records,” you should be okay. Also, consider whether to match on XPath, ID, name, link text, tag or class name, or CSS selector, and which is least likely to change.
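A small illustration of partial matching and change-tolerant selectors, using Beautiful Soup on a made-up message element (the id and class names are hypothetical):

```python
import re

from bs4 import BeautifulSoup

html = '<div class="msg msg-warning" id="searchMessage">No records located.</div>'
soup = BeautifulSoup(html, "html.parser")

# Partial text match: still works if "No records found" becomes "No records located".
message = soup.find(string=re.compile(r"^\s*No records"))

# Attribute-prefix CSS selector: tolerates a renamed suffix such as "searchMessage2".
box = soup.select_one('[id^="searchMessage"]')

# In Selenium, a comparable partial text match is an XPath contains() test, e.g.
# driver.find_element(By.XPATH, "//*[contains(text(), 'No records')]")
```

Matching on stable fragments like these tends to survive cosmetic redesigns better than long absolute XPaths copied from developer tools.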

Summary: Python and Selenium

This was a brief demonstration to show that almost any website can be scraped, no matter what technologies are used and what complexities are involved. Basically, if you can browse the site yourself, it generally can be scraped.

Now, as a caveat, it does not mean that every website should be scraped. Some have legitimate restrictions in place, and there have been numerous court cases deciding the legality of scraping certain sites. On the other hand, some sites welcome and encourage data to be retrieved from their website and in some cases provide an API to make things easier.