Developing an Asynchronous Task Queue in Python


This tutorial looks at how to implement several asynchronous task queues using Python's multiprocessing library and Redis.

Queue Data Structures


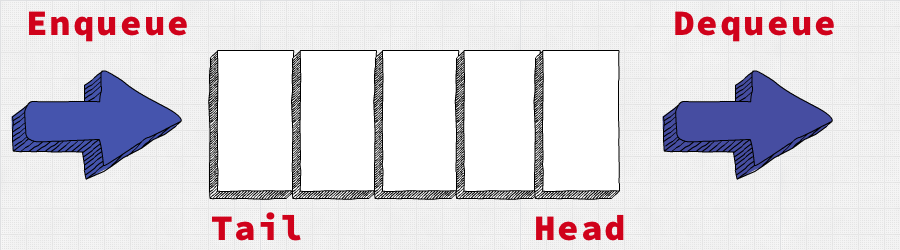

A queue is a First-In-First-Out (FIFO) data structure.

- an item is added at the tail (enqueue)

- an item is removed at the head (dequeue)

You'll see this in practice as you code out the examples in this tutorial.

Let's start by creating a basic task:

So, get_word_counts finds the twenty most frequent words from a given text file and saves them to an output file. It also prints the current process identifier (or pid) using Python's os library.
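The task's source isn't included in this copy of the tutorial. As a rough sketch of the behavior just described, the version below counts words with collections.Counter, drops common words, and saves the top twenty as JSON to a uniquely named file in the output directory. It assumes a small hard-coded COMMON_WORDS set in place of NLTK's stopwords corpus, takes the data and output directories as parameters, and returns the counts so the result is easy to check; none of those details come from the original.

```python
import collections
import json
import os
import uuid
from pathlib import Path

# Assumption: a tiny stand-in for NLTK's English stopwords corpus
COMMON_WORDS = {"the", "a", "an", "and", "of", "to", "in", "i", "it", "is", "was", "that"}


def get_word_counts(filename, data_dir="data", output_dir="output"):
    """Save the 20 most frequent non-stopwords in data_dir/filename as JSON."""
    wordcount = collections.Counter()
    with open(Path(data_dir) / filename, "r", encoding="utf-8") as f:
        for line in f:
            # Lowercase so "The" and "the" are counted (and filtered) together
            wordcount.update(word.lower() for word in line.split())
    for word in COMMON_WORDS:
        del wordcount[word]  # deleting a missing key from a Counter is a no-op

    top_words = dict(wordcount.most_common(20))
    # A random suffix keeps repeated runs from overwriting each other's output
    outfile = Path(output_dir) / f"{filename}_{uuid.uuid4().hex}.txt"
    outfile.write_text(json.dumps(top_words), encoding="utf-8")

    print(f"Processed {filename} with process id: {os.getpid()}")
    return top_words
```

To use the real stopword list, replace COMMON_WORDS with set(stopwords.words("english")) after importing stopwords from nltk.corpus.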

Create a project directory along with a virtual environment. Then, use pip to install NLTK:

Once installed, invoke the Python shell and download the stopwords corpus:

If you experience an SSL error, refer to this article. Example fix:

```pycon
>>> import nltk
>>> nltk.download('stopwords')
[nltk_data] Error loading stopwords: <urlopen error [SSL:
[nltk_data]     CERTIFICATE_VERIFY_FAILED] certificate verify failed:
[nltk_data]     unable to get local issuer certificate (_ssl.c:1056)>
False
>>> import ssl
>>> try:
...     _create_unverified_https_context = ssl._create_unverified_context
... except AttributeError:
...     pass
... else:
...     ssl._create_default_https_context = _create_unverified_https_context
...
>>> nltk.download('stopwords')
[nltk_data] Downloading package stopwords to
[nltk_data]     /Users/michael.herman/nltk_data...
[nltk_data]   Unzipping corpora/stopwords.zip.
True
```

Add the above tasks.py file to your project directory but don't run it quite yet.

We can run this task in parallel using the multiprocessing library:

Here, using the Pool class, we processed four tasks with two processes.
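A minimal sketch of such a script (simple_pool.py in the project tree) follows. To keep it self-contained, a hypothetical fake_word_count stands in for tasks.get_word_counts and simply reports which worker process handled each file; swap in the real import when following along.

```python
import multiprocessing
import os
import time


def fake_word_count(filename):
    """Hypothetical stand-in for tasks.get_word_counts: report which process ran the task."""
    return filename, os.getpid()


def run(filenames, processes=2):
    start_time = time.time()
    pool = multiprocessing.Pool(processes=processes)
    async_result = pool.map_async(fake_word_count, filenames)  # returns immediately
    pool.close()  # no more tasks will be submitted
    pool.join()   # wait for the worker processes to finish and exit
    results = async_result.get()  # results come back in input order
    print(f"Time taken = {time.time() - start_time:.5f}")
    return results


if __name__ == "__main__":
    books = ["pride-and-prejudice.txt", "heart-of-darkness.txt", "frankenstein.txt", "dracula.txt"]
    for name, pid in run(books):
        print(f"{name} handled by pid {pid}")
```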

Did you notice the map_async method? There are essentially four different methods available for mapping tasks to processes. When choosing one, you have to take multi-args, concurrency, blocking, and ordering into account:

| Method | Multi-args | Concurrency | Blocking | Ordered-results |
|---|---|---|---|---|
| map | No | Yes | Yes | Yes |
| map_async | No | No | No | Yes |
| apply | Yes | No | Yes | No |
| apply_async | Yes | Yes | No | No |

Without both close and join, garbage collection may not occur, which could lead to a memory leak.

- close tells the pool not to accept any new tasks

- join waits for the worker processes to exit, which happens once all tasks have completed (close must be called first)
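The following sketch exercises all four methods from the table on one pool and then shuts it down with close and join. The power function and the argument values are illustrative only.

```python
import multiprocessing


def power(base, exponent=2):
    """Hypothetical example task that accepts more than one argument."""
    return base ** exponent


def demo():
    pool = multiprocessing.Pool(processes=2)
    # map: one argument per call; blocks until every result is ready; keeps input order
    squares = pool.map(power, [1, 2, 3])
    # map_async: same call pattern, but returns an AsyncResult right away
    pending_squares = pool.map_async(power, [4, 5, 6])
    # apply: passes several arguments to a single call and blocks until it returns
    cube = pool.apply(power, (2, 3))
    # apply_async: several arguments per call, one AsyncResult per submitted call
    pending_cubes = [pool.apply_async(power, (n, 3)) for n in (3, 4)]
    pool.close()  # stop accepting new tasks
    pool.join()   # wait for the workers to exit so their resources are released
    return squares, pending_squares.get(), cube, [r.get() for r in pending_cubes]


if __name__ == "__main__":
    print(demo())  # ([1, 4, 9], [16, 25, 36], 8, [27, 64])
```

Note that using the pool as a context manager (with multiprocessing.Pool() as pool:) calls terminate() on exit rather than close() and join(), so any results need to be collected before the with block ends.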

Following along? Grab the Project Gutenberg sample text files from the "data" directory in the simple-task-queue repo, and then add an "output" directory. Your project directory should look like this:

```
├── data
│   ├── dracula.txt
│   ├── frankenstein.txt
│   ├── heart-of-darkness.txt
│   └── pride-and-prejudice.txt
├── output
├── simple_pool.py
└── tasks.py
```

It should take less than a second to run:

This script ran on an i9 MacBook Pro with 16 cores.

So, the multiprocessing Pool class handles the queuing logic for us. It's perfect for running CPU-bound tasks or really any job that can be broken up and distributed independently. If you need more control over the queue or need to share data between multiple processes, you may want to look at the Queue class.

For more on this along with the difference between parallelism (multiprocessing) and concurrency (multithreading), review the Speeding Up Python with Concurrency, Parallelism, and asyncio article.

Let's look at a simple example:

The Queue class, also from the multiprocessing library, is a basic FIFO (first in, first out) data structure. It's similar to the queue.Queue class, but designed for interprocess communication. We used put to enqueue an item to the queue and get to dequeue an item.
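A minimal sketch of put and get on a multiprocessing.Queue, run within a single process for brevity; in practice the queue would be passed to worker processes. The function name fifo_roundtrip is hypothetical.

```python
import multiprocessing


def fifo_roundtrip(items):
    """Enqueue every item, then dequeue the same number of items."""
    queue = multiprocessing.Queue()
    for item in items:
        queue.put(item)  # enqueue at the tail
    # Dequeue from the head; get() blocks until an item is available
    return [queue.get() for _ in items]


if __name__ == "__main__":
    books = ["pride-and-prejudice.txt", "heart-of-darkness.txt", "frankenstein.txt", "dracula.txt"]
    print(fifo_roundtrip(books))  # same order the books were put in
```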

Check out the Queue source code for a better understanding of the mechanics of this class.

Now, let's look at a more advanced example:

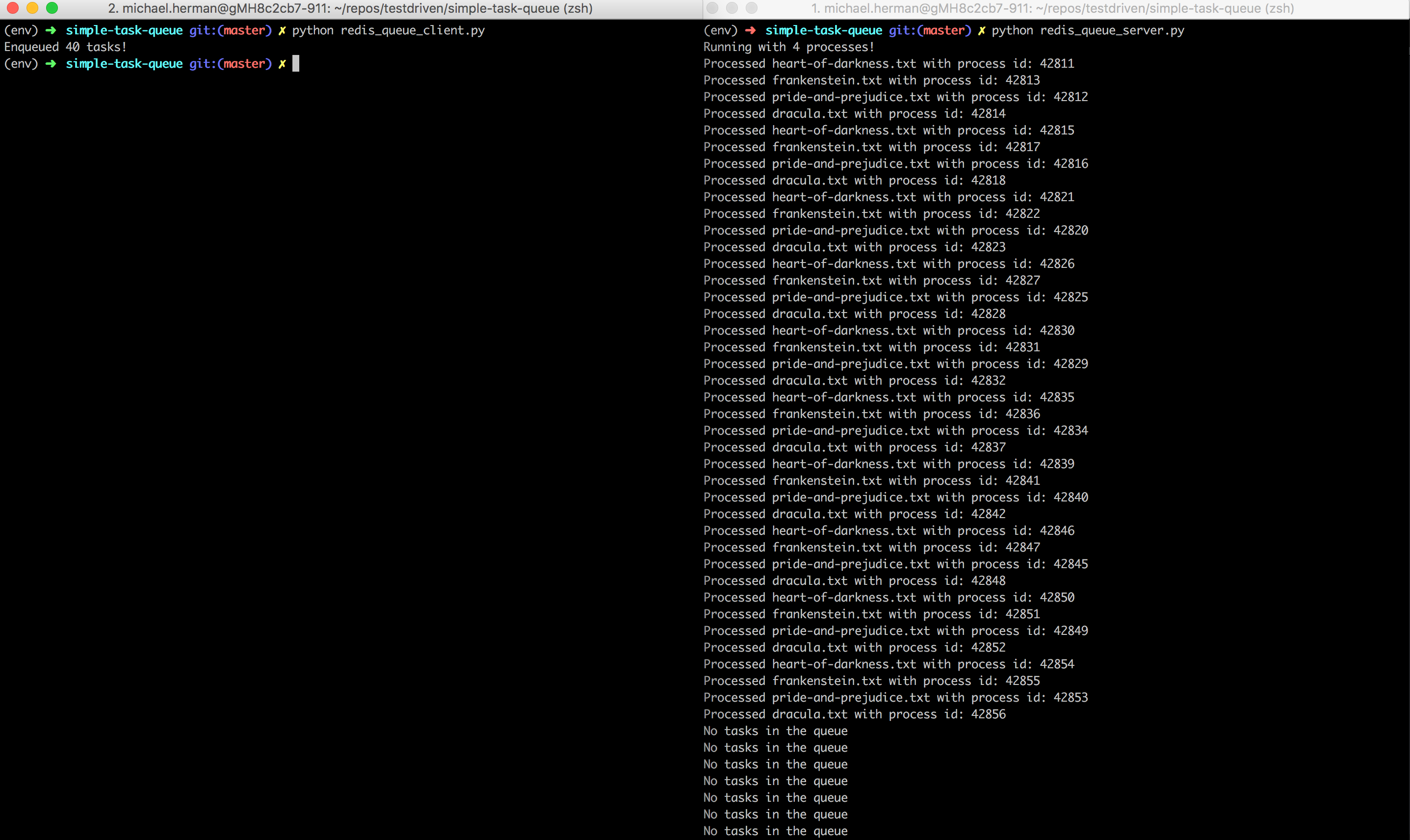

Here, we enqueued 40 tasks (ten for each text file) to the queue, created separate processes via the Process class, used start to start running the processes, and, finally, used join to wait for the processes to finish.
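The script itself isn't reproduced here, so the following is a sketch of the same pattern under a few assumptions: the worker records (book, pid) pairs on a second queue instead of calling get_word_counts, and each worker stops when it reads a None sentinel. Sentinels replace a loop that checks task_queue.empty(), which can race: two workers may both see one remaining task, and the loser then blocks forever in get(). The results queue is also one possible approach to the challenge that follows.

```python
import multiprocessing
import os


def process_tasks(task_queue, done_queue):
    """Worker loop: pull book names until a None sentinel arrives."""
    while True:
        book = task_queue.get()
        if book is None:  # sentinel: no more work for this worker
            break
        # Hypothetical stand-in for get_word_counts(book)
        done_queue.put((book, os.getpid()))


def run(books, copies=10, workers=4):
    task_queue = multiprocessing.Queue()
    done_queue = multiprocessing.Queue()
    tasks = [book for book in books for _ in range(copies)]
    for book in tasks:
        task_queue.put(book)
    for _ in range(workers):
        task_queue.put(None)  # one sentinel per worker, after all real tasks

    processes = [
        multiprocessing.Process(target=process_tasks, args=(task_queue, done_queue))
        for _ in range(workers)
    ]
    for p in processes:
        p.start()
    # Drain results before join(): a child with unflushed queue data may not exit
    results = [done_queue.get() for _ in tasks]
    for p in processes:
        p.join()
    return results


if __name__ == "__main__":
    books = ["pride-and-prejudice.txt", "heart-of-darkness.txt", "frankenstein.txt", "dracula.txt"]
    print(f"{len(run(books))} tasks processed")
```

Results are read from done_queue before calling join() because, as the multiprocessing documentation warns, a process that has put items on a queue waits until they are flushed to the underlying pipe before it terminates.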

It should still take less than a second to run.

Challenge: Check your understanding by adding another queue to hold completed tasks. You can enqueue them within the process_tasks function.

The multiprocessing library provides support for logging as well:
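The original logging snippet is missing here. As a minimal sketch, assuming a worker loop that stops on a None sentinel and using a plain file open as a stand-in for get_word_counts: multiprocessing.log_to_stderr attaches a stderr handler to the multiprocessing logger, and each worker reports failures through multiprocessing.get_logger().

```python
import logging
import multiprocessing


def process_tasks(task_queue):
    """Worker loop that logs failures instead of crashing the process."""
    logger = multiprocessing.get_logger()
    proc = multiprocessing.current_process().name
    while True:
        filename = task_queue.get()
        if filename is None:  # sentinel: no more work
            break
        try:
            # Stand-in for get_word_counts: fails the same way on a misspelled file name
            with open(filename, encoding="utf-8") as f:
                f.read()
        except OSError as exc:
            logger.error("%s failed on %s: %s", proc, filename, exc)
    return True


def setup_logging(level=logging.INFO):
    """Send the multiprocessing logger's records to stderr; call before starting workers."""
    logger = multiprocessing.log_to_stderr()
    logger.setLevel(level)
    return logger
```

In the main script, setup_logging() would be called before starting the Process objects, with one None put on the task queue per worker after the file names.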

To test, change task_queue.put("dracula.txt") to task_queue.put("drakula.txt"). You should see the following error printed ten times in the terminal:

Want to log to disk?

Again, cause an error by altering one of the file names, and then run it. Take a look at process.log . It's not quite as organized as it should be since the Python logging library does not use shared locks between processes. To get around this, let's have each process write to its own file. To keep things organized, add a logs directory to your project folder:
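One way to give each process its own file is sketched below, under the assumption that logs are named process_<pid>.log inside the logs directory (a hypothetical naming scheme): fetch the multiprocessing logger, attach a FileHandler for the current pid, and skip attaching a second handler if the function runs twice in the same process.

```python
import logging
import multiprocessing
import os


def create_logger(log_dir="logs"):
    """Return the multiprocessing logger, writing to a file named after this process's pid."""
    logger = multiprocessing.get_logger()
    logger.setLevel(logging.INFO)
    path = os.path.abspath(os.path.join(log_dir, f"process_{os.getpid()}.log"))
    # Only attach one file handler per log file, even if this is called repeatedly
    if not any(getattr(h, "baseFilename", None) == path for h in logger.handlers):
        handler = logging.FileHandler(path)
        handler.setFormatter(
            logging.Formatter("%(levelname)s: %(asctime)s - %(process)s - %(message)s")
        )
        logger.addHandler(handler)
    return logger
```

Calling create_logger() at the top of the worker function, rather than in the parent, makes os.getpid() return the worker's pid so each process writes to its own file.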

Moving right along, instead of using an in-memory queue, let's add Redis into the mix.

Following along? Download and install Redis if you do not already have it installed. Then, install the Python interface:

```sh
(env)$ pip install redis==4.5.5
```

We'll break the logic up into four files:

- redis_queue.py creates new queues and tasks via the SimpleQueue and SimpleTask classes, respectively.

- redis_queue_client.py enqueues new tasks.

- redis_queue_worker.py dequeues and processes tasks.

- redis_queue_server.py spawns worker processes.

Here, we defined two classes, SimpleQueue and SimpleTask :

- SimpleQueue creates a new queue and enqueues, dequeues, and gets the length of the queue.

- SimpleTask creates new tasks (which the SimpleQueue instance uses when enqueueing) and processes them.

Curious about lpush(), brpop(), and llen()? Refer to the Command reference page. (The brpop() function is particularly cool because it blocks the connection until a value exists to be popped!)
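The module's code isn't included here, so below is a minimal sketch of the two classes as described. Assumptions: tasks are serialized with pickle, the queue accepts any connection object exposing lpush, brpop, and llen (a redis.Redis() instance in practice), and SimpleTask keeps its return value in a result attribute. Pushing on the left and popping on the right is what makes the Redis list behave as a FIFO queue.

```python
import pickle
import uuid


class SimpleTask:
    """A unit of work: a function plus the positional arguments to call it with."""

    def __init__(self, func, *args):
        self.id = str(uuid.uuid4())
        self.func = func
        self.args = args
        self.result = None

    def process_task(self):
        self.result = self.func(*self.args)
        return self.result


class SimpleQueue:
    """FIFO queue on a Redis list: LPUSH adds at the head, BRPOP removes from the tail."""

    def __init__(self, conn, name):
        self.conn = conn  # e.g. redis.Redis(); any object with lpush/brpop/llen works
        self.name = name

    def enqueue(self, func, *args):
        task = SimpleTask(func, *args)
        # pickle stores func by reference, so the worker must be able to import it
        self.conn.lpush(self.name, pickle.dumps(task, protocol=pickle.HIGHEST_PROTOCOL))
        return task.id

    def dequeue(self):
        # brpop blocks until an item exists, then returns a (key, value) pair
        _, serialized_task = self.conn.brpop(self.name)
        task = pickle.loads(serialized_task)  # only unpickle data from a trusted queue
        task.process_task()
        return task

    def get_length(self):
        return self.conn.llen(self.name)
```

Because unpickling can execute arbitrary code, this design assumes that only trusted clients can write to the Redis list.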

This module will create a new instance of Redis and the SimpleQueue class. It will then enqueue 40 tasks.

If a task is available, the dequeue method is called, which then deserializes the task and calls the process_task method (in redis_queue.py).

The run method spawns four new worker processes.
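A sketch of the worker and server logic, assuming the worker receives a zero-argument factory (make_queue, a hypothetical name) that builds a SimpleQueue-style object with get_length and dequeue inside the child process, so that no Redis connection is shared across processes.

```python
import multiprocessing
import os


def worker(make_queue):
    """Drain tasks from the queue returned by make_queue(); return how many were processed."""
    queue = make_queue()  # build the connection inside the worker process
    processed = 0
    while queue.get_length() > 0:
        # With a BRPOP-based dequeue, this waits if another worker took the last task first
        queue.dequeue()
        processed += 1
    return processed


def run(target, args=(), num_workers=4):
    """Spawn num_workers processes running target(*args), wait for them, return exit codes."""
    print(f"Running with {os.cpu_count()} CPUs")
    processes = [
        multiprocessing.Process(target=target, args=args, name=f"worker-{i}")
        for i in range(num_workers)
    ]
    for p in processes:
        p.start()
    for p in processes:
        p.join()
    return [p.exitcode for p in processes]
```

With Redis, the factory could be a module-level function returning SimpleQueue(redis.Redis(), "sample") (the queue name is hypothetical), started with run(worker, args=(make_queue,)).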

You probably don’t want four processes running at once all the time, but there may be times that you will need four or more processes. Think about how you could programmatically spin up and down additional workers based on demand.

To test, run redis_queue_server.py and redis_queue_client.py in separate terminal windows:

Check your understanding again by adding logging to the above application.

In this tutorial, we looked at a number of asynchronous task queue implementations in Python. If the requirements are simple enough, it may be easier to develop a queue in this manner. That said, if you're looking for more advanced features -- like task scheduling, batch processing, job prioritization, and retrying of failed tasks -- you should look into a full-blown solution. Check out Celery, RQ, or Huey.

Grab the final code from the simple-task-queue repo.


Flask by Example – Implementing a Redis Task Queue


This part of the tutorial details how to implement a Redis task queue to handle text processing.

- 02/12/2020: Upgraded to Python version 3.8.1 as well as the latest versions of Redis, Python Redis, and RQ. See below for details. Mention a bug in the latest RQ version and provide a solution. Solved the http before https bug.

- 03/22/2016: Upgraded to Python version 3.5.1 as well as the latest versions of Redis, Python Redis, and RQ. See below for details.

- 02/22/2015: Added Python 3 support.


Remember, here’s what we’re building: a Flask app that calculates word-frequency pairs based on the text from a given URL.

- Part One: Set up a local development environment and then deploy both a staging and a production environment on Heroku.

- Part Two: Set up a PostgreSQL database along with SQLAlchemy and Alembic to handle migrations.

- Part Three: Add in the back-end logic to scrape and then process the word counts from a webpage using the requests, BeautifulSoup, and Natural Language Toolkit (NLTK) libraries.

- Part Four: Implement a Redis task queue to handle the text processing. (current)

- Part Five: Set up Angular on the front-end to continuously poll the back-end to see if the request is done processing.

- Part Six: Push to the staging server on Heroku, setting up Redis and detailing how to run two processes (web and worker) on a single Dyno.

- Part Seven: Update the front-end to make it more user-friendly.

- Part Eight: Create a custom Angular Directive to display a frequency distribution chart using JavaScript and D3.

Need the code? Grab it from the repo.

Tools used:

- Redis (5.0.7)

- Python Redis (3.4.1)

- RQ (1.2.2), a simple library for creating a task queue

Start by downloading and installing Redis from either the official site or via Homebrew (brew install redis). Once installed, start the Redis server:

Next, install Python Redis and RQ in a new terminal window:

Let’s start by creating a worker process to listen for queued tasks. Create a new file, worker.py, and add this code:

Here, we listened for a queue called default and established a connection to the Redis server on localhost:6379 .

Fire this up in another terminal window:

Now we need to update our app.py to send jobs to the queue…

Add the following imports to app.py :

Then update the configuration section:

The updated configuration sets up a Redis connection, and q = Queue(connection=conn) initializes a queue based on that connection.

Move the text processing functionality out of our index route and into a new function called count_and_save_words() . This function accepts one argument, a URL, which we will pass to it when we call it from our index route.

Take note of the following code:

Note: We need to import the count_and_save_words function inside our index function, because the RQ package currently has a bug where it won’t find functions in the same module.

Here we used the queue that we initialized earlier and called the enqueue_call() function. This added a new job to the queue, and that job ran the count_and_save_words() function with the URL as the argument. The result_ttl=5000 argument tells RQ how long to hold on to the result of the job: 5,000 seconds, in this case. Then we printed the job id to the terminal. This id is needed to see if the job is done processing.

Let’s set up a new route for that…

Let’s test this out.

Fire up the server, navigate to http://localhost:5000/ , use the URL https://realpython.com , and grab the job id from the terminal. Then use that id in the ‘/results/’ endpoint - i.e., http://localhost:5000/results/ef600206-3503-4b87-a436-ddd9438f2197 .

As long as fewer than 5,000 seconds have elapsed before you check the status, you should see an id number, which is generated when we add the results to the database:

Now, let’s refactor the route slightly to return the actual results from the database in JSON:

Make sure to add the import:

Test this out again. If all went well, you should see something similar to the following in your browser:

In Part 5 we’ll bring the client and server together by adding Angular into the mix to create a poller , which will send a request every five seconds to the /results/<job_key> endpoint asking for updates. Once the data is available, we’ll add it to the DOM.

This is a collaboration piece between Cam Linke, co-founder of Startup Edmonton, and the folks at Real Python.


Task queues

Task queues manage background work that must be executed outside the usual HTTP request-response cycle.

Why are task queues necessary?

Tasks are handled asynchronously either because they are not initiated by an HTTP request or because they are long-running jobs that would dramatically reduce the performance of an HTTP response.

For example, a web application could poll the GitHub API every 10 minutes to collect the names of the top 100 starred repositories. A task queue would handle invoking code to call the GitHub API, process the results and store them in a persistent database for later use.

Another example is when a database query would take too long during the HTTP request-response cycle. The query could be performed in the background on a fixed interval with the results stored in the database. When an HTTP request comes in that needs those results a query would simply fetch the precalculated result instead of re-executing the longer query. This precalculation scenario is a form of caching enabled by task queues.
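The precalculation pattern can be sketched with the standard library alone. In the version below, a background thread stands in for a task queue's periodic scheduler: it re-runs a slow computation on a fixed interval, while request handlers only read the stored result. A real deployment would use a task queue and persist the value in the database as described; the in-memory PrecomputedValue class is hypothetical.

```python
import threading


class PrecomputedValue:
    """Recompute an expensive value every `interval` seconds in a background thread."""

    def __init__(self, compute, interval):
        self._compute = compute
        self._interval = interval
        self._lock = threading.Lock()
        self._value = compute()  # prime the cache so the first request never waits
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._refresh_loop, daemon=True)
        self._thread.start()

    def _refresh_loop(self):
        # Event.wait() returns False on timeout (time to refresh) and True once stop() is called
        while not self._stop.wait(self._interval):
            value = self._compute()  # slow work happens outside the lock
            with self._lock:
                self._value = value

    def get(self):
        """What a request handler would call: returns the latest precomputed result."""
        with self._lock:
            return self._value

    def stop(self):
        self._stop.set()
        self._thread.join()
```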

Other types of jobs for task queues include:

- spreading out large numbers of independent database inserts over time instead of inserting everything at once

- aggregating collected data values on a fixed interval, such as every 15 minutes

- scheduling periodic jobs such as batch processes

Task queue projects

The de facto standard Python task queue is Celery. Other task queue projects tend to arise from the perspective that Celery is overly complicated for simple use cases. My recommendation is to put the effort into Celery's reasonable learning curve, as it is worth the time it takes to understand how to use the project.

The Celery distributed task queue is the most commonly used Python library for handling asynchronous tasks and scheduling.

The RQ (Redis Queue) is a simple Python library for queueing jobs and processing them in the background with workers. RQ is backed by Redis and is designed to have a low barrier to entry. The intro post contains information on design decisions and how to use RQ.

Taskmaster is a lightweight simple distributed queue for handling large volumes of one-off tasks.

Huey is a simple task queue that uses Redis on the backend but otherwise does not depend on other libraries. The project was previously known as Invoker before the author renamed it.

Huey is a Redis-based task queue that aims to provide a simple, yet flexible framework for executing tasks. Huey supports task scheduling, crontab-like repeating tasks, result storage and automatic retry in the event of failure.

Hosted message and task queue services

Task queue third party services aim to solve the complexity issues that arise when scaling out a large deployment of distributed task queues.

Iron.io is a distributed messaging service platform that works with many types of task queues such as Celery. It is also built to work with other IaaS and PaaS environments such as Amazon Web Services and Heroku.

Amazon Simple Queue Service (SQS) is a set of five APIs for creating, sending, receiving, modifying and deleting messages.

CloudAMQP is at its core managed servers with RabbitMQ installed and configured. This service is an option if you are using RabbitMQ and do not want to maintain RabbitMQ installations on your own servers.

Open source examples that use task queues

Take a look at the code in this open source Flask application and this Django application for examples of how to use and deploy Celery with a Redis broker to send text messages with these frameworks.

flask-celery-example is a simple Flask application with Celery as a task queue and Redis as the broker.

Task queue resources

Getting Started Scheduling Tasks with Celery is a detailed walkthrough for setting up Celery with Django (although Celery can also be used without a problem with other frameworks).

International Space Station notifications with Python and Redis Queue (RQ) shows how to combine the RQ task queue library with Flask to send text message notifications every time a condition is met - in this blog post's case that the ISS is currently flying over your location on Earth.

Evaluating persistent, replicated message queues is a detailed comparison of Amazon SQS, MongoDB, RabbitMQ, HornetQ and Kafka's designs and performance.

Queues.io is a collection of task queue systems with short summaries for each one. The task queues are not all compatible with Python but ones that work with it are tagged with the "Python" keyword.

Why Task Queues is a presentation on what task queues are and why they are needed.

Flask by Example Implementing a Redis Task Queue provides a detailed walkthrough of setting up workers to use RQ with Redis.

How to use Celery with RabbitMQ is a detailed walkthrough for using these tools on an Ubuntu VPS.

Heroku has a clear walkthrough for using RQ for background tasks .

Introducing Celery for Python+Django provides an introduction to the Celery task queue.

Celery - Best Practices explains things you should not do with Celery and shows some underused features for making task queues easier to work with.

The "Django in Production" series by Rob Golding contains a post specifically on Background Tasks .

Asynchronous Processing in Web Applications Part One and Part Two are great reads for understanding what a task queue is and why you shouldn't use your database as one.

Celery in Production on the Caktus Group blog contains good practices from their experience using Celery with RabbitMQ, monitoring tools and other aspects not often discussed in existing documentation.

A 4 Minute Intro to Celery is a short introductory task queue screencast.

Heroku wrote about how to secure Celery when tasks are otherwise sent over unencrypted networks.

Miguel Grinberg wrote a nice post on using the task queue Celery with Flask . He gives an overview of Celery followed by specific code to set up the task queue and integrate it with Flask.

3 Gotchas for Working with Celery are things to keep in mind when you're new to the Celery task queue implementation.

Deferred Tasks and Scheduled Jobs with Celery 3.1, Django 1.7 and Redis is a video along with code that shows how to set up Celery with Redis as the broker in a Django application.

Setting up an asynchronous task queue for Django using Celery and Redis is a straightforward tutorial for setting up the Celery task queue for Django web applications using the Redis broker on the back end.

Background jobs with Django and Celery shows the code and a simple explanation of how to use Celery with Django .

Asynchronous Tasks With Django and Celery shows how to integrate Celery with Django and create Periodic Tasks.

Three quick tips from two years with Celery provides some solid advice on retry delays, the -Ofair flag and global task timeouts for Celery.

Task queue learning checklist

Pick a slow function in your project that is called during an HTTP request.

Determine if you can precompute the results on a fixed interval instead of during the HTTP request. If so, create a separate function you can call from elsewhere then store the precomputed value in the database.

Read the Celery documentation and the links in the resources section above to understand how the project works.

Install a message broker such as RabbitMQ or Redis and then add Celery to your project. Configure Celery to work with the installed message broker.

Use Celery to invoke the function from step one on a regular basis.

Have the HTTP request function use the precomputed value instead of the slow running code it originally relied upon.

What's next to learn after task queues?

How do I log errors that occur in my application?

I want to learn more about app users via web analytics.

What tools exist for monitoring a deployed web app?


RQ ( Redis Queue ) is a simple Python library for queueing jobs and processing them in the background with workers. It is backed by Redis and it is designed to have a low barrier to entry. It can be integrated in your web stack easily.

RQ requires Redis >= 3.0.0.

Getting started

First, run a Redis server. You can use an existing one. To put jobs on queues, you don’t have to do anything special; just define your typically lengthy or blocking function:

Then, create a RQ queue:

And enqueue the function call:

Scheduling jobs is similarly easy:

You can also ask RQ to retry failed jobs:

To start executing enqueued function calls in the background, start a worker from your project’s directory:

That’s about it.

Installation

Simply use the following command to install the latest released version:

If you want the cutting edge version (that may well be broken), use this:

Project history

This project has been inspired by the good parts of Celery , Resque and this snippet , and has been created as a lightweight alternative to existing queueing frameworks, with a low barrier to entry.

Using Python RQ for Task Queues in Python

This is a getting-started tutorial on python-rq, in which I will demonstrate how to work with asynchronous tasks using Python Redis Queue (python-rq).

What will we be doing

We want a client to submit thousands of jobs in a non-blocking, asynchronous fashion, and then have workers consume these jobs from our Redis queue and process those tasks at whatever rate our consumers can handle.

The nice thing about this is that if our consumer is unavailable for processing, the tasks will remain in the queue, and once the consumer is ready to consume, the tasks will be executed. It's also nice that it's asynchronous, so the client doesn't have to wait until the task has finished.

We will run a redis server using docker, which will be used to queue all our jobs, then we will go through the basics in python and python-rq such as:

- Writing a Task

- Enqueueing a Job

- Getting information from our queue, listing jobs, job statuses

- Running our workers to consume from the queue and action our tasks

- Basic application which queues jobs to the queue, consumes and action them and monitors the queue

Redis Server

You will need Docker for this next step, to start the Redis server:

Install python-rq:

Create the task which will be actioned by our workers. In our case it will just be a simple function that adds all the numbers from a given string to a list, then adds them up and returns the total value.

This is a very basic task, but it's just for demonstration.

Our tasks.py:
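The task code isn't shown here; a minimal sketch follows, assuming the hypothetical name sum_numbers_from_string and treating each run of consecutive digits as one number.

```python
import re


def sum_numbers_from_string(text):
    """Collect every number that appears in text into a list, then return their total."""
    numbers = [int(match) for match in re.findall(r"\d+", text)]
    return sum(numbers)
```

For example, sum_numbers_from_string("a1b22c3") collects [1, 22, 3] and returns 26.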

To test this locally:

Now, let's import redis and python-rq along with our tasks, and instantiate a queue object:

Submit a Task to the Queue

Let's submit a task to the queue:

We have a couple of properties from result which we can inspect, first let's have a look at the id that we got back when we submitted our task to the queue:

We can also get the status from our task:

We can also view our results in json format:

If we don't know the job id, we can use get_jobs to get all the queued jobs:

Then we can loop through the results and get the id like below:

Or to get the job ids in a list:

Since we received the job id, we can use fetch_job to get more info about the job:

And as before we can view it in json format:

We can also view the key in redis by passing the job_id:

To view how many jobs are in our queue, we can either do:

Consuming from the Queue

Now that our task is queued, let's fire off our worker to consume the job from the queue and action the task:

Now, when we get the status of our job, you will see that it finished:

And to get the result from our worker:

And as before, if you don't know your job id, you can get the job id first and then return the result:

Naming Queues

We can namespace our tasks into specific queues, for example, if we want to create queue1:

To verify the queue name:

As we can see our queue is empty:

Let's submit 10 jobs to our queue:

To verify the number of jobs in our queue:

And to count them:

Cleaning the Queue

Cleaning the queue can be done with:

Then to verify that our queue is clean:

Naming Workers

The same way that we defined a name for our queue, we can define a name for our workers:

Which means you can have different workers consuming jobs from specific queues.

Documentation:

- https://python-rq.org/docs/

- https://python-rq.org/docs/workers/

- https://python-rq.org/docs/monitoring/

Thanks for reading! Feel free to check out my website, and subscribe to my newsletter or follow me at @ruanbekker on Twitter.



Python task queue using Redis

closeio/tasktiger


TaskTiger is a Python task queue using Redis.


Per-task fork or synchronous worker

By default, TaskTiger forks a subprocess for each task. This comes with several benefits:

- Memory leaks caused by tasks are avoided since the subprocess is terminated when the task is finished.

- A hard time limit can be set for each task, after which the task is killed if it hasn't completed.

- To ensure performance, any necessary Python modules can be preloaded in the parent process.
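To illustrate the fork-per-task idea (this is not TaskTiger's implementation), the sketch below runs a function in a fresh child process and kills it if it exceeds a hard time limit. The name run_forked and its True/False return convention are assumptions.

```python
import multiprocessing


def run_forked(func, args=(), hard_timeout=None):
    """Run func(*args) in a child process; kill it if it exceeds hard_timeout seconds.

    Returns True if the child exited cleanly, False if it failed or was killed.
    """
    child = multiprocessing.Process(target=func, args=args)
    child.start()
    child.join(hard_timeout)  # None means wait indefinitely
    if child.is_alive():
        child.terminate()  # hard time limit reached: kill the task
        child.join()
        return False
    # Any memory the task leaked is released because the child process has exited
    return child.exitcode == 0
```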

TaskTiger also supports synchronous workers, which allow for better performance due to no forking overhead, and tasks have the ability to reuse network connections. To prevent memory leaks from accumulating, workers can be set to shut down after a certain amount of time, at which point a supervisor can restart them. Workers also automatically exit on hard timeouts to prevent an inconsistent process state.

Unique queues

TaskTiger has the option to avoid duplicate tasks in the task queue. In some cases it is desirable to combine multiple similar tasks. For example, imagine a task that indexes objects (e.g. to make them searchable). If an object is already present in the task queue and hasn't been processed yet, a unique queue will ensure that the indexing task doesn't have to do duplicate work. However, if the task is already running while it's queued, the task will be executed another time to ensure that the indexing task always picks up the latest state.
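A toy, in-memory version of the unique-queue behavior, to illustrate the idea only (TaskTiger keeps its state in Redis and its code differs): a task is identified by its function name and arguments, duplicates are rejected while a copy is still waiting, and the key is released as soon as the task is dequeued, so re-queueing during execution is allowed. UniqueQueue is a hypothetical name.

```python
from collections import deque


class UniqueQueue:
    """FIFO queue that skips a task if an identical one is still waiting to run."""

    def __init__(self):
        self._items = deque()
        self._pending = set()  # (func_name, args) keys queued but not yet dequeued

    def enqueue(self, func_name, *args):
        key = (func_name, args)  # args must be hashable in this sketch
        if key in self._pending:
            return False  # duplicate of a task that hasn't started yet
        self._pending.add(key)
        self._items.append(key)
        return True

    def dequeue(self):
        key = self._items.popleft()
        # A dequeued task counts as running, not pending, so queueing it again
        # is accepted and it will run once more with the latest state
        self._pending.discard(key)
        return key
```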

TaskTiger can ensure that no more than one instance of a task with similar arguments executes at a time by acquiring a lock. If a task hits a lock, it is requeued and scheduled for later execution after a configurable interval.

Task retrying

TaskTiger lets you retry exceptions (all exceptions or a list of specific ones) and comes with configurable retry intervals (fixed, linear, exponential, custom).
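The retry interval styles can be sketched as functions that map a retry number (starting at 1) to a delay in seconds. These helpers, including call_with_retries and its retry_on and sleep parameters, are hypothetical illustrations rather than TaskTiger's retry API.

```python
import time


def fixed_delay(delay):
    """Same wait (in seconds) before every retry."""
    return lambda retry: delay


def linear_delay(delay, increment):
    """Wait delay, then delay + increment, delay + 2 * increment, ..."""
    return lambda retry: delay + increment * (retry - 1)


def exponential_delay(delay, factor):
    """Wait delay, then delay * factor, delay * factor ** 2, ..."""
    return lambda retry: delay * factor ** (retry - 1)


def retry_delays(strategy, max_retries):
    """Delays before retries 1..max_retries for a given strategy."""
    return [strategy(retry) for retry in range(1, max_retries + 1)]


def call_with_retries(func, strategy, max_retries, retry_on=(Exception,), sleep=time.sleep):
    """Call func(), retrying only the exception types listed in retry_on."""
    for attempt in range(max_retries + 1):
        try:
            return func()
        except retry_on:
            if attempt == max_retries:
                raise  # out of retries: let the failure surface
            sleep(strategy(attempt + 1))
```

A custom interval is just another function of the retry number, for example lambda retry: 60 if retry < 3 else 600.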

Flexible queues

Tasks can be easily queued in separate queues. Workers pick tasks from a randomly chosen queue, ensuring that all queues are processed equally, and can be configured to only process specific queues. TaskTiger also supports subqueues, which are separated by a period. For example, you can have per-customer queues in the form process_emails.CUSTOMER_ID and start a worker to process process_emails and any of its subqueues. Since tasks are picked from a random queue, all customers get equal treatment: if one customer is queueing many tasks, it can't block other customers' tasks from being processed. A maximum queue size can also be enforced.
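A sketch of the queue-selection rule described above, assuming queues are held in a dict mapping queue name to pending tasks: a worker picks uniformly among the non-empty queues it is allowed to process (including subqueues), so a queue holding 100 tasks is no more likely to be chosen than one holding a single task. The helpers matches and pick_queue are hypothetical, not TaskTiger's code.

```python
import random


def matches(queue_name, allowed):
    """True if queue_name is one of allowed or a subqueue of one ('a' matches 'a.b')."""
    return any(queue_name == q or queue_name.startswith(q + ".") for q in allowed)


def pick_queue(queues, allowed=None, rng=random):
    """Choose a random non-empty queue this worker may process, or None if there is none.

    queues maps queue name -> list of pending tasks.
    """
    candidates = [
        name for name, tasks in queues.items()
        if tasks and (allowed is None or matches(name, allowed))
    ]
    return rng.choice(candidates) if candidates else None
```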

Batch queues

Batch queues can be used to combine multiple queued tasks into one. That way, your task function can process multiple sets of arguments at the same time, which can improve performance. The batch size is configurable.
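A minimal, in-memory sketch of batching (hypothetical helpers, not TaskTiger's API): pending tasks are removed from the head of the queue up to batch_size at a time, and the task function is called once per batch with a list of argument sets.

```python
from collections import deque


def take_batch(queue, batch_size):
    """Remove up to batch_size items from the head of queue and return them as a list."""
    batch = []
    while queue and len(batch) < batch_size:
        batch.append(queue.popleft())
    return batch


def process_in_batches(queue, batch_func, batch_size):
    """Call batch_func once per batch instead of once per task; return the call count."""
    calls = 0
    while queue:
        batch_func(take_batch(queue, batch_size))
        calls += 1
    return calls
```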

Scheduled and periodic tasks

Tasks can be scheduled for execution at a specific time. Tasks can also be executed periodically (e.g. every five seconds).

Structured logging

TaskTiger supports JSON-style logging via structlog, allowing more flexibility for tools to analyze the log. For example, you can use TaskTiger together with Logstash, Elasticsearch, and Kibana.

The structlog processor tasktiger.logging.tasktiger_processor can be used to inject the current task id into all log messages.

Reliability

TaskTiger atomically moves tasks between queue states, and will re-execute tasks after a timeout if a worker crashes.

Error handling

If an exception occurs during task execution and the task is not set up to be retried, TaskTiger stores the execution tracebacks in an error queue. The task can then be retried or deleted manually. TaskTiger can be easily integrated with error reporting services like Rollbar.

Admin interface

A simple admin interface using flask-admin exists as a separate project ( tasktiger-admin ).

Quick start

It is easy to get started with TaskTiger.

Create a file that contains the task(s).

Queue the task using the delay method.

Run a worker (make sure the task code can be found, e.g. using PYTHONPATH ).

A TaskTiger object keeps track of TaskTiger's settings and is used to decorate and queue tasks. The constructor takes the following arguments:

Redis connection object. The connection should be initialized with decode_responses=True to avoid encoding problems on Python 3.

Dict with config options. Most configuration options don't need to be changed, and a full list can be seen within TaskTiger 's __init__ method.

Here are a few commonly used options:

ALWAYS_EAGER

If set to True, all tasks except future tasks (tasks whose when is a future time) are executed locally, blocking until the task returns. This is useful for testing purposes.

BATCH_QUEUES

Set up queues that will be processed in batch, i.e. multiple jobs are taken out of the queue at the same time and passed as a list to the worker method. Takes a dict where the key represents the queue name and the value represents the batch size. Note that the task needs to be declared as batch=True. Also note that any subqueues will be automatically treated as batch queues, and the batch value of the most specific subqueue name takes precedence.
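The subqueue precedence rule works like a lookup for the most specific prefix of a dot-separated queue name. The helper below is hypothetical and only illustrates that rule. It is not part of TaskTiger's API, and the example queue names are made up.

```python
def batch_size_for(queue, batch_queues):
    """Return the batch size for `queue`, or None if it is not a batch queue.

    `batch_queues` mirrors a BATCH_QUEUES dict (queue name -> batch size).
    Subqueues inherit the setting of their parents, and the most specific
    (longest) configured name wins. Hypothetical helper, not TaskTiger's API.
    """
    parts = queue.split(".")
    # Try "a.b.c", then "a.b", then "a".
    for i in range(len(parts), 0, -1):
        candidate = ".".join(parts[:i])
        if candidate in batch_queues:
            return batch_queues[candidate]
    return None
```

Take the config {"emails": 50, "emails.CUSTOMER1": 10}. Here emails.CUSTOMER2 falls back to 50, while emails.CUSTOMER1 and its own subqueues use 10. Splitting on dots means a queue like emails_archive is not mistaken for a subqueue of emails.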

ONLY_QUEUES

If set to a non-empty list of queue names, a worker only processes the given queues (and their subqueues), unless explicit queues are passed to the command line.

setup_structlog

If set to True, sets up structured logging using structlog when initializing TaskTiger. This makes writing custom worker scripts easier since it doesn't require the user to set up structlog in advance.

TaskTiger provides a task decorator to specify task options. Note that simple tasks don't need to be decorated. However, decorating the task allows you to use an alternative syntax to queue the task, which is compatible with Celery:

Tasks support a variety of options that can be specified either in the task decorator, or when queueing a task. For the latter, the delay method must be called on the TaskTiger object, and any options in the task decorator are overridden.

When queueing a task, the task needs to be defined in a module other than the Python file which is being executed. In other words, the task can't be in the __main__ module. TaskTiger will give you back an error otherwise.

The following options are supported by both delay and the task decorator:

Name of the queue where the task will be queued.

hard_timeout

If the task runs longer than the given number of seconds, it will be killed and marked as failed.

Boolean to indicate whether the task will only be queued if there is no similar task with the same function, arguments, and keyword arguments in the queue. Note that multiple similar tasks may still be executed at the same time since the task will still be inserted into the queue if another one is being processed. Requeueing an already scheduled unique task will not change the time it was originally scheduled to execute at.

If set, this implies unique=True and specifies the list of kwargs to use to construct the unique key. By default, all args and kwargs are serialized and hashed.
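To make "serialized and hashed" concrete, here is a minimal sketch of how such a key could be derived. It makes three assumptions: JSON serialization, SHA-256 hashing, and that positional args are ignored when an explicit list of kwargs is given. TaskTiger's actual serialization and key format may differ, and `make_unique_key` is a hypothetical function, not the library's.

```python
import hashlib
import json


def make_unique_key(func_name, args=(), kwargs=None, key_fields=None):
    """Return a stable hex digest identifying a task call.

    Without `key_fields`, the function name, all args, and all kwargs are hashed.
    With `key_fields` (analogous to the unique_key option), only those kwargs
    are hashed; positional args are ignored in this sketch.
    """
    kwargs = kwargs or {}
    if key_fields is not None:
        payload = {"func": func_name, "kwargs": {k: kwargs.get(k) for k in key_fields}}
    else:
        payload = {"func": func_name, "args": list(args), "kwargs": kwargs}
    # sort_keys=True makes the digest independent of kwarg order.
    serialized = json.dumps(payload, sort_keys=True)
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()
```

Two calls with the same function and arguments produce the same key, so a second unique enqueue can be recognized as a duplicate. With key_fields=["user_id"], calls that differ only in other kwargs also collapse to one key.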

Boolean to indicate whether to hold a lock while the task is being executed (for the given args and kwargs). If a task with similar args/kwargs is queued and tries to acquire the lock, it will be retried later.

If set, this implies lock=True and specifies the list of kwargs to use to construct the lock key. By default, all args and kwargs are serialized and hashed.

max_queue_size

A maximum queue size can be enforced by setting this to an integer value. The QueueFullException exception will be raised when queueing a task if this limit is reached. Tasks in the active, scheduled, and queued states are counted against this limit.

Takes either a datetime (for an absolute date) or a timedelta (relative to now). If given, the task will be scheduled for the given time.

Boolean to indicate whether to retry the task when it fails (either because of an exception or because of a timeout). To restrict the list of failures, use retry_on. Unless retry_method is given, the configured DEFAULT_RETRY_METHOD is used.

If a list is given, it implies retry=True. The task will only be retried on the given exceptions (or their subclasses). To retry the task when a hard timeout occurs, use JobTimeoutException.

retry_method

If given, implies retry=True. Pass either:

- a function that takes the retry number as an argument, or

- a tuple (f, args), where f takes the retry number as the first argument, followed by the additional args.

The function needs to return the desired retry interval in seconds, or raise StopRetry to stop retrying. The following built-in functions can be passed for common scenarios and return the appropriate tuple:

fixed(delay, max_retries)

Returns a method that returns the given delay (in seconds) or raises StopRetry if the number of retries exceeds max_retries.

linear(delay, increment, max_retries)

Like fixed, but starts off with the given delay and increments it by the given increment after every retry.

exponential(delay, factor, max_retries)

Like fixed, but starts off with the given delay and multiplies it by the given factor after every retry.

For example, to retry a task 3 times (for a total of 4 executions), and wait 60 seconds between executions, pass retry_method=fixed(60, 3).
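The three built-in schedules can be compared side by side with a standalone re-implementation of the behavior described above. This is a sketch, not TaskTiger's code. It assumes the retry number passed in is 1 for the first retry. The functions are named fixed_delay, linear_delay and exponential_delay to make clear they are not the library's own. `StopRetry` is a local stand-in, and `retry_waits` is a hypothetical helper that expands an (f, args) tuple into the waits it produces.

```python
class StopRetry(Exception):
    """Local stand-in for TaskTiger's StopRetry exception."""


def fixed_delay(retry, delay, max_retries):
    """Same wait every time; stop after max_retries retries."""
    if retry > max_retries:
        raise StopRetry()
    return delay


def linear_delay(retry, delay, increment, max_retries):
    """Wait `delay`, then `delay + increment`, then `delay + 2 * increment`, ..."""
    if retry > max_retries:
        raise StopRetry()
    return delay + increment * (retry - 1)


def exponential_delay(retry, delay, factor, max_retries):
    """Wait `delay`, then `delay * factor`, then `delay * factor ** 2`, ..."""
    if retry > max_retries:
        raise StopRetry()
    return delay * factor ** (retry - 1)


def retry_waits(retry_method):
    """Expand an (f, args) tuple into the list of waits, in seconds, until StopRetry."""
    f, args = retry_method
    waits = []
    retry = 1  # assumption: the first retry is numbered 1
    while True:
        try:
            waits.append(f(retry, *args))
        except StopRetry:
            return waits
        retry += 1


print(retry_waits((fixed_delay, (60, 3))))  # [60, 60, 60]
print(retry_waits((exponential_delay, (1, 2, 5))))  # [1, 2, 4, 8, 16]
```

Under these assumptions, fixed(60, 3) yields three 60-second waits. That is three retries and four executions in total, which matches the example above.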

runner_class

If given, a Python class can be specified to influence task running behavior. The runner class should inherit tasktiger.runner.BaseRunner and implement the task execution behavior. The default implementation is available in tasktiger.runner.DefaultRunner. The following behavior can be achieved:

Execute specific code before or after the task is executed (in the forked child process), or customize the way task functions are called in either single or batch processing.

Note that if you want to execute specific code for all tasks, you should use the CHILD_CONTEXT_MANAGERS configuration option.

Control the hard timeout behavior of a task.

Execute specific code in the main worker process after a task failed permanently.

This is an advanced feature and the interface and requirements of the runner class can change in future TaskTiger versions.

The following options can be only specified in the task decorator:

If set to True, the task will receive a list of dicts with args and kwargs and can process multiple tasks of the same type at once, for example: [{"args": [1], "kwargs": {}}, {"args": [2], "kwargs": {}}]. Note that the list will only contain multiple items if the worker has set up BATCH_QUEUES for the specific queue (see the Configuration section).
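A batch task typically just loops over that list. The function below is a hypothetical example: the name `send_emails`, the positional user id and the `priority` kwarg are all made up. It has no TaskTiger imports, so it can be called directly. In a real project it would also be declared with batch=True in the task decorator.

```python
def send_emails(params):
    """Process a batch of queued calls in one go.

    `params` is a list of dicts, each holding the "args" and "kwargs" of one
    queued call, e.g. [{"args": [1], "kwargs": {}}, {"args": [2], "kwargs": {}}].
    Hypothetical task: it collects (user_id, priority) pairs so that one bulk
    request could replace one request per queued task.
    """
    recipients = []
    for call in params:
        user_id = call["args"][0]
        priority = call["kwargs"].get("priority", "normal")
        recipients.append((user_id, priority))
    # A real task would hand `recipients` to a bulk API here.
    return recipients
```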

If given, makes a task execute periodically. Pass either:

- a function that takes the current datetime as an argument.

- a tuple (f, args), where f takes the current datetime as the first argument, followed by the additional args.

The schedule function must return the next task execution datetime, or None to prevent periodic execution. The function is executed to determine the initial task execution date when a worker is initialized, and to determine the next execution date when the task is about to get executed.
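Here is a hypothetical custom schedule function that illustrates the (f, args) form. It is not a TaskTiger built-in. It receives the current datetime, followed by an hour and an optional end date. It returns the next Monday-to-Friday run at that hour, or None once the end date has passed, which stops periodic execution. Naive datetimes are assumed to be UTC.

```python
import datetime


def next_weekday_at(now, hour, end=None):
    """Return the next Monday-Friday datetime at `hour`:00 strictly after `now`.

    Returns None if that time falls after `end`, which stops the periodic task.
    """
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    if candidate <= now:
        candidate += datetime.timedelta(days=1)
    # weekday(): Monday == 0 ... Saturday == 5, Sunday == 6
    while candidate.weekday() >= 5:
        candidate += datetime.timedelta(days=1)
    if end is not None and candidate > end:
        return None
    return candidate


# Tuple form: (function, extra args that follow the current datetime).
schedule = (next_weekday_at, (9,))
```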

For most common scenarios, the following built-in functions can be passed:

periodic(seconds=0, minutes=0, hours=0, days=0, weeks=0, start_date=None, end_date=None)

Use equal, periodic intervals, starting from start_date (defaults to 2000-01-01T00:00Z, a Saturday, if not given) and ending at end_date (or never, if not given). For example, to run a task every five minutes indefinitely, use schedule=periodic(minutes=5). To run a task every Sunday at 4am UTC, you could use schedule=periodic(weeks=1, start_date=datetime.datetime(2000, 1, 2, 4)).
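The interval arithmetic behind periodic can be sketched as finding the first start_date + k * interval that lies after the current time. This is an illustrative re-implementation under that assumption, not TaskTiger's source. `next_periodic_run` is a hypothetical name, and naive datetimes stand in for UTC.

```python
import datetime

DEFAULT_START = datetime.datetime(2000, 1, 1)  # a Saturday, as in the text


def next_periodic_run(now, interval, start_date=DEFAULT_START, end_date=None):
    """Return the first start_date + k * interval (k >= 0) strictly after `now`.

    Returns None if that time would fall after `end_date`.
    """
    if now < start_date:
        candidate = start_date
    else:
        # timedelta // timedelta is the number of whole intervals elapsed.
        elapsed_intervals = (now - start_date) // interval
        candidate = start_date + (elapsed_intervals + 1) * interval
    if end_date is not None and candidate > end_date:
        return None
    return candidate
```

With start_date=datetime.datetime(2000, 1, 2, 4) (a Sunday at 4am) and a one-week interval, every candidate lands on a Sunday at 4am. This is why the example above picks that start date.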

cron_expr(expr, start_date=None, end_date=None)

Use a cron expression (expr) to determine when the task runs. start_date specifies the periodic task start date; it defaults to 2000-01-01T00:00Z, a Saturday, if not given. end_date specifies the periodic task end date; the task repeats forever if end_date is not given. For example, to run a task every hour indefinitely, use schedule=cron_expr("0 * * * *"). To run a task every Sunday at 4am UTC, you could use schedule=cron_expr("0 4 * * 0").

In some cases the task retry options may not be flexible enough. For example, you might want to use a different retry method depending on the exception type, or you might want to suppress logging an error if a task fails after retries. In these cases, RetryException can be raised within the task function. The following options are supported:

Specify a custom retry method for this retry. If not given, the task's default retry method is used, or, if unspecified, the configured DEFAULT_RETRY_METHOD . Note that the number of retries passed to the retry method is always the total number of times this method has been executed, regardless of which retry method was used.

original_traceback

If RetryException is raised from within an except block and original_traceback is True, the original traceback will be logged (i.e. the stacktrace at the place where the caught exception was raised). False by default.

If set to False and the task fails permanently, a warning will be logged instead of an error, and the task will be removed from Redis when it completes. True by default.

Example usage:

The tasktiger command is used on the command line to invoke a worker. To invoke multiple workers, multiple instances need to be started. This can be easily done e.g. via Supervisor. The following Supervisor configuration file can be placed in /etc/supervisor/tasktiger.ini and runs 4 TaskTiger workers as the ubuntu user. For more information, read Supervisor's documentation.

Workers support the following options:

-q , --queues

If specified, only the given queue(s) are processed. Multiple queues can be separated by commas. Any subqueues of the given queues will also be processed. For example, -q first,second will process items from first, second, and subqueues such as first.CUSTOMER1 and first.CUSTOMER2.

-e , --exclude-queues

If specified, exclude the given queue(s) from processing. Multiple queues can be separated by commas. Any subqueues of the given queues will also be excluded unless a more specific queue is specified with the -q option. For example, -q email,email.incoming.CUSTOMER1 -e email.incoming will process items from the email queue and subqueues like email.outgoing.CUSTOMER1 or email.incoming.CUSTOMER1, but not email.incoming or email.incoming.CUSTOMER2.
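One way to express the precedence rule from this example is to compare the longest matches in the -q and -e lists. The helper below is hypothetical and reproduces the documented example, but it is not TaskTiger's worker code. Resolving a tie (the same name in both lists) in favor of exclusion is an assumption.

```python
def matches(queue, name):
    """True if `queue` is `name` itself or one of its dot-separated subqueues."""
    return queue == name or queue.startswith(name + ".")


def should_process(queue, include=(), exclude=()):
    """Decide whether a worker started with -q `include` and -e `exclude` handles `queue`.

    The most specific (longest) matching name wins. With an empty include
    list, every queue that is not excluded is processed.
    """
    best_include = max((n for n in include if matches(queue, n)), key=len, default=None)
    best_exclude = max((n for n in exclude if matches(queue, n)), key=len, default=None)
    if best_exclude is not None and (best_include is None or len(best_exclude) >= len(best_include)):
        return False
    return best_include is not None or not include
```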

-m , --module

Module(s) to import when launching the worker. This improves task performance since the module doesn't have to be reimported every time a task is forked. Multiple modules can be separated by commas.

Another way to preload modules is to set up a custom TaskTiger launch script, which is described below.

-h , --host

Redis server hostname (if different from localhost).

-p , --port

Redis server port (if different from 6379).

-a , --password

Redis server password (if required).

Redis server database number (if different from 0).

-M , --max-workers-per-queue

Maximum number of workers that are allowed to process a queue.

--store-tracebacks/--no-store-tracebacks

Store tracebacks with execution history (config defaults to True).

Can be fork (default) or sync: whether to execute tasks in a separate process via fork, or execute them synchronously in the same process. See the "Features" section for the benefits of either approach.

--exit-after

Exit the worker after the time in minutes has elapsed. This is mainly useful with the synchronous executor to prevent memory leaks from accumulating.

In some cases it is convenient to have a custom TaskTiger launch script. For example, your application may have a manage.py command that sets up the environment and you may want to launch TaskTiger workers using that script. To do that, you can use the run_worker_with_args method, which launches a TaskTiger worker and parses any command line arguments. Here is an example:

TaskTiger provides access to the Task class which lets you inspect queues and perform various actions on tasks.

Each queue can have tasks in the following states:

- queued: Tasks that are queued and waiting to be picked up by the workers.

- active: Tasks that are currently being processed by the workers.

- scheduled: Tasks that are scheduled for later execution.

- error: Tasks that failed with an error.

To get a list of all tasks for a given queue and state, use Task.tasks_from_queue. The method returns a tuple containing the total number of tasks in the queue (useful if the tasks are truncated) and a list of tasks in the queue, latest first. Using the skip and limit keyword arguments, you can fetch arbitrary slices of the queue. If you know the task ID, you can fetch a given task using Task.from_id. Both methods let you load tracebacks from failed task executions using the load_executions keyword argument, which accepts an integer indicating how many executions should be loaded.

Tasks can also be constructed and queued using the regular constructor, which takes the TaskTiger instance, the function name and the options described in the Task options section. The task can then be queued using its delay method. Note that the when argument needs to be passed to the delay method, if applicable. Unique tasks can be reconstructed using the same arguments.

The Task object has the following properties:

- id: The task ID.

- data: The raw data as a dict from Redis.

- executions: A list of failed task executions (as dicts). An execution dict contains the processing time in time_started and time_failed, the worker host in host, the exception name in exception_name and the full traceback in traceback.

- serialized_func, args, kwargs: The serialized function name with all of its arguments.

- func: The imported (executable) function.

The Task object has the following methods:

- cancel: Cancel a scheduled task.

- delay: Queue the task for execution.

- delete: Remove the task from the error queue.

- execute: Run the task without queueing it.

- n_executions: Queries and returns the number of past task executions.

- retry: Requeue the task from the error queue for execution.

- update_scheduled_time: Updates a scheduled task's date to the given date.

The current task can be accessed within the task function while it's being executed. For a non-batch task, the current_task property of the TaskTiger instance returns the current Task instance. For a batch task, the current_tasks property must be used instead. It returns a list of the tasks currently being processed, in the same order as they were passed to the task.

Example 1: Queueing a unique task and canceling it without a reference to the original task.

Example 2: Inspecting queues and retrying a task by ID.

Example 3: Accessing the task instances within a batch task function to determine how many times the currently processing tasks were previously executed.

The --max-workers-per-queue option uses queue locks to control the number of workers that can simultaneously process the same queue. When using this option a system lock can be placed on a queue which will keep workers from processing tasks from that queue until it expires. Use the set_queue_system_lock() method of the TaskTiger object to set this lock.

TaskTiger comes with Rollbar integration for error handling. When a task errors out, it can be logged to Rollbar, grouped by queue, task function name and exception type. To enable logging, initialize rollbar with the StructlogRollbarHandler provided in the tasktiger.rollbar module. The handler takes a string as an argument which is used to prefix all the messages reported to Rollbar. Here is a custom worker launch script:

Errored tasks occasionally need to be purged from Redis, so TaskTiger exposes a purge_errored_tasks method to help. It might be useful to set this up as a periodic task as follows:

Tests can be run locally using the provided Docker Compose file. After installing Docker, tests should be runnable with:

Tests can be more granularly run using normal pytest flags. For example:

- Make sure the code has been thoroughly reviewed and tested in a realistic production environment.

- Update setup.py and CHANGELOG.md. Make sure you include any breaking changes.

- Run python setup.py sdist and twine upload dist/<PACKAGE_TO_UPLOAD>.

- Push a new tag pointing to the released commit, in the format v0.13, for example.

- Mark the tag as a release in GitHub's UI and include in the description the changelog entry for the version. An example would be: https://github.com/closeio/tasktiger/releases/tag/v0.13


How to Run Your First Task with RQ, Redis, and Python


As a developer, it can be very useful to learn how to run functions in the background while being able to monitor the queue in another tab or different system. This is incredibly helpful when managing heavy workloads that might not work efficiently when called all at once, or when making large numbers of calls to a database that returns data slowly over time rather than all at once.

In this tutorial we will implement an RQ queue in Python with the help of Redis to schedule and execute tasks in a timely manner.

Tutorial Requirements

- Python 3.6 or newer. If your operating system does not provide a Python interpreter, you can go to python.org to download an installer.

Let’s talk about task queues

Task queues are a great way to allow tasks to work asynchronously outside of the main application flow. There are many task queues in Python to assist you in your project; however, today we'll be discussing a solution known as RQ.

RQ, also known as Redis Queue, is a Python library that allows developers to enqueue jobs to be processed in the background by workers. The RQ workers pick up jobs from the queue and execute them in the background. Because it only needs a connection to Redis, the library is lightweight and easy to get started with for first-time users.

By using this particular task queue, it is possible to process jobs in the background with little to no hassle.

Set up the environment

Create a project directory in your terminal called “rq-test” to follow along.

Create a virtual environment and install rq and related packages. If you are using a Unix or macOS system, enter the following commands:

If you are on a Windows machine, enter the following commands in a prompt window:

RQ requires Redis to be installed on your machine, which can be done with the following wget commands. Redis was on version 6.0.6 at the time this article was published.

If you are using a Unix or MacOS system, enter these commands to install Redis. This is my personal favorite way to install Redis, but there are alternatives below:

If you have Homebrew installed, you can type brew install redis in the terminal and refer to this GitHub gist to install Redis on the Mac. For developers using Ubuntu Linux, the command sudo apt-get install redis would get the job done as well.

Run the Redis server in a separate terminal window on the default port with the command src/redis-server from the directory where it's installed.

For Windows users, you would have to follow a separate tutorial to run Redis on Windows. Download the latest zip file on GitHub and extract the contents. Run the redis-server.exe file that was extracted from the zip file to start the Redis server.

The output should look similar to the following after running Redis:

Build out the tasks

In this case, a task for Redis Queue is merely a Python function. For this article, we'll tell the task to print a message to the terminal for x seconds to demonstrate the use of RQ.

Copy and paste the following code to a file named “tasks.py” in your directory.

These are simple tasks that print numbers and text to the terminal so that we can see whether the tasks execute properly. Calling time.sleep(1) from Python's time library suspends the task for one second on each iteration. This stretches out the task so that we can examine its progress.
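The tutorial's tasks.py is not reproduced here, so the following is a minimal sketch consistent with the description. It defines two functions, print_task and print_numbers, each looping for the given number of seconds and calling time.sleep(1) per iteration. The exact messages are assumptions.

```python
import time


def print_task(seconds):
    """Print a numbered "Hello World!" line once per second for `seconds` seconds."""
    print("Starting task")
    for num in range(seconds):
        print(f"{num}. Hello World!")
        time.sleep(1)  # pause so progress is visible in the worker output
    print("Task completed")


def print_numbers(seconds):
    """Print the numbers 0 .. seconds - 1, one per second."""
    print("Starting num task")
    for num in range(seconds):
        print(num)
        time.sleep(1)
    print("Task to print_numbers completed")
```

RQ workers import enqueued functions by their module path. Keep these functions in an importable module such as tasks.py, rather than in the script that enqueues them.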

Feel free to alter this code after the tutorial and create your own tasks. Some other popular tasks are sending a fax message or email by connecting to your email client.

Create your queue

Create another file in the root directory and name it “app.py”. Copy and paste the following code:

The queue object sets up a connection to Redis and initializes a queue based on that connection. This queue can hold all the jobs required to run in the background with workers.

As seen in the code, the tasks.print_task function is added using the enqueue function. This means that the task is added to the queue right away and will be executed as soon as a worker picks it up.

The enqueue_in function is another nifty RQ function: it takes a timedelta and schedules the specified job to run after that delay. In this case, seconds is specified, but this value can be changed to suit the schedule you need. Check out other ways to schedule a job on this GitHub README.

Since we are testing out the RQ queue, I have enqueued both the tasks.print_task and tasks.print_numbers functions so that we can see their output on the terminal. The 5 passed at the end of each call is forwarded as the argument to the respective function. In this case, we expect print_task() to print "Hello World!" five times and print_numbers() to print 5 numbers in order.
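RQ needs a running Redis server, so the timing difference between enqueue and enqueue_in is easier to see in a self-contained toy. `ToyQueue` below is a hypothetical, in-process model that only mimics the ordering described above (run now versus run after a delay). It is not RQ's API, and it simulates the clock instead of sleeping.

```python
import heapq
import itertools
from datetime import datetime, timedelta


class ToyQueue:
    """Jobs become runnable at a given time; work() runs them in time order."""

    def __init__(self, now):
        self.now = now
        self._heap = []  # entries: (run_at, sequence, func, args)
        self._seq = itertools.count()  # tie-breaker keeps FIFO order for equal times

    def enqueue(self, func, *args):
        # Runnable immediately, like queue.enqueue(...)
        self._push(self.now, func, args)

    def enqueue_in(self, delay, func, *args):
        # Runnable after `delay`, like queue.enqueue_in(timedelta(...), ...)
        self._push(self.now + delay, func, args)

    def _push(self, run_at, func, args):
        heapq.heappush(self._heap, (run_at, next(self._seq), func, args))

    def work(self):
        """Run every job in time order, advancing the simulated clock; return (time, result) pairs."""
        results = []
        while self._heap:
            run_at, _, func, args = heapq.heappop(self._heap)
            self.now = max(self.now, run_at)
            results.append((self.now, func(*args)))
        return results


# Mirror app.py's two calls with stand-in functions.
queue = ToyQueue(datetime(2024, 1, 1, 12, 0, 0))
queue.enqueue_in(timedelta(seconds=10), lambda n: f"print_numbers({n})", 5)
queue.enqueue(lambda n: f"print_task({n})", 5)
for ran_at, result in queue.work():
    print(ran_at.time(), result)
# 12:00:00 print_task(5)
# 12:00:10 print_numbers(5)
```

Enqueuing the delayed job first does not change the outcome. The immediate job still runs first, and the delayed one runs once the simulated clock reaches its start time.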

If you have created any additional tasks, be sure to import them at the top of the file so that all the tasks in your Python file can be accessed.

Run the queue

For the purposes of this article, the gif demo below will show a perfect execution of the tasks in queue so no exceptions will be raised.

The Redis server should still be running in a tab from earlier in the tutorial at this point. If it stopped, run the command src/redis-server inside the redis-6.0.6 folder in one tab or, for developers with a Windows machine, start redis-server.exe. Open another tab solely to run an RQ worker with its built-in scheduler, using the command rq worker --with-scheduler.

This should be the output after running the command above.

The worker command started a worker process that connects to Redis and looks for any jobs assigned to the queue from the code in app.py.

Lastly, open a third tab in the terminal for the root project directory. Activate the virtual environment again with the command source venv/bin/activate. Then type python app.py to run the project.

Go back to the tab that is running rq worker --with-scheduler . Wait 5 more seconds after the first task is executed to see the next task. Although the live demo gif below wasn’t able to capture the best timing due to having to run the program and record, it is noticeable that there was a pause between tasks until execution and that both tasks were completed within 15 seconds.

Here’s the sample output inside of the rqworker tab:

As seen in the output above, if the tasks written in tasks.py returned a value, the results of both tasks would be kept for 500 seconds, which is the default. A developer can change how long results are kept by passing a result_ttl parameter when adding tasks to the queue.

Handle exceptions and try again

If a job fails, you can always set up a log to keep track of the error messages, or you can use RQ to requeue and retry failed jobs. RQ's FailedJobRegistry keeps track of the jobs that failed during runtime. The RQ documentation discusses how it handles exceptions and how data about the job can help the developer figure out how to resubmit it.

However, RQ also lets developers handle exceptions in their own way by injecting custom exception-handling logic into the RQ workers. This may be a helpful option if you are executing many tasks in your project and the ones that fail are not worth retrying.

Force a failed task to retry

Since this is an introductory article to run your first task with RQ, let's try to purposely fail one of the tasks from earlier to test out RQ's retry object.

Go to the tasks.py file and alter the print_task() function so that it generates a random number that determines whether the function succeeds. We will use Python's random library to generate the numbers. Don't forget to add import random at the top of the file.

Copy and paste the following lines of code to change the print_task() function in the tasks.py file.
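The replacement code is not reproduced here. The following is a minimal sketch of a print_task that succeeds or fails at random as described, assuming random.randint(1, 2) decides the outcome. The error message is an assumption.

```python
import random
import time


def print_task(seconds):
    """Succeed or fail at random: 1 prints the greeting, 2 raises RuntimeError."""
    print("Starting task")
    num = random.randint(1, 2)  # 1 or 2, each with probability 1/2
    if num == 1:
        for i in range(seconds):
            print(f"{i}. Hello World!")
            time.sleep(1)
        print("Task completed")
    else:
        # An uncaught exception marks the RQ job as failed; with retry=Retry(...) it is requeued.
        raise RuntimeError("Sorry, I failed! Let me try again.")
```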

Go back to the app.py file to change the queue. Delete the line that enqueues the tasks.print_task function and replace it with queue.enqueue(tasks.print_task, 5, retry=Retry(max=2)).

The Retry object is provided by rq, so make sure you add from rq import Retry at the top of the file as well in order to use this functionality. This object accepts max and interval arguments. max sets how many times a failed job is retried, and interval sets how long to wait between retries. The new line passes enqueue three things: the function we want to run (tasks.print_task), the argument 5 (the seconds of execution), and retry=Retry(max=2), the maximum number of times we want the queue to retry the job.

The tasks in queue should now look like this:

When running the print_task task, there is a 50/50 chance that tasks.print_task() will execute properly. We're only generating a 1 or a 2, and the print statement only happens if a 1 is generated. Otherwise, a RuntimeError is raised. Because no interval was given, the job is retried immediately, up to two more times. If all three attempts fail, the job is marked as failed and moved to the FailedJobRegistry.
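The retry budget can be checked without Redis by simulating it. `run_with_retries` and `flaky` are hypothetical helpers, not RQ functions. The first mimics one initial attempt plus up to `max_retries` immediate retries. The second builds a task whose successes and failures are fixed in advance, so the outcome is deterministic.

```python
def run_with_retries(func, max_retries, *args):
    """Call func(*args); on an exception, retry immediately up to `max_retries` more times.

    Returns (result, attempts). Re-raises the last exception if every attempt fails.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return func(*args), attempts
        except Exception:
            if attempts > max_retries:
                raise


def flaky(outcomes):
    """Build a task that succeeds (True) or fails (False) according to `outcomes`, in order."""
    it = iter(outcomes)

    def task(seconds):
        if next(it):
            return f"Hello World! x{seconds}"
        raise RuntimeError("Sorry, I failed! Let me try again.")

    return task
```

With Retry(max=2), the job gets three attempts in total. If each attempt independently succeeds half the time, all three fail with probability 0.5³ = 0.125. Roughly one run in eight should therefore end up in the FailedJobRegistry.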

What’s next for task queues?

Congratulations! You have successfully learned and implemented the basics of scheduling tasks in the RQ queue. Perhaps now you can tell the worker command to add a task that prints out an infinite number of "Congratulations" messages in a timely manner!

Otherwise, check out these different tasks that you can build into your Redis Queue:

- Schedule Twilio SMS to a list of contacts quickly!

- Use Redis Queue to generate a fan fiction with OpenAI GPT-3

- Queue Emails with Twilio SendGrid using Redis Queue

Let me know what you have been building by reaching out to me over email!

Diane Phan is a developer on the Developer Voices team. She loves to help programmers tackle difficult challenges that might prevent them from bringing their projects to life. She can be reached at dphan [at] twilio.com or LinkedIn .


Celery is a task queue implementation for Python web applications used to asynchronously execute work outside the HTTP request-response cycle.

Why is Celery useful?

Task queues and the Celery implementation in particular are one of the trickier parts of a Python web application stack to understand.

If you are a junior developer it can be unclear why moving work outside the HTTP request-response cycle is important. In short, you want your WSGI server to respond to incoming requests as quickly as possible because each request ties up a worker process until the response is finished. Moving work off those workers by spinning up asynchronous jobs as tasks in a queue is a straightforward way to improve WSGI server response times.

What's the difference between Celeryd and Celerybeat?

Celery can be used to run batch jobs in the background on a regular schedule. A key concept in Celery is the difference between the Celery daemon (celeryd), which executes tasks, and Celerybeat, which is a scheduler. Think of Celeryd as a tunnel-vision set of one or more workers that handle whatever tasks you put in front of them. Each worker will perform a task and, when the task is completed, will pick up the next one. The cycle repeats continuously, only waiting idly when there are no more tasks to put in front of them.

Celerybeat on the other hand is like a boss who keeps track of when tasks should be executed. Your application can tell Celerybeat to execute a task at time intervals, such as every 5 seconds or once a week. Celerybeat can also be instructed to run tasks on a specific date or time, such as 5:03pm every Sunday. When the interval or specific time is hit, Celerybeat will hand the job over to Celeryd to execute on the next available worker.

Celery tutorials and advice

Celery is a powerful tool that can be difficult to wrap your mind around at first. Be sure to read up on task queue concepts then dive into these specific Celery tutorials.

A 4 Minute Intro to Celery is a short introductory task queue screencast.

This blog post series on Celery's architecture, Celery in the wild: tips and tricks to run async tasks in the real world, and dealing with resource-consuming tasks on Celery provide great context for how Celery works and how to handle some of the trickier bits of working with the task queue.

How to use Celery with RabbitMQ is a detailed walkthrough for using these tools on an Ubuntu VPS.

Celery - Best Practices explains things you should not do with Celery and shows some underused features for making task queues easier to work with.

Celery Best Practices is a different author's follow up to the above best practices post that builds upon some of his own learnings from 3+ years using Celery.

Common Issues Using Celery (And Other Task Queues) contains good advice about mistakes to avoid in your task configurations, such as database transaction usage and retrying failed tasks.

Asynchronous Processing in Web Applications Part One and Part Two are great reads for understanding what a task queue is and why you shouldn't use your database as one.

My Experiences With A Long-Running Celery-Based Microprocess gives some good tips and advice based on experience with Celery workers that take a long time to complete their jobs.

Checklist to build great Celery async tasks is a site specifically designed to give you a list of good practices to follow as you design your task queue configuration and deploy to development, staging and production environments.

Heroku wrote about how to secure Celery when tasks are otherwise sent over unencrypted networks.

Unit testing Celery tasks explains three strategies for testing code within functions that Celery executes. The post concludes that calling Celery tasks synchronously to test them is the best strategy without any downsides. However, keep in mind that any testing method that is not the same as how the function will execute in a production environment can potentially lead to overlooked bugs. There is also an open source Git repository with all of the source code from the post.

Rollbar monitoring of Celery in a Django app explains how to use Rollbar to monitor tasks. Super useful when workers invariably die for no apparent reason.

3 Gotchas for Working with Celery are things to keep in mind when you're new to the Celery task queue implementation.

Dask and Celery compares Dask.distributed with Celery for Python projects. The post gives code examples to show how to execute tasks with either task queue.

Python+Celery: Chaining jobs? explains that dependencies between Celery tasks should be expressed with Celery chains rather than with direct dependencies between the tasks themselves.

Celery with web frameworks

Celery is typically used with a web framework such as Django, Flask or Pyramid. These resources show you how to integrate the Celery task queue with the web framework of your choice.

How to Use Celery and RabbitMQ with Django is a great tutorial that shows how to both install and set up a basic task with Django.

Miguel Grinberg wrote a nice post on using the task queue Celery with Flask . He gives an overview of Celery followed by specific code to set up the task queue and integrate it with Flask.

Setting up an asynchronous task queue for Django using Celery and Redis is a straightforward tutorial for setting up the Celery task queue for Django web applications using the Redis broker on the back end.

A Guide to Sending Scheduled Reports Via Email Using Django And Celery shows you how to use django-celery in your application. Note, however, that there are other ways of integrating Celery with Django that do not require the django-celery dependency.

Flask asynchronous background tasks with Celery and Redis combines Celery with Redis as the broker and Flask for the example application's framework.

Celery and Django and Docker: Oh My! shows how to create Celery tasks for Django within a Docker container. It also provides some

Asynchronous Tasks With Django and Celery shows how to integrate Celery with Django and create Periodic Tasks.

Getting Started Scheduling Tasks with Celery is a detailed walkthrough for setting up Celery with Django (although Celery can also be used without a problem with other frameworks).

Asynchronous Tasks with Falcon and Celery configures Celery with the Falcon framework, which is less commonly used in web tutorials.

Custom Celery task states is an advanced post on creating custom states, which is especially useful for transient states in your application that are not covered by the default Celery configuration.

Asynchronous Tasks with Django and Celery looks at how to configure Celery to handle long-running tasks in a Django app.

Celery deployment resources

Celery and its broker run separately from your web and WSGI servers, so they add complexity to your deployments. The following resources walk you through how to handle deployments and get the right configuration settings in place.

- How to run celery as a daemon? is a short post with the minimal code for running the Celery daemon and Celerybeat as system services on Linux.


Full stack python.


Super Fast Python


Queue task_done() and join() in Python

April 14, 2022 by Jason Brownlee in Python Threading

Last Updated on September 12, 2022

You can mark queue tasks done via task_done() and be notified when all tasks are done via join() .

In this tutorial you will discover how to use queue task done and join in Python .

Let’s get started.


Need To Know When All Tasks are Done

A thread is a thread of execution in a computer program.

Every Python program has at least one thread of execution called the main thread. Both processes and threads are created and managed by the underlying operating system.

Sometimes we may need to create additional threads in our program in order to execute code concurrently.

Python provides the ability to create and manage new threads via the threading module and the threading.Thread class.
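As a minimal sketch of that API (the `greet` function, the `messages` list and the thread name `Worker` are hypothetical, chosen only for illustration), the following creates one new thread with `threading.Thread`, starts it, and waits for it to finish with `Thread.join()`:

```python
import threading

def greet(name, messages):
    # hypothetical task: record which thread actually ran this function
    messages.append(f'{name} ran in {threading.current_thread().name}')

def run_one_thread():
    messages = []
    # configure a new thread that will call greet('greet', messages)
    worker = threading.Thread(target=greet, args=('greet', messages), name='Worker')
    worker.start()   # begin executing greet() concurrently with the caller
    worker.join()    # block until the new thread has finished
    # also report the name of the calling thread (the main thread when run as a script)
    return threading.current_thread().name, messages

if __name__ == '__main__':
    print(run_one_thread())  # ('MainThread', ['greet ran in Worker'])
```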

You can learn more about Python threads in the guide:

- Threading in Python: The Complete Guide

Threads can share data with each other using thread-safe queues, such as the queue.Queue class.

A problem when using queues is knowing when all items in the queue have been processed by consumer threads.

How can we know when all items have been processed in a queue?


Why Care When All Tasks Are Done

There are many reasons why a thread may want to know when all tasks in a queue have been processed.

For example:

- A producer thread may want to wait until all work is done before adding new work.

- A producer thread may want to wait for all tasks to be done before sending a shutdown signal.

- A main thread may want to wait for all tasks to be done before terminating the program.

There are two aspects to this:

- A thread blocking until tasks are done.

- A task being done is more than being retrieved from the queue.

Specifically, waiting means that the thread is blocked until the condition is met.

For all tasks to be processed, it is not enough that every item put on the queue has been retrieved; each item must also have been processed by the thread that retrieved it.
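The difference between "retrieved" and "done" can be checked directly. In this minimal sketch (the function name is hypothetical), the queue is empty after a single `get()`, yet a helper thread calling `Queue.join()` stays blocked until `task_done()` is called for the retrieved item:

```python
import threading
from queue import Queue

def empty_is_not_done():
    q = Queue()
    q.put('task')
    q.get()                       # retrieve the only item...
    queue_was_empty = q.empty()   # ...so the queue is now empty

    # helper thread that blocks until every task is marked done
    waiter = threading.Thread(target=q.join, daemon=True)
    waiter.start()
    waiter.join(timeout=0.2)      # give q.join() a moment to return (it will not)
    still_waiting = waiter.is_alive()  # True: retrieved but not yet marked done

    q.task_done()                 # signal that processing of the item is complete
    waiter.join(timeout=2)
    released = not waiter.is_alive()   # True: q.join() has now returned
    return queue_was_empty, still_waiting, released

if __name__ == '__main__':
    print(empty_is_not_done())  # (True, True, True)
```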

Next, let’s look at how we might do this in Python.


How to Know When All Tasks Are Done

Python provides thread-safe queue data structures in the queue module, such as the queue.Queue, queue.LifoQueue and queue.PriorityQueue classes.

Objects can be added to the queue via calls to Queue.put() and removed from the queue via calls to Queue.get().
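The three classes share the same put() and get() interface but differ in retrieval order. A minimal single-threaded sketch (the helper names `drain` and `compare_orders` are hypothetical) puts the same values into each kind of queue and reads them back:

```python
from queue import Queue, LifoQueue, PriorityQueue

def drain(q):
    # single-threaded only: empty() is reliable here because nothing else touches q
    items = []
    while not q.empty():
        items.append(q.get())
    return items

def compare_orders(values):
    results = {}
    for cls in (Queue, LifoQueue, PriorityQueue):
        q = cls()
        for v in values:
            q.put(v)
        results[cls.__name__] = drain(q)
    return results

if __name__ == '__main__':
    print(compare_orders([3, 1, 2]))
    # {'Queue': [3, 1, 2], 'LifoQueue': [2, 1, 3], 'PriorityQueue': [1, 2, 3]}
```

Queue returns items first-in-first-out, LifoQueue last-in-first-out, and PriorityQueue lowest value first.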

Thread-safe means that multiple threads may put and get items from the queue concurrently without fear of a race condition or corruption of the internal data structure.
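One rough illustration (not a proof) of this guarantee: in the sketch below, several threads call put() at the same time with no extra lock, and no items are lost. The thread and item counts are arbitrary assumptions.

```python
import threading
from queue import Queue

def concurrent_puts(n_threads=4, items_per_thread=1000):
    q = Queue()

    def producer(thread_id):
        # each thread adds its own items without any explicit locking
        for i in range(items_per_thread):
            q.put((thread_id, i))

    threads = [threading.Thread(target=producer, args=(t,)) for t in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # all producers have finished, so qsize() is exact at this point
    return q.qsize()

if __name__ == '__main__':
    print(concurrent_puts())  # 4000
```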

A thread can block and be notified when all current items or tasks on the queue are done by calling the Queue.join() function.

```python
...
# block until all tasks on the queue are marked done
queue.join()
```

Block means that the calling thread will wait until the condition is met, specifically that all tasks are done. The Queue.join() function will not return until then.

Notified means that the blocked thread will be woken up and allowed to proceed when all tasks are done. This means that the join() function will return at this time allowing the thread to continue on with the next instructions.

Multiple different threads may join the queue and await the state that all tasks are marked done.
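A minimal sketch of several threads waiting on the same queue (the function and variable names are hypothetical): every waiter is released by the single task_done() call that brings the count of unfinished tasks to zero.

```python
import threading
from queue import Queue

def notify_many(n_waiters=3):
    q = Queue()
    q.put('task')
    woken = []
    lock = threading.Lock()

    def waiter(waiter_id):
        q.join()                  # block until all tasks are marked done
        with lock:
            woken.append(waiter_id)

    waiters = [threading.Thread(target=waiter, args=(i,), daemon=True)
               for i in range(n_waiters)]
    for w in waiters:
        w.start()

    q.get()
    q.task_done()                 # the only task is done: all waiters are released
    for w in waiters:
        w.join(timeout=2)
    return sorted(woken)

if __name__ == '__main__':
    print(notify_many())  # [0, 1, 2]
```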

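If there are no unfinished tasks, for example because nothing has been added yet or because every item added has already been marked done, then the join() function will return immediately. An empty queue is not the same thing: items that have been retrieved with get() but not yet marked with task_done() still count as unfinished, so join() keeps blocking.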
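A minimal sketch of both "no unfinished tasks" cases (the function name is hypothetical): join() on a brand-new queue, and join() after every item put has been retrieved and marked done.

```python
from queue import Queue

def join_without_unfinished_tasks():
    q = Queue()
    q.join()        # nothing has ever been put, so this returns at once

    q.put('item')
    q.get()
    q.task_done()   # the only task is marked done
    q.join()        # no unfinished tasks remain, so this also returns at once
    return q.empty()

if __name__ == '__main__':
    print(join_without_unfinished_tasks())  # True
```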

The count of unfinished tasks goes up whenever an item is added to the queue. The count goes down whenever a consumer thread calls task_done() to indicate that the item was retrieved and all work on it is complete. When the count of unfinished tasks drops to zero, join() unblocks. — queue — A synchronized queue class

The Queue.join() function only works if threads retrieving items or tasks from the queue via Queue.get() also call the Queue.task_done() function.

```python
...
# get a task from the queue
item = queue.get()
# ... process the item ...
# mark the task as done
queue.task_done()
```

The Queue.task_done() function is called by the consumer thread (i.e. the thread that called Queue.get()) only after it has finished processing the task it retrieved from the queue.

What counts as finished processing is application specific, but it always means more than simply retrieving the task from the queue.

Indicate that a formerly enqueued task is complete. Used by queue consumer threads. For each get() used to fetch a task, a subsequent call to task_done() tells the queue that the processing on the task is complete. — queue — A synchronized queue class
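The calls must balance. The standard library raises ValueError if task_done() is called more times than there were items placed on the queue, as this minimal sketch (function name hypothetical) shows:

```python
from queue import Queue

def extra_task_done():
    q = Queue()
    q.put('only item')
    q.get()
    q.task_done()          # balanced: one put(), one get(), one task_done()
    try:
        q.task_done()      # one call too many
    except ValueError as exc:
        return str(exc)
    return None

if __name__ == '__main__':
    print(extra_task_done())  # task_done() called too many times
```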

If there are multiple consumer threads, then for join() to function correctly, each consumer thread must mark tasks as done.

If processing the task may fail with an unexpected Error or Exception, it is good practice to wrap the processing of the task in a try-finally pattern.

```python
...
# get a task from the queue
item = queue.get()
try:
    # process the item
    ...
finally:
    # mark the task as done or processed
    queue.task_done()
```

This ensures that the task is marked done in the queue, even if processing the task fails.

In turn, any threads blocked on Queue.join() are notified once every item retrieved from the queue has been marked done, avoiding a possible deadlock concurrency failure mode.
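A minimal sketch of the pattern under stated assumptions: `process()` is a hypothetical work function that fails on negative numbers. The except clause is only there so the demo can record the failure; the finally clause is what guarantees task_done() runs, so the final join() still returns.

```python
import threading
from queue import Queue

def process(item):
    # hypothetical work that fails for negative inputs
    if item < 0:
        raise ValueError(f'bad item {item}')
    return item * 2

def run_with_failures(items):
    q = Queue()
    results, errors = [], []

    def consumer():
        while True:
            item = q.get()
            if item is None:           # sentinel: stop consuming
                q.task_done()
                break
            try:
                results.append(process(item))
            except ValueError as exc:
                errors.append(str(exc))  # record the failure for the demo
            finally:
                q.task_done()            # always mark the item as done

    threading.Thread(target=consumer, daemon=True).start()
    for item in items:
        q.put(item)
    q.put(None)
    q.join()   # returns even though one item failed
    return results, errors

if __name__ == '__main__':
    print(run_with_failures([1, -2, 3]))  # ([2, 6], ['bad item -2'])
```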

Now that we are familiar with the Queue.task_done() and Queue.join() functions, let’s look at some worked examples.


Example of Queue Join and Task Done

We can explore an example of how to use join() and task_done() .

In this example we will have a producer thread that will add ten tasks to the queue and then signal that no further tasks are to be expected. The consumer thread will get the tasks, process them and mark them as done. When the signal to exit is received, the consumer thread will terminate. The main thread will wait for the producer thread to add all items to the queue, and will wait for all items on the queue to be processed before moving on.

Let’s dive in.

Producer Thread

First, we can define the function to be executed by the producer thread.

The function will loop ten times.

```python
...
print('Producer starting')
# add tasks to the queue
for i in range(10):
    # ...
```

Each iteration, it will generate a new random value between 0 and 1 via the random.random() function. It will then pair the loop index (an integer from 0 to 9) with the generated value in a tuple and put the tuple on the queue.

```python
...
# generate a task
task = (i, random())
print(f'.producer added {task}')
# add it to the queue
queue.put(task)
```

Finally, the producer will put the value None on the queue to signal to the consumer that there are no further tasks.

This is called a sentinel value and is a common way for threads to communicate via queues to signal an important event, like a shutdown.

```python
...
# send a signal that no further tasks are coming
queue.put(None)
print('Producer finished')
```
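The example in this tutorial has a single consumer, so one sentinel is enough. As an extension beyond the tutorial's example (all names here are hypothetical), note that a sentinel stops only the consumer that retrieves it, so with several consumers the producer would put one sentinel per consumer:

```python
import threading
from queue import Queue

def consumer(q, results):
    while True:
        item = q.get()
        if item is None:           # sentinel: this consumer should stop
            q.task_done()
            break
        results.append(item * 10)  # stand-in for real processing
        q.task_done()

def run_consumers(n_consumers=3, n_items=5):
    q = Queue()
    results = []
    threads = [threading.Thread(target=consumer, args=(q, results), daemon=True)
               for _ in range(n_consumers)]
    for t in threads:
        t.start()
    for i in range(n_items):
        q.put(i)
    for _ in range(n_consumers):
        q.put(None)                # one sentinel per consumer thread
    q.join()
    for t in threads:
        t.join(timeout=2)
    # return the processed values and how many consumers are still running
    return sorted(results), sum(t.is_alive() for t in threads)

if __name__ == '__main__':
    print(run_consumers())  # ([0, 10, 20, 30, 40], 0)
```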

The producer() function below implements this by taking the queue instance as an argument.

```python
from random import random

# producer task: adds ten tasks and then a sentinel to the queue
def producer(queue):
    print('Producer starting')
    # add tasks to the queue
    for i in range(10):
        # generate a task
        task = (i, random())
        print(f'.producer added {task}')
        # add it to the queue
        queue.put(task)
    # send a signal that no further tasks are coming
    queue.put(None)
    print('Producer finished')
```

Consumer Thread

Next, we can define the function to be executed by the consumer thread.

The consumer thread will loop forever.

```python
...
print('Consumer starting')
# process items from the queue
while True:
    # ...
```

Each iteration, it will get an item from the queue and block if there is no item yet available.

```python
...
# get a task from the queue
task = queue.get()
```

If the item retrieved from the queue is the value None, the consumer breaks out of the loop so that the thread can terminate.

```python
...
# check for signal that we are done
if task is None:
    break
```

Otherwise, the fractional value is passed to time.sleep() to block for that many seconds, simulating work, and the task is then reported. The item is then marked as processed via a call to task_done().

```python
...
# process the item
sleep(task[1])
print(f'.consumer got {task}')
# mark the unit of work as processed
queue.task_done()
```

Finally, just prior to the thread exiting, it will mark the signal to terminate as processed.

```python
...
# mark the signal as processed
queue.task_done()
print('Consumer finished')
```

The consumer() function below implements this and takes the queue instance as an argument.

```python
from time import sleep

# consumer task: processes tasks until it receives the sentinel
def consumer(queue):
    print('Consumer starting')
    # process items from the queue
    while True:
        # get a task from the queue
        task = queue.get()
        # check for signal that we are done
        if task is None:
            break
        # process the item
        sleep(task[1])
        print(f'.consumer got {task}')
        # mark the unit of work as processed
        queue.task_done()
    # mark the signal as processed
    queue.task_done()
    print('Consumer finished')
```

Create Queue and Threads

In the main thread we can create the shared queue instance.

```python
...
# create the shared queue
queue = Queue()
```

Then we can configure and start the producer thread, which will generate tasks and add them to the queue for the consumer to retrieve.

```python
...
# start the producer thread
producer_thread = Thread(target=producer, args=(queue,))
producer_thread.start()
```

We can then configure and start the consumer thread, which will patiently wait for work to arrive on the queue.

```python
...
# start the consumer thread
consumer_thread = Thread(target=consumer, args=(queue,))
consumer_thread.start()
```

The main thread will then block until the producer thread has added all work to the queue and the thread has terminated.

```python
...
# wait for the producer to add all tasks to the queue
producer_thread.join()
print('Main found that the producer has finished')
```

The main thread will then block on the queue with a call to join() until the consumer has retrieved all values from the queue and processed them appropriately. This includes the final signal that there are no further task items to process.

```python
...
# wait for all tasks on the queue to be marked done
queue.join()
print('Main found that all tasks are processed')
```

It is important that the main thread blocks on the producer thread first, before blocking on the queue. This is to avoid a possible race condition.

For example, if the main thread joined the queue directly, the queue might have no unfinished tasks at that moment (e.g. the producer has not added anything yet), in which case the call would return immediately. Alternatively, it may join at a time when only a few tasks have been added; the consumer thread processes them and the join call returns before the remaining tasks have even been added.

The problem is that in both of these cases, we don’t know if the call to join returned because all tasks were marked done or just a subset of tasks that had been added to the queue at the time join was called.
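The first case can be reproduced deterministically. In this minimal sketch (all names are hypothetical, and a threading.Event is used only to simulate a producer that starts late), joining the queue before the producer has finished returns immediately with no work done, while joining the producer thread first and then the queue waits for every task:

```python
import threading
from queue import Queue

def join_order_demo():
    q = Queue()
    start_producing = threading.Event()
    processed = []

    def producer():
        start_producing.wait()    # simulate a producer that starts late
        for i in range(3):
            q.put(i)
        q.put(None)               # sentinel: no further tasks

    def consumer():
        while True:
            item = q.get()
            if item is None:
                q.task_done()     # mark the sentinel as processed too
                break
            processed.append(item)
            q.task_done()

    producer_thread = threading.Thread(target=producer)
    consumer_thread = threading.Thread(target=consumer)
    producer_thread.start()
    consumer_thread.start()

    # wrong order: nothing has been added yet, so this returns immediately
    q.join()
    done_after_early_join = len(processed)

    start_producing.set()         # let the producer add its tasks
    producer_thread.join()        # right order: wait for the producer first...
    q.join()                      # ...then for every task to be marked done
    consumer_thread.join()
    return done_after_early_join, processed

if __name__ == '__main__':
    print(join_order_demo())  # (0, [0, 1, 2])
```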