DHCP Static Binding on Cisco IOS

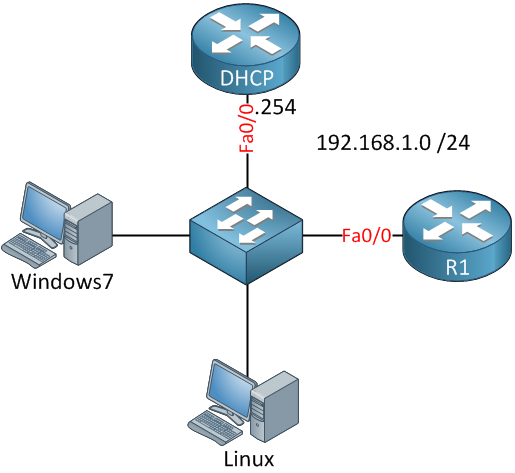

Cisco IOS devices can be configured as DHCP servers, and it’s also possible to configure a static binding for certain hosts. This might sound easy, but there’s a catch. In this lesson, I’ll show you how to configure static bindings for a Cisco router, a Windows 7 host, and a Linux host. This is the topology I’ll be using:

The router called “DHCP” will be the DHCP server; R1 and the two computers will be DHCP clients. Everything is connected to a switch and we’ll use the 192.168.1.0 /24 subnet. The idea is to create a DHCP pool and use static bindings for the two computers and R1:

- R1: 192.168.1.100

- Windows 7: 192.168.1.110

- Linux: 192.168.1.120

First, we will create a new DHCP pool for the 192.168.1.0 /24 subnet:

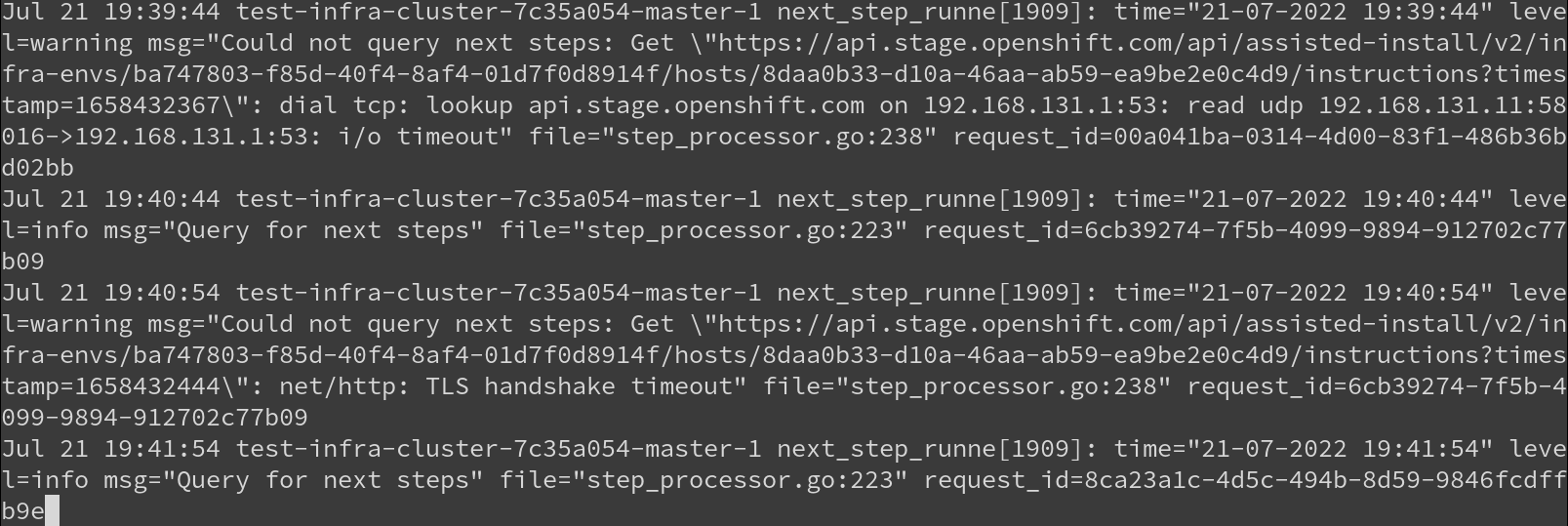

Whenever a DHCP client sends a DHCP discover it will send its client identifier or MAC address. We can see this if we enable a debug on the DHCP server:

Cisco Router DHCP Client

Now we’ll configure R1 to request an IP address:

In a few seconds you will see the following message on the DHCP server:

When a Cisco router sends a DHCP Discover message, it includes a client identifier that uniquely identifies the device. We can use this value to configure a static binding. Here’s what it looks like:

We create a new pool called “R1-STATIC” with the IP address we want to use for R1 and its client identifier. We’ll renew the IP address on R1 to see what happens:

Use the renew dhcp command or do a ‘shut’ and ‘no shut’ on the interface of R1 and you’ll see this on the DHCP server:

As you can see above the DHCP server uses the client identifier for the static binding and assigns IP address 192.168.1.100 to R1. If you don’t like these long numbers, you can also configure R1 to use the MAC address as the client identifier instead:

This tells the router to use the MAC address of its FastEthernet 0/0 interface as the client identifier. You’ll see this change on the DHCP server:

Of course, now we have to change the binding on the DHCP server to match the MAC address:

Do another release on R1:

And you’ll see that R1 gets its correct IP address from the DHCP server and is being identified with its MAC address:

So that’s how the Cisco router requests an IP address. Let’s look at the Windows 7 host now to see if there’s a difference.
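The "long numbers" in the default client identifier are easier to read once decoded. The sketch below assumes the common IOS behaviour where the identifier is a zero byte followed by the ASCII string `cisco-<MAC>-<interface>`, displayed as dotted groups of four hex digits. That layout is an assumption about your platform, and the router MAC used here is hypothetical.

```python
def encode_client_id(text: str) -> str:
    """Encode an ASCII identifier as dotted hex, as it appears in debug output."""
    raw = b"\x00" + text.encode("ascii")  # leading zero byte: not a hardware address
    digits = raw.hex()
    return ".".join(digits[i:i + 4] for i in range(0, len(digits), 4))


def decode_client_id(dotted: str) -> str:
    """Turn a dotted-hex client identifier back into readable text."""
    raw = bytes.fromhex(dotted.replace(".", ""))
    return raw.lstrip(b"\x00").decode("ascii")


# Hypothetical router: MAC c201.1dc0.0000 on interface Fa0/0.
example = encode_client_id("cisco-c201.1dc0.0000-Fa0/0")
print(example)
print(decode_client_id(example))
```

Decoding the value from your own debug output should reveal which MAC address and interface the router used to build its identifier.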

Windows 7 DHCP Client

This is what you’ll find on the DHCP server:

Windows 7 uses its MAC address as the client identifier. We can verify this by looking at ipconfig:

That’s easy enough. We’ll create another static binding on the DHCP server so that our Windows 7 computer receives IP address 192.168.1.110:

Let’s verify our work:

This is what the debug on the DHCP server will tell us:

There you go, Windows 7 has received the correct IP address. Last but not least is our Linux computer, which acts a little differently.
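When a client identifies itself by MAC address, the client identifier (DHCP option 61) is the hardware type, 01 for Ethernet, followed by the MAC address. Here is a minimal Python sketch that converts a MAC address into that dotted form and renders a binding using the IOS `host` and `client-identifier` pool subcommands. The pool name `WIN7-STATIC` and the MAC address are hypothetical placeholders, not values from this lab.

```python
import re


def mac_to_client_id(mac: str) -> str:
    """Prefix the MAC with hardware type 01 and format it as dotted hex."""
    digits = re.sub(r"[^0-9A-Fa-f]", "", mac).lower()
    if len(digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    digits = "01" + digits
    return ".".join(digits[i:i + 4] for i in range(0, len(digits), 4))


def static_binding(pool: str, ip: str, mask: str, mac: str) -> str:
    """Render the configuration lines for one static binding."""
    return "\n".join([
        f"ip dhcp pool {pool}",
        f" host {ip} {mask}",
        f" client-identifier {mac_to_client_id(mac)}",
    ])


# Hypothetical Windows 7 host.
print(static_binding("WIN7-STATIC", "192.168.1.110", "255.255.255.0",
                     "00-AE-0D-FF-BE-56"))
```

Compare the generated identifier with what the debug on the DHCP server reports before relying on it.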

Linux DHCP Client

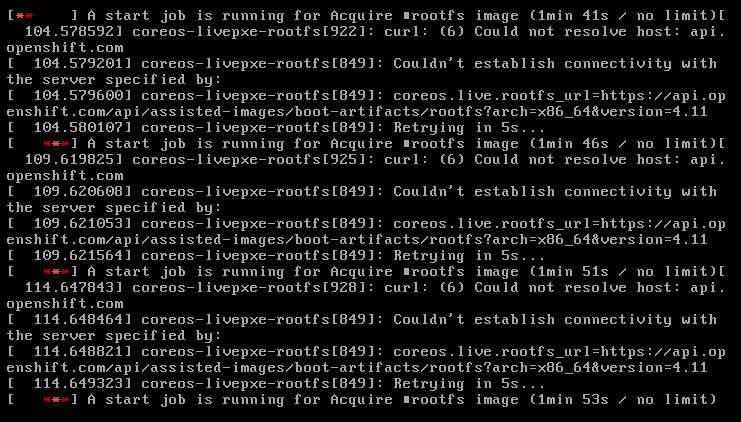

Linux (Ubuntu, in my example) acts a little differently as a DHCP client. Let me show you:

The DHCP server shows this:

We see the MAC address of the Linux server so we’ll create a static binding that matches this:

We’ll release the IP address on our Linux host:

Now take a good look at the debug:

That’s not good, even though we configured the client identifier, it’s not working. Let’s double-check the MAC address:

Content created by Rene Molenaar (CCIE #41726)

1057 Sign Ups in the last 30 days

Tags: DHCP , Network Services

Forum Replies

Thanks for the info Rene As always complete posts!

how to reserve a single ip for pc in router ? ?

That is exactly what this lesson is about…

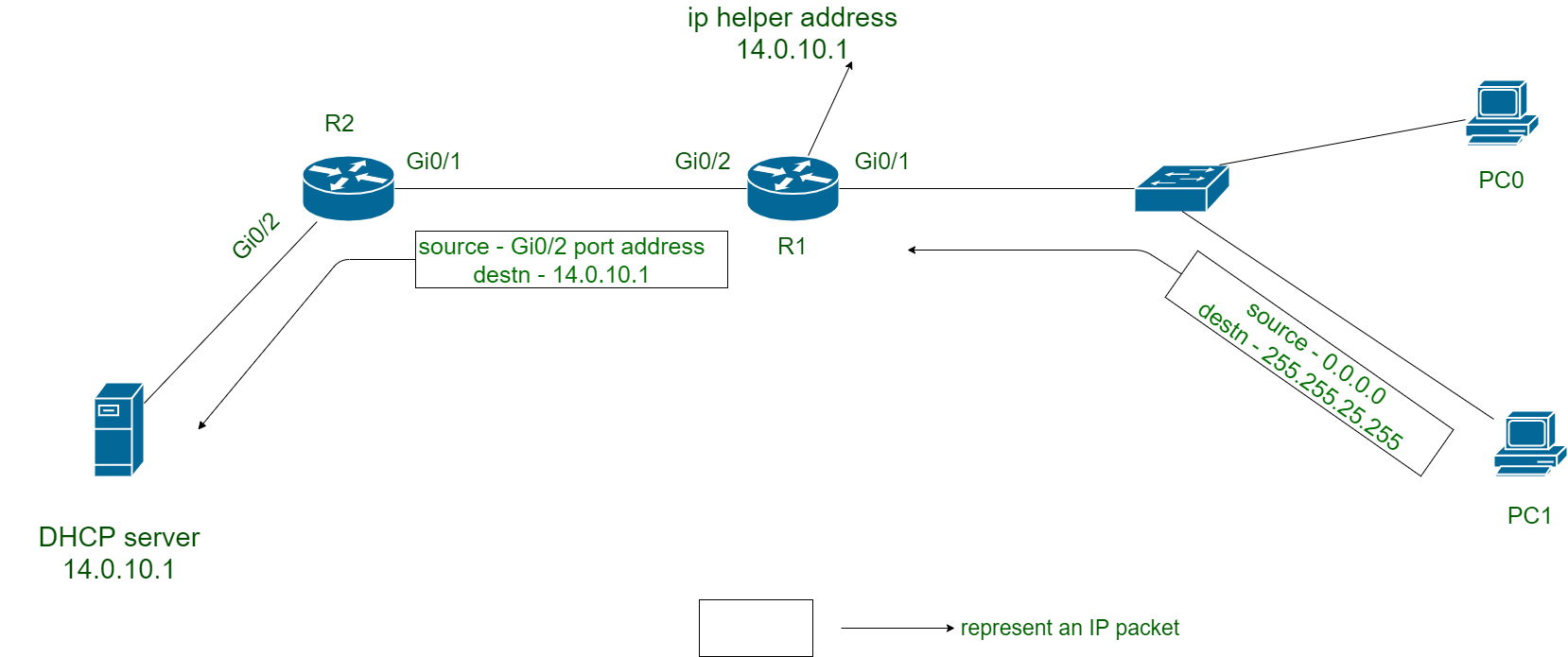

I have some challenges with CCNA R&S lab about DHCP/DHCP relay.The lab number is 10.1.3.3 assuming that you have access to the new CCNA R&S oficial course… The lab has two clients, two intermediary routers and another router connected to the intermediary routers via serials. Intermediary router are R1 and R3, the central router is R2. To R2 are connected via Ethernet GIgabit interfaces a DNS Seriver and the ISP The lab tell me to do the R2 a dhcp server for the two PC’s connected to the intermediary routers R1 and R2 so they receive an IP… The challenge

Hi Catalin,

Your message wasn’t deleted but not approeved before, I do this manually because of spam. I think this example should help you:

https://networklessons.com/cisco/ccie-routing-switching/cisco-ios-dhcp-relay-agent

If not, let me know.

17 more replies! Ask a question or join the discussion by visiting our Community Forum

- Log in / create account

- Privacy Policy

- Community portal

- Current events

- Recent changes

- Random page

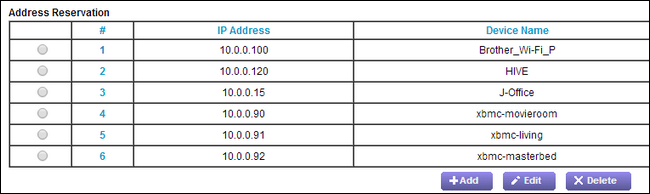

Static DHCP

From dd-wrt wiki.

Introduction

Static DHCP (aka DHCP reservation) is a useful feature which makes the DHCP server on your router always assign the same IP address to a specific computer on your LAN . To be more specific, the DHCP server assigns this static IP to a unique MAC address assigned to each NIC on your LAN. Your computer boots and requests its IP from the router's DHCP server. The DHCP server recognizes the MAC address of your device's NIC and assigns the static IP address to it. (Note also that, currently, each reserved IP address must also be unique. Therefore, e.g., one cannot reserve the same IP address for both the wired and wireless interfaces of a device, even though the device may be configured such that only one interface is active at any given time.)

Static DHCP will be needed if you want an interface to always have the same IP address. Sometimes required for certain programs, this feature is useful if other people on your LAN know your IP and access your PC using this IP. Static DHCP should be used in conjunction with Port Forwarding. If you forward an external WAN TCP/UDP port to a port on a server running inside your LAN, you have to give that server a static IP, and this can be achieved easily through Static DHCP.

Configuration

- Log into the DD-WRT Web GUI

- Go to the Services tab

- DHCP Daemon should be enabled

- If there is no blank slot under "Static Leases", click Add

- Enter the MAC address of the client interface, the hostname of the machine, and the desired IP address for this machine. Note that you cannot reserve the same IP address for two different MAC addresses (e.g. both the wired and wireless interfaces of a device).

- Scroll to the bottom of the page and save your changes.

Note: You must either Save or Apply the page each time you've added and filled out a new static lease. Clicking the Add button refreshes the page without saving what you entered. If you try to add multiple blank leases and fill them all out at once then you will encounter a bug that the GUI thinks they are duplicate entries.

Note: A blank lease duration means it will be an infinite lease (never expires). Setting a lease duration will allow you to change the static lease information later on and have the host automatically get the new information without having to manually release/renew the lease on the host.

DHCP Options

The DHCP system assigns IP addresses to your local devices. While the main configuration is on the setup page you can program some nifty special functions here.

DHCP Daemon : Disabling here will disable DHCP on this router irrespective of the settings on the Setup screen.

Used domain : You can select here which domain the DHCP clients should get as their local domain. This can be the WAN domain set on the Setup screen or the LAN domain which can be set here.

LAN Domain : You can define here your local LAN domain which is used as local domain for DNSmasq and DHCP service if chosen above.

Static Leases : Assign certain hosts specific addresses here. This is also a way to add hosts to the router's local DNS service (DNSmasq).

Note: It is recommended but not necessary to set your static leases outside of your automatic DHCP address range. This range is 192.168.1.100-192.168.1.149 by default and can be configured under Setup -> Basic Setup : Network Address Server Settings (DHCP) .

Example

To assign the IP address 192.168.1.12 and the hostname "mypc" to a PC with a network card having the MAC address 00:AE:0D:FF:BE:56 you should press Add then enter 00:AE:0D:FF:BE:56 into the MAC field, mypc into the HOST field and 192.168.1.12 into the IP field.

Remember: If you press the 'Add' button before saving the entries you just made, they will be cleared. This is normal behavior.

How to add static leases into DHCP by command

If more than two...just keep adding them to the static_leases variable with a space between each.

Don't forget the double quotes...if you have any spaces (more than one lease) you must include the quotes to the variable.

NOTE: Starting with build 13832 the format has changed to the following:

Note the '=' sign at the end of each lease. To set a lease time, put a number (in minutes) after the last '='. In the second case above, a blank after the '=' sign means it's an indefinite lease; 1440 means 24 hours.
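For a long list of leases, generating the value for the static_leases variable can be less error-prone than typing it by hand. This Python sketch assumes the post-13832 layout is MAC=hostname=IP=lease, in the same order as the MAC, HOST, and IP fields in the GUI, with entries separated by single spaces. The second lease is a made-up example.

```python
def build_static_leases(leases):
    """Build the static_leases value from (mac, host, ip, minutes) tuples.

    minutes=None leaves the last field blank, i.e. an indefinite lease.
    """
    parts = []
    for mac, host, ip, minutes in leases:
        lease_time = "" if minutes is None else str(minutes)
        parts.append(f"{mac}={host}={ip}={lease_time}")
    return " ".join(parts)


value = build_static_leases([
    ("00:AE:0D:FF:BE:56", "mypc", "192.168.1.12", None),   # indefinite lease
    ("00:AE:0D:FF:BE:57", "nas", "192.168.1.13", 1440),    # hypothetical, 24 hours
])
# Remember to quote the value when setting the variable: it contains spaces.
print(value)
```

Check the result against "nvram show | grep static" on your own build before committing, since the exact format depends on the firmware version.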

If you have success setting a couple of entries, but have difficulty setting lots of entries, then you may be encountering a length limitation for your given method and firmware combination. A workaround for this is to ssh or telnet to the router and enter it there. If you still run into a length problem then you could use vi (or other method) to enter your configuration into a file and then run the file. Here is what I saved into a file and ran with success (I needed single quotes instead of double quotes in my case):

I then ran the file (which is named myiplist.sh) like so:

Also remember tools such as "nvram show | grep static" as well as "nvram commit".

Troubleshooting

If you cannot ping a hostname, append a period to the end. I.e. instead of "ping server" try "ping server."

If the static reservations are visible, but your machines continue to get a normal DHCP IP try going to the Setup page. Hit Save and then Apply settings. The DHCP daemon should restart and you may lose connection briefly. Try renewing your DHCP lease and you should be getting the correct IP at this point.

How to Configure Static IP Address on Ubuntu 20.04

Published on Sep 15, 2020

This article explains how to set up a static IP address on Ubuntu 20.04.

Typically, in most network configurations, the IP address is assigned dynamically by the router DHCP server. Setting a static IP address may be required in different situations, such as configuring port forwarding or running a media server .

Configuring Static IP address using DHCP

The easiest and recommended way to assign a static IP address to a device on your LAN is to configure a Static DHCP on your router. Static DHCP or DHCP reservation is a feature found on most routers that makes the DHCP server automatically assign the same IP address to a specific network device each time the device requests an address. This works by assigning a static IP to the device’s unique MAC address.

The steps for configuring a DHCP reservation vary from router to router. Consult the vendor’s documentation for more information.

Ubuntu 17.10 and later use Netplan as the default network management tool. Previous Ubuntu versions used ifupdown and its configuration file /etc/network/interfaces to configure the network.

Netplan configuration files are written in YAML syntax with a .yaml file extension. To configure a network interface with Netplan, you need to create a YAML description for the interface, and Netplan will generate the required configuration files for the chosen renderer tool.

Netplan supports two renderers, NetworkManager and Systemd-networkd. NetworkManager is mostly used on Desktop machines, while the Systemd-networkd is used on servers without a GUI.

Configuring Static IP address on Ubuntu Server

On Ubuntu 20.04, the system identifies network interfaces using ‘predictable network interface names’.

The first step toward setting up a static IP address is identifying the name of the ethernet interface you want to configure. To do so, use the ip link command, as shown below:

The command prints a list of all the available network interfaces. In this example, the name of the interface is ens3 :

Netplan configuration files are stored in the /etc/netplan directory. You’ll probably find one or more YAML files in this directory. The name of the file may differ from setup to setup. Usually, the file is named either 01-netcfg.yaml , 50-cloud-init.yaml , or NN_interfaceName.yaml , but in your system it may be different.

If your Ubuntu cloud instance is provisioned with cloud-init, you’ll need to disable it. To do so create the following file:

To assign a static IP address on the network interface, open the YAML configuration file with your text editor :

Before changing the configuration, let’s briefly explain the code.

Each Netplan YAML file starts with the network key that has at least two required elements. The first required element is the version of the network configuration format, and the second one is the device type. The device type can be ethernets, bonds, bridges, or vlans.

The configuration above also has a line that shows the renderer type. Out of the box, if you installed Ubuntu in server mode, the renderer is configured to use networkd as the back end.

Under the device’s type ( ethernets ), you can specify one or more network interfaces. In this example, we have only one interface ens3 that is configured to obtain IP addressing from a DHCP server dhcp4: yes .

To assign a static IP address to ens3 interface, edit the file as follows:

- Set DHCP to dhcp4: no .

- Specify the static IP address. Under addresses: you can add one or more IPv4 or IPv6 IP addresses that will be assigned to the network interface.

- Specify the gateway.

- Under nameservers , set the IP addresses of the nameservers.

When editing YAML files, make sure you follow the YAML indentation rules. If the syntax is not correct, the changes will not be applied.
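The four steps above can be sketched as a small Python helper that renders a Netplan file with consistent indentation and refuses a gateway outside the interface's subnet. All addresses here are hypothetical placeholders, and the `gateway4` key reflects the Ubuntu 20.04 era of Netplan; newer releases prefer a `routes` entry, so treat this as an illustration rather than a template for every version.

```python
import ipaddress


def render_netplan(iface, address, gateway, nameservers):
    """Render a static-address Netplan config for one ethernet interface."""
    net = ipaddress.ip_interface(address)
    if ipaddress.ip_address(gateway) not in net.network:
        raise ValueError("gateway is outside the interface's subnet")
    dns = ", ".join(nameservers)
    lines = [
        "network:",
        "  version: 2",
        "  renderer: networkd",
        "  ethernets:",
        f"    {iface}:",
        "      dhcp4: no",
        "      addresses:",
        f"        - {net.with_prefixlen}",
        f"      gateway4: {gateway}",
        "      nameservers:",
        f"        addresses: [{dns}]",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical values for the ens3 interface.
print(render_netplan("ens3", "192.168.121.221/24", "192.168.121.1",
                     ["8.8.8.8", "1.1.1.1"]))
```

Generating the file this way keeps every nesting level at two spaces, which avoids the indentation mistakes that stop Netplan from applying a change.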

Once done, save the file and apply the changes by running the following command:

Verify the changes by typing:

That’s it! You have assigned a static IP to your Ubuntu server.

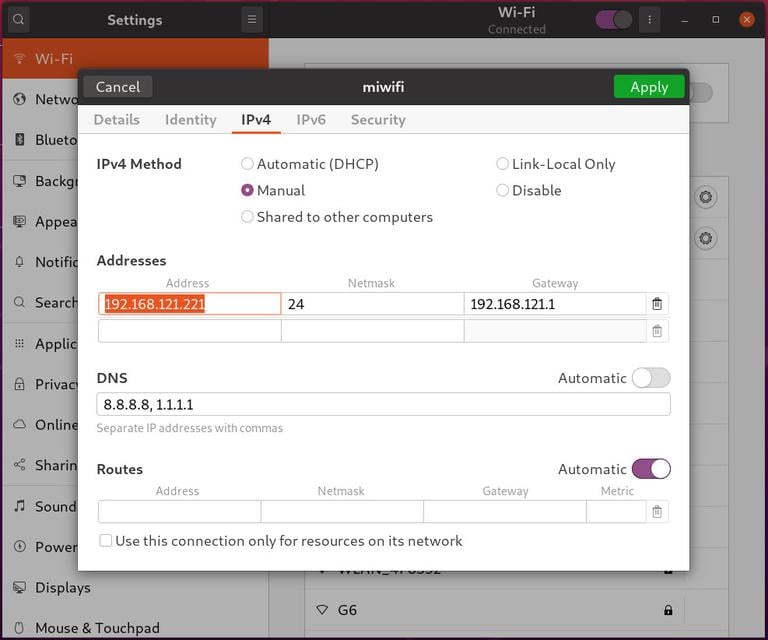

Configuring Static IP address on Ubuntu Desktop

Setting up a static IP address on Ubuntu Desktop computers requires no technical knowledge.

In the Activities screen, search for “settings” and click on the icon. This will open the GNOME settings window. Depending on the interface you want to modify, click either on the Network or Wi-Fi tab. To open the interface settings, click on the cog icon next to the interface name.

In the “IPv4” tab, under “IPv4 Method”, select “Manual” and enter your static IP address, Netmask and Gateway. Once done, click on the “Apply” button.

To verify the changes, open your terminal either by using the Ctrl+Alt+T keyboard shortcut or by clicking on the terminal icon and run:

The output will show the interface IP address:

Conclusion

We’ve shown you how to configure a static IP address on Ubuntu 20.04.

Static and dynamic IP address configurations: DHCP deployment

by Damon Garn

In my Static and dynamic IP address configurations for DHCP article, I discussed the pros and cons of static versus dynamic IP address allocation. Typically, sysadmins will manually configure servers and network devices (routers, switches, firewalls, etc.) with static IP address configurations. These addresses don’t change (unless the administrator changes them), which is important for making services easy to find on the network.

With dynamic IP configurations, client devices lease an IP configuration from a Dynamic Host Configuration Protocol (DHCP) server. This server is configured with a pool of available IPs and other settings. Clients contact the server and temporarily borrow an IP address configuration.

In this article, I demonstrate how to configure DHCP on a Linux server.

Manage the DHCP service

First, install the DHCP service on your selected Linux box. This box should have a static IP address. DHCP is a very lightweight service, so feel free to co-locate other services such as name resolution on the same device.

Note : By using the -y option, yum will automatically install any dependencies necessary.

Configure a DHCP scope

Next, edit the DHCP configuration file to set the scope. However, before this step, you should make certain you understand the addressing scheme in your network segment. In my courses, I recommend establishing the entire range of addresses, then identifying the static IPs within the range. Next, determine the remaining IPs that are available for DHCP clients to lease. The following information details this process.

How many static IP addresses?

Figure out how many servers, routers, switches, printers, and other network devices will require static IP addresses. Add some additional addresses to this group to account for network growth (it seems like we’re always deploying more print devices).

What are the static and dynamic IP address ranges?

Set the range of static IPs in a distinct group. I like to use the front of the available address range. For example, in a simple Class C network of 192.168.2.0/24, I might set aside 192.168.2.1 through 192.168.2.50 for static IPs. If that’s true, you may assume I have about 30 devices that merit static IP addresses, and I have left about twenty addresses to grow into. Therefore, the available address space for DHCP is 192.168.2.51 through 192.168.2.254 (remember, 192.168.2.255 is the subnet broadcast address).
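The split described above is easy to check with Python's standard ipaddress module. This is only a sketch of the arithmetic, using the same 192.168.2.0/24 example and a static block of 50 addresses.

```python
import ipaddress


def plan_ranges(cidr, static_count):
    """Split a subnet's usable hosts into a static block and a DHCP block."""
    hosts = list(ipaddress.ip_network(cidr).hosts())  # excludes network and broadcast
    return hosts[:static_count], hosts[static_count:]


static, dynamic = plan_ranges("192.168.2.0/24", 50)
print("static :", static[0], "-", static[-1], f"({len(static)} addresses)")
print("dynamic:", dynamic[0], "-", dynamic[-1], f"({len(dynamic)} addresses)")
print("broadcast:", ipaddress.ip_network("192.168.2.0/24").broadcast_address)
```

With these inputs the DHCP block starts at 192.168.2.51 and ends at 192.168.2.254, leaving 204 addresses available for leases.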

This screenshot from the part one article is a reminder:

Note : Some administrators include the static IPs in the scope and then manually mark them as excluded or unavailable to the DHCP service for leasing. I’m not a fan of this approach. I prefer that the DHCP not even be aware of the addresses that are statically assigned.

What is the router’s IP address?

Document the router’s IP address because this will be the default gateway value. Administrators tend to choose either the first or the last address in the static range. In my case, I’d configure the router’s IP address as 192.168.2.1/24, so the default gateway value in DHCP is 192.168.2.1.

Where are the name servers?

Name resolution is a critical network service. You should configure clients for at least two DNS name servers for fault tolerance. When set manually, this configuration is in the /etc/resolv.conf file.

Note that the DNS name servers don’t have to be on the same subnet as the DNS clients.

Lease duration

In the next section, I’ll go over the lease generation process whereby clients receive their IP address configurations. For now, suffice it to say that the IP address configuration is temporary. Two values are configured on the DHCP server to govern this lease time:

default-lease-time - The lease length, in seconds, that the server assigns when a client does not request a specific duration.

max-lease-time - The longest lease, in seconds, that the server will grant, even if a client asks for more.

Configure the DHCP server

Now that you understand the IP address assignments in the subnet, you can configure the DHCP scope. The scope is the range of available IP addresses, as well as options such as default gateway. There is good documentation here .

Create the DHCP scope

Begin by editing the dhcpd.conf configuration file (you’ll need root privileges to do so). I prefer Vim:

Next, add the values you identified in the previous section. Here is a subnet declaration (scope):

Remember that spelling counts and typos can cause you a lot of trouble. Check your entries carefully. A mistake in this file can prevent many workstations from having valid network identities.

Reserved IP addresses

It is possible to reserve an IP address for a specific host. This is not the same thing as a statically-assigned IP address. Static IP addresses are configured manually, directly on the client. Reserved IP addresses are leased from the DHCP server, but the given client will always receive the same IP address. The DHCP service identifies the client by MAC address, as seen below.
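In ISC dhcpd, a reservation is usually written as a host declaration containing a hardware ethernet statement and a fixed-address statement. The Python sketch below renders one after validating its inputs. The host name, MAC address, and IP address are hypothetical, so substitute values from your own network.

```python
import ipaddress
import re

MAC_PATTERN = re.compile(r"(?:[0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}")


def render_reservation(name, mac, ip):
    """Render a dhcpd.conf host declaration that reserves ip for mac."""
    if not MAC_PATTERN.fullmatch(mac):
        raise ValueError(f"invalid MAC address: {mac!r}")
    ipaddress.ip_address(ip)  # raises ValueError on a malformed address
    return (
        f"host {name} {{\n"
        f"    hardware ethernet {mac};\n"
        f"    fixed-address {ip};\n"
        f"}}\n"
    )


# Hypothetical client.
print(render_reservation("mypc", "00:AE:0D:FF:BE:56", "192.168.2.45"))
```

Validating before rendering catches the kind of typo that would otherwise only surface when the service fails to start.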

Start the DHCP service

Start and enable the DHCP service. RHEL 7 and 8 rely on systemd to manage services, so you’ll type the following commands:

See this article I wrote for a summary on successfully deploying services.

Don’t forget to open the DHCP port in the firewall:

Explore the DORA process

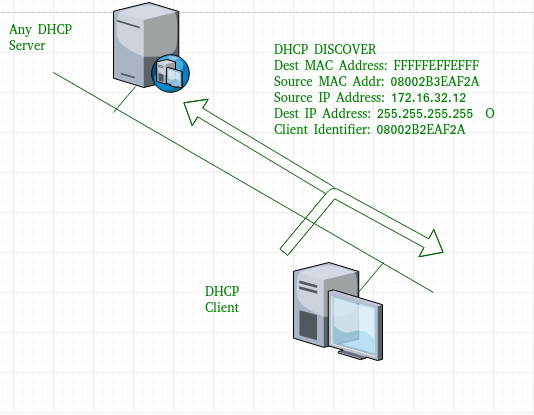

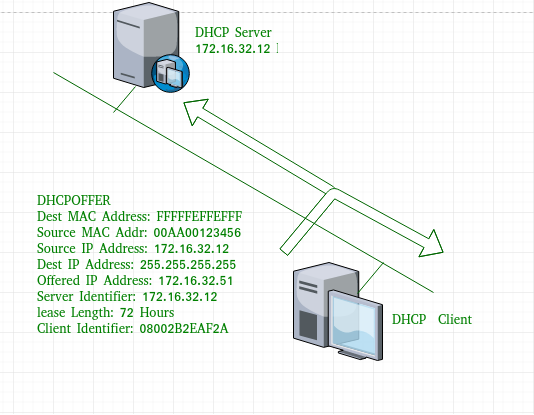

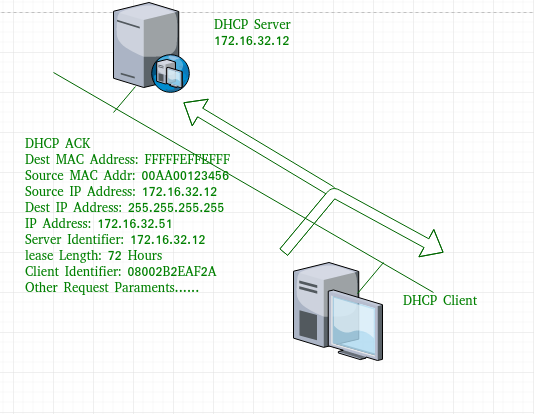

Now that the DHCP server is configured, here is the lease generation process. This is a four-step process, and I like to point out that it is entirely initiated and managed by the client, not the server. DHCP is a very passive network service.

The process is:

- Discover

- Offer

- Request

- Acknowledge

Which spells the acronym DORA .

- The client broadcasts a DHCPDiscover message on the subnet, which the DHCP server hears.

- The DHCP server broadcasts a DHCPOffer on the subnet, which the client hears.

- The client broadcasts a DHCPRequest message, formally requesting the use of the IP address configuration.

- The DHCP server broadcasts a DHCPAck message that confirms the lease.

The lease must be renewed periodically, based on the DHCP Lease Time setting. This is particularly important in today’s networks that often contain many transient devices such as laptops, tablets, and phones. The lease renewal process is steps three and four. Many client devices, especially desktops, will maintain their IP address settings for a very long time, renewing the configuration over and over.
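To make the sequence concrete, here is a toy Python model of the exchange. It only lists who sends which message, for a new lease and for a renewal, and does not touch the network. The function and variable names are my own, not part of any DHCP library.

```python
DORA = ["DHCPDISCOVER", "DHCPOFFER", "DHCPREQUEST", "DHCPACK"]

SENDER = {
    "DHCPDISCOVER": "client",
    "DHCPOFFER": "server",
    "DHCPREQUEST": "client",
    "DHCPACK": "server",
}


def lease_exchange(renewal=False):
    """Return (sender, message) pairs for a new lease or a renewal."""
    steps = DORA[2:] if renewal else DORA  # a renewal repeats steps three and four
    return [(SENDER[message], message) for message in steps]


for sender, message in lease_exchange():
    print(f"{sender:6} -> {message}")

print("renewal:", lease_exchange(renewal=True))
```

The client sends the first message in both cases, which is why the process is described as client-initiated.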

Updating the IP address configuration

You may need to obtain a new IP address configuration with updated settings. This can be an important part of network troubleshooting.

Manually generate a new lease with nmcli

You can manually force the lease generation process by using the nmcli command. You must know the connection name and then down and up the card.

Manually force lease generation with dhclient

You can also use the dhclient command to generate a new DHCP lease manually. Here are the commands:

dhclient -r to release it

dhclient (no option) to lease a new one

dhclient -r eth0 for specific NIC

Note : use -v for verbose output

Remember, if the client’s IP address is 169.254.x.x, it could not lease an IP address from the DHCP server.
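A script can make the same check with Python's ipaddress module, which flags the 169.254.0.0/16 link-local block. The sample addresses are arbitrary.

```python
import ipaddress


def lease_probably_failed(address):
    """True when the address is a link-local (169.254.x.x) fallback."""
    return ipaddress.ip_address(address).is_link_local


for candidate in ("169.254.10.20", "192.168.2.60"):
    status = "no DHCP lease" if lease_probably_failed(candidate) else "looks leased or static"
    print(candidate, "->", status)
```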

Other DHCP considerations

There are many ways to customize DHCP to suit your needs. This article only covers the most common options. Two settings to keep in mind are lease times and dealing with routers.

Managing lease times

There is a good trick to be aware of. Use short lease durations on networks with many portable devices or virtual machines that come and go quickly from the network. These short leases will allow IP addresses to be recycled regularly. Use longer durations on unchanging networks (such as a subnet containing mostly desktop computers). In theory, the longer durations reduce network traffic by requiring fewer renewals, but on today’s networks, that traffic is inconsequential.
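A rough calculation shows how little renewal traffic is involved. The sketch assumes clients start renewing at half the lease time, the default T1 timer in RFC 2131, and that every renewal succeeds on the first attempt. The lease lengths are arbitrary examples.

```python
SECONDS_PER_DAY = 86400


def renewals_per_day(lease_seconds, t1_fraction=0.5):
    """Renewal exchanges per client per day if each one succeeds at T1."""
    return SECONDS_PER_DAY / (lease_seconds * t1_fraction)


for label, lease in (("1 hour", 3600), ("1 day", 86400), ("8 days", 8 * 86400)):
    print(f"{label:7} lease: {renewals_per_day(lease):g} renewals per client per day")
```

Even a one-hour lease costs each client only 48 short request/acknowledge exchanges a day under these assumptions.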

Routers and DHCP

There is one other aspect of DHCP design to consider. The DORA process covered above occurs entirely by broadcast. Routers, as a general rule, are configured to stop broadcasts. That’s just part of what they do. There are three approaches you can take to managing this problem:

- Place a DHCP server on each subnet (no routers between the DHCP server and its clients).

- Place a DHCP relay agent on each subnet that sends DHCP lease generation traffic via unicast to the DHCP server on a different subnet.

- Use RFC 1542-compliant routers, which can be configured to recognize and pass DHCP broadcast traffic.

DHCP is a simple service but an absolutely critical one. Understanding the lease generation process helps with network troubleshooting. Proper planning and tracking are essential to ensuring you don’t permit duplicate IP address problems to enter your network environment.

Damon Garn owns Cogspinner Coaction, LLC, a technical writing, editing, and IT project company based in Colorado Springs, CO. Damon authored many CompTIA Official Instructor and Student Guides (Linux+, Cloud+, Cloud Essentials+, Server+) and developed a broad library of interactive, scored labs. He regularly contributes to Enable Sysadmin, SearchNetworking, and CompTIA article repositories. Damon has 20 years of experience as a technical trainer covering Linux, Windows Server, and security content. He is a former sysadmin for US Figure Skating. He lives in Colorado Springs with his family and is a writer, musician, and amateur genealogist.

How-To Geek

How to Set Static IP Addresses on Your Router

Routers both modern and antiquated allow users to set static IP addresses for devices on the network, but what's the practical use of static IP addresses for a home user? Read on as we explore when you should, and shouldn't, assign a static IP.

Dear How-To Geek, After reading over your five things to do with a new router article , I was poking around in the control panel of my router. One of the things I found among all the settings is a table to set static IP addresses. I'm pretty sure that section is self explanatory in as much as I get that it allows you to give a computer a permanent IP address, but I don't really understand why? I've never used that section before and everything on my home network seems to work fine. Should I be using it? It's obviously there for some reason, even if I'm not sure what that reason is! Sincerely, IP Curious

To help you understand the application of static IP addresses, let's start with the setup you (and most readers for that matter) have. The vast majority of modern computer networks, including the little network in your home controlled by your router, use DHCP (Dynamic Host Configuration Protocol). DHCP is a protocol that automatically assigns a new device an IP address from the pool of available IP addresses without any interaction from the user or a system administrator. Let's use an example to illustrate just how wonderful DHCP is and how easy it makes all of our lives.

Imagine that a friend visits with their iPad. They want to get on your network and update some apps on the iPad. Without DHCP, you would need to hop on a computer, log into your router's admin panel, and manually assign an available address to your friend's device, say 10.0.0.99. That address would be permanently assigned to your friend's iPad unless you went in later and manually released the address.

With DHCP, however, life is so much easier. Your friend visits, they want to jump on your network, so you give them the Wi-Fi password to log in and you're done. As soon as the iPad connects to the router, the router's DHCP server checks the list of available IP addresses and assigns an address with an expiration date built in. Your friend's iPad is given an address and connected to the network; when your friend leaves and is no longer using the network, that address returns to the pool of available addresses, ready to be assigned to another device.

All that happens behind the scenes and, assuming there isn't a critical error in the router's software, you'll never even need to pay attention to the DHCP process as it will be completely invisible to you. For most applications, like adding mobile devices to your network, general computer use, video game consoles, etc., this is a more than satisfactory arrangement and we should all be happy to have DHCP and not be burdened with the hassle of manually managing our IP assignment tables.

Although DHCP is really great and makes our lives easier, there are situations where using a manually assigned static IP address is quite handy. Let's look at a few situations where you would want to assign a static IP address in order to illustrate the benefits of doing so.

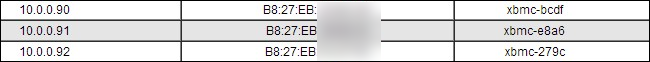

You need reliable name resolution on your network for computers that need to be consistently and accurately found. Although networking protocols have advanced over the years, and the majority of the time using a more abstract protocol like SMB (Server Message Block) to visit computers and shared folders on your network using the familiar //officecomputer/shared_music/ style address works just fine, for some applications it falls apart. For example, when setting up media syncing on XBMC it's necessary to use the IP address of your media source instead of the SMB name.

Any time you rely on a computer or a piece of software to accurately and immediately locate another computer on your network (as is the case with our XBMC example - the client devices need to find the media server hosting the material) with the least chance of error, assigning a static IP address is the way to go. Direct IP-based resolution remains the most stable and error free method of communicating on a network.

You want to impose a human-friendly numbering scheme onto your network devices. For network assignments like giving an address to your friend's iPad or your laptop, you probably don't care where in the available address block the IP comes from because you don't really need to know (or care). If you have devices on your network that you regularly access using command line tools or other IP-oriented applications, it can be really useful to assign permanent addresses to those devices in a scheme that is friendly to the human memory.

For example, if left to its own devices our router would assign any available address to our three Raspberry Pi XBMC units. Because we frequently tinker with those units and access them by their IP addresses, it made sense to permanently assign addresses to them that would be logical and easy to remember:

The .90 unit is in the basement, the .91 unit is on the first floor, and the .92 unit is on the second floor.

You have an application that expressly relies on IP addresses. Some applications will only allow you to supply an IP address to refer to other computers on the network. In such cases it would be extremely annoying to have to change the IP address in the application every time the IP address of the remote computer was changed in the DHCP table. Assigning a permanent address to the remote computer saves you the hassle of frequently updating your applications. This is why it's quite useful to assign a permanent address to any computer that functions as a server of any sort.

Before you just start assigning static IP addresses left and right, let's go over some basic network hygiene tips that will save you from a headache down the road.

First, check what IP pool is available on your router. Your router will have a total pool and a pool specifically reserved for DHCP assignments. The total pool available to home routers is typically 10.0.0.0 through 10.255.255.255 or 192.168.0.0 through 192.168.255.255. Then, within those ranges a smaller pool is reserved for the DHCP server, typically around 253 addresses in a range like 10.0.0.2 through 10.0.0.254. Once you know the general pool, you should use the following rules to assign static IP addresses:

- Never assign an address that ends in .0 or .255. On a typical home subnet (a /24), .0 is the network address and .255 is the broadcast address, so neither can be given to a device. This is the reason the example IP address pool above ends at .254.

- Never assign an address to the very start of the IP pool, e.g. 10.0.0.1, as the start address is conventionally reserved for the router. Even if you've changed the IP address of your router for security purposes, we'd still suggest against assigning that address to a computer.

- Never assign an address outside of the total available pool of private IP addresses. This means if your router's pool is 10.0.0.0 through 10.255.255.255 every IP you assign (keeping in mind the prior two rules) should fall within that range. Given that there are nearly 17 million addresses in that pool, we're sure you can find one you like.

Some people prefer to only use addresses outside of the DHCP range (e.g. they leave the 10.0.0.2 through 10.0.0.254 block completely untouched) but we don't feel strongly enough about that to consider it an outright rule. Given the improbability of a home user needing 253 device addresses simultaneously, it's perfectly fine to assign a device to one of those addresses if you'd prefer to keep everything in, say, the 10.0.0.x block.
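The rules above can be checked mechanically. The following is a minimal sketch in Python, assuming a /24 home subnet; the function name `check_static_candidate` and the sample addresses are illustrative and not taken from any router's software. It returns a list of objections for a proposed static address, and an empty list means none of the rules is violated.

```python
import ipaddress

def check_static_candidate(candidate, subnet, router, dhcp_first=None, dhcp_last=None):
    """Return a list of objections to a proposed static address (empty = fine)."""
    addr = ipaddress.ip_address(candidate)
    net = ipaddress.ip_network(subnet)
    warnings = []
    if not addr.is_private:
        warnings.append("not a private address")
    if addr not in net:
        warnings.append("outside the router's subnet")
    if addr == net.network_address:
        warnings.append("network address")
    if addr == net.broadcast_address:
        warnings.append("broadcast address")
    if addr == ipaddress.ip_address(router):
        warnings.append("already used by the router")
    # Optional check: being inside the DHCP range is allowed, but worth flagging.
    if dhcp_first and dhcp_last:
        first = ipaddress.ip_address(dhcp_first)
        last = ipaddress.ip_address(dhcp_last)
        if first <= addr <= last:
            warnings.append("inside the DHCP range")
    return warnings

if __name__ == "__main__":
    # Hypothetical values: a 10.0.0.0/24 network with the router at 10.0.0.1.
    for ip in ("10.0.0.90", "10.0.0.255", "10.0.0.1"):
        print(ip, check_static_candidate(ip, "10.0.0.0/24", "10.0.0.1"))
```

The DHCP range check is deliberately only a warning, in line with the view above that using an address inside the DHCP block is a matter of preference rather than a hard rule.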


Assigning a fixed IP address to a machine in a DHCP network

I want to assign a fixed private IP address to a server so that local computers can always access it.

Currently, the DHCP address of the server is something like 192.168.1.66.

Should I simply assign the server this same IP as fixed and configure the router so that it will exclude this IP from the ones available for DHCP? Or are there some ranges of IP that are traditionally reserved for static addresses?

My beginner's question doesn't relate to commands but to general principles and good practices.

Practical case (Edit 1 of 2)

Thank you for the many good answers, especially the very detailed one from Liam.

I could access the router's configuration.

When booting any computer, it obtains its IPv4 address in DHCP.

The IP and the MAC addresses that I can see with the ipconfig /all command in Windows match those in the list of connected devices that the router displays, so that I can confirm who is who.

The list of connected devices is something like

Things that I don't understand:

- Although all IP addresses are obtained via DHCP, the router displays them as if they were static addresses.

- The router's setting "Enable DHCP on LAN" is set to "Off" but the IP addresses are obtained via DHCP.

- IP addresses attributed to the computers are outside of the very narrow DHCP range of 192.168.1.33 to 192.168.1.35.

On any Windows computer connected via DHCP, ipconfig /all shows something like:

I'm missing something, but what?

Practical case (Edit 2 of 2)

Solution found.

For details, see my answer to Michal's comment at the bottom of this message.

I must admit that the way the router displays things keeps some parts a mystery. The router seems to be using DHCP by default, but remembers the devices that were connected to it (probably using their MAC address). It could be the reason why it lists the IPs as static although they're dynamic. There was also a Cisco router at 192.168.1.4 which appeared for some business communications service, but I had no credentials to access it.

- There's no standard governing DHCP reservation ranges, but it would be kinda nice. – LawrenceC Commented Apr 5, 2018 at 2:43

- Some routers allow you to define an IP for a chosen mac-address. Use that and DHCP will keep that address for your server. You could also set a DHCP range to e.g. 192.168.0.128 - 192.168.0.254 in a 192.168.0.1/255.255.255.0 network and set all static addresses on the "static" servers from within 192.168.0.2 - 192.168.0.127 range. – Michal B. Commented Apr 5, 2018 at 7:29

- @Michal B.: I agree and did it meanwhile.: 1. Obtain the server's mac address. 2. Observe which IPs the router assigns to computers (eg. 192.168.0.50 to 192.168.1.70 ) 3. Start the server in DHCP. In the router panel, name it, basing on its mac address so that the router will remember it. 4. In the server switch IP from DHCP mode to manual and assign an IP that is beyond the ones that the router would assign to other devices (eg. 192.168.1.100 ). You can use nmtui and then edit the config file where you can replace PREFIX=32 by NETMASK=255.255.255.0 . 6. Restart the network service. – OuzoPower Commented Apr 6, 2018 at 9:58

7 Answers

Determine the IP address that is assigned to your server and then go onto the DHCP server and set a DHCP reservation for that server.

- 1 Reservations are essentially self-documenting. ++ – mfinni Commented Apr 4, 2018 at 21:30

- 5 @mfinni ++ only works for programmers. -- for your comment :P – Canadian Luke Commented Apr 4, 2018 at 23:59

- ..and yes he should also use a fixed IP, and label it. Document it. Maybe even reserve a range for this. In an enterprise using internal VPN it is common for these IP's to be hard coded in HOSTS files and SSH config files so it is a big deal when they suddenly change. – mckenzm Commented Apr 5, 2018 at 1:30

DHCP services differ across many possible implementations, and there are no ranges of IP that are traditionally reserved for static addresses; it depends what is configured in your environment. I'll assume we're looking at a typical home / SOHO setup since you mention your router is providing the DHCP service.

Should I simply assign the server this same IP as fixed and configure the router so that it will exclude this IP from the ones available for DHCP?

I would say that is not best practice. Many consumer routers will not have the ability to exclude a single address from within the DHCP range of addresses for lease (known as a 'pool'). In addition, because DHCP is not aware that you have "fixed" the IP address at the server you run the risk of a conflict. You would normally either:

- set a reservation in DHCP configuration so that the server device is always allocated the same address by the DHCP service, or

- set the server device with a static address that is outside the pool of addresses allocated by the DHCP service.

To expand on these options:

Reservation in DHCP

If your router allows reservations, then the first, DHCP reservation option effectively achieves what you have planned. Note the significant difference: address assignment is still managed by the DHCP service, not "fixed" on the server. The server still requests a DHCP address, it just gets the same one every time.
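To make the difference concrete, here is a toy model of how a DHCP service might choose an address when reservations exist. It is a hedged sketch only: the class name `TinyDhcp`, the MAC addresses and the pool boundaries are invented for illustration, and real servers also handle lease times, renewals and conflict detection.

```python
import ipaddress

class TinyDhcp:
    """Toy lease selection: reserved clients always get their reserved address."""

    def __init__(self, first, last, reservations=None):
        start = int(ipaddress.ip_address(first))
        end = int(ipaddress.ip_address(last))
        self.pool = [ipaddress.ip_address(n) for n in range(start, end + 1)]
        # Map MAC address (lower case) -> reserved IP address.
        self.reservations = {
            mac.lower(): ipaddress.ip_address(ip)
            for mac, ip in (reservations or {}).items()
        }
        self.leases = {}

    def request(self, mac):
        mac = mac.lower()
        if mac in self.reservations:
            # The client still asks via DHCP; it just gets the same answer every time.
            self.leases[mac] = self.reservations[mac]
        elif mac not in self.leases:
            taken = set(self.reservations.values()) | set(self.leases.values())
            free = [ip for ip in self.pool if ip not in taken]
            if not free:
                raise RuntimeError("pool exhausted")
            self.leases[mac] = free[0]
        return str(self.leases[mac])

if __name__ == "__main__":
    server = TinyDhcp("192.168.1.32", "192.168.1.40",
                      {"AA:BB:CC:00:00:01": "192.168.1.36"})
    print(server.request("aa:bb:cc:00:00:01"))  # the reserved client
    print(server.request("aa:bb:cc:00:00:02"))  # an ordinary client
```

Note that reserved addresses are withheld from ordinary clients, which is what prevents the conflict that a hand-configured "fixed" address inside the pool could cause.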

Static IP address

If you prefer to set a static address, you should check your router's (default) configuration to determine the block of addresses used for DHCP leases. You will normally be able to see the configuration as a first address and last address, or first address and a maximum number of clients. Once you know this, you can pick a static address for your server.

An example would be: the router is set to allow a maximum of 128 DHCP clients with a first DHCP IP address of 192.168.1.32. Therefore a device could be assigned any address from 192.168.1.32 up to and including 192.168.1.159. Your router will use a static address outside this range (generally the first or last address .1 or .254) and you can now pick any other available address for your server.
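The arithmetic in that example can be reproduced with Python's standard `ipaddress` module. This is a small sketch; the helper names `dhcp_range` and `outside_dhcp_range` are made up for illustration.

```python
import ipaddress

def dhcp_range(first_address, max_clients):
    """Return (first, last) of a pool given its first address and client count."""
    first = ipaddress.ip_address(first_address)
    last = first + (max_clients - 1)
    return first, last

def outside_dhcp_range(candidate, first_address, max_clients):
    """True if the candidate address is not one the DHCP service can lease."""
    first, last = dhcp_range(first_address, max_clients)
    return not (first <= ipaddress.ip_address(candidate) <= last)

if __name__ == "__main__":
    first, last = dhcp_range("192.168.1.32", 128)
    print(first, "to", last)
    print(outside_dhcp_range("192.168.1.200", "192.168.1.32", 128))
```

With a first address of 192.168.1.32 and 128 clients, the last leasable address is 192.168.1.159, so a static address such as 192.168.1.200 (a hypothetical choice) stays clear of the pool.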

It depends on the configuration of your DHCP service. Check the settings available to you for DHCP then either reserve an address in DHCP or pick a static address that is not used by DHCP - don't cross the streams.

- 1 Double++ on this. – ivanivan Commented Apr 5, 2018 at 3:26

- 1 Thank you Liam for your very detailed and useful answer. After accessing the router's configuration, other issues arose that I added in the original message. – OuzoPower Commented Apr 5, 2018 at 9:45

- @OuzoPower I'm new to responding here so don't have enough rep to comment on the question. Your update shows your router is not providing the DHCP service. The setting is off on the router, and your Windows ipconfig output shows the DHCP service is provided from a device at 192.168.1.5 . Do you have Pi-Hole or another similar device providing DHCP? That's where you'll find your DHCP configuration. NB: This also explains why the router shows the addresses as static and why DHCP assigned addresses are outside the range configured on the router. – Liam Commented Apr 6, 2018 at 9:52

- @Liam: No Pi-Hole or similar thing as far as I know. Solution found: I could not set DHCP ranges in the router, but I could register the MAC address of the server in the router and then give the server a fixed IP address that is far beyond the range that the router is naturally assigning to existing devices. Thanks to the registration of the server's MAC address, the router keeps it in memory and shows the server as missing when it is off. For details, see my answer to Michal B. in the original post. This solution seems to be working like a charm. – OuzoPower Commented Apr 6, 2018 at 10:11

- @OuzoPower That approach may work in the short term but how do you know that the address you have picked is outside the DHCP range? Many DHCP systems pick addresses at random from the available pool. At some point you will need to know what your DHCP configuration actually is, rather than estimating by observation (!) otherwise you will experience some conflict. Your question asked about best practice. Here, best practice would be to know what system is handling DHCP for your LAN. I would start by visiting 192.168.1.5 or https://192.168.1.5/ for clues. – Liam Commented Apr 6, 2018 at 10:48

It's not a bad habit to divide your subnet into a DHCP pool range and static ranges. Of course you can do what JohnA wrote and use a reservation for your server, but the first case is IMHO clearer, because you are not cluttering your DHCP server with unused extra settings (they could confuse other admins who are not aware that the server is static). If using a DHCP pool plus a static pool, just don't forget to add your static server to DNS (create an A/AAAA record for it).

- I would like to add that the downside of DHCP reservations for servers is that if your DHCP environment is not sufficiently fault tolerant, a DHCP server outage could cause all manner of problems. Monitor the DHCP closely and set leases that are long enough to be able to respond to problems even after a long weekend. – JohnA Commented Apr 5, 2018 at 2:06

I prefer to set my network devices, servers, printers, etc. that require a static IP address out of range of the DHCP pool. For example, xx.xx.xx.0 to xx.xx.xx.99 would be set aside for fixed IP assignments and xx.xx.xx.100 to xx.xx.xx.250 would be set as the DHCP pool.

- I like this approach as well. This way I can still access the servers even if the DHCP server takes the morning off or decides to start handing out invalid leases! – ErikF Commented Apr 5, 2018 at 1:24

- Using isc-dhcp-server this is required (this is what my pi does, along with DNS caching, a fake domain for my LAN, and some traffic shaping for some wireless stuff). Unfortunately, I've seen browser based router config pages (both factory and replacement) that either require a reserved address to be in the dynamic pool... or out of it. – ivanivan Commented Apr 5, 2018 at 3:30

In addition to the other answers I want to concentrate on the fact that your router configuration does not seem to fit the IP address configuration on your server.

Please have a look at the output of ipconfig /all:

IPv4 Address ........ 192.168.1.xx (Preferred)

Default Gateway ........ 192.168.1.1 (= IP of the router)

DHCP server ............ 192.168.1.5

The clients in the network don't get the IP address from the router, but from a different DHCP server in the network (192.168.1.5 instead of 192.168.1.1). You have to find this server and check its configuration instead of the router's DHCP server config, which is seemingly only used for Wireless.
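The same comparison can be automated. Below is a rough Python sketch that pulls the two fields out of `ipconfig /all`-style text and reports whether they match. It assumes English-language output and IPv4 addresses on the same line as the label; the function name `dhcp_server_vs_gateway` and the sample text are illustrative only.

```python
import re

IPV4 = r"(\d{1,3}(?:\.\d{1,3}){3})"

def dhcp_server_vs_gateway(ipconfig_text):
    """Return (gateway, dhcp_server, same) parsed from ipconfig-style text."""
    def field(label):
        # Skip the dot leaders and the colon between the label and the value.
        match = re.search(label + r"[ .:]*" + IPV4, ipconfig_text, re.IGNORECASE)
        return match.group(1) if match else None

    gateway = field("Default Gateway")
    dhcp = field("DHCP Server")
    return gateway, dhcp, gateway is not None and gateway == dhcp

if __name__ == "__main__":
    # Hypothetical excerpt shaped like Windows output.
    sample = """
       IPv4 Address. . . . . . . . . . . : 192.168.1.66(Preferred)
       Default Gateway . . . . . . . . . : 192.168.1.1
       DHCP Server . . . . . . . . . . . : 192.168.1.5
    """
    print(dhcp_server_vs_gateway(sample))
```

If the third value is `False`, the leases are coming from some device other than the gateway, which is the situation described in this answer.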

My router ( OpenWRT ) allows for static DHCP leases.

Static leases are used to assign fixed IP addresses and symbolic hostnames to DHCP clients.

So, you supply the MAC address of the server and its desired IP address as a "static lease", and DHCP will always allocate the same IP. The client machine (the server in this case) requires no configuration changes and still picks up its IP address (the configured address) from DHCP.

Note that you can't assign a fixed IP address in 192.168 so that clients can "always access it" unless you also give each client a fixed IP address and subnet. If the clients use DHCP, they get whatever subnet the DHCP server gives them, and if they use automatic addressing, they won't be in a 192.168 subnet.

Once you realise that the system can't be easily perfected, you can see that your best options depend on what you are trying to do. Upnp is a common way of making devices visible. DNS is a common way of making devices visible. WINS is a common way of making devices visible. DHCP is a common way of making devices visible.

All of my printers have reservations: my printers aren't critical infrastructure, I want to be able to manage them, many of the clients use UPNP or mDNS for discovery anyway.

My gateway and DNS servers have fixed IP address in a reserved range: My DHCP server provides gateway and DNS addresses, and my DHCP server does not have the capacity to do dynamic discovery or DNS lookup.

None of my streaming devices have fixed or reserved IP values at all: if the network is so broken that DHCP and DNS aren't working, there is no way that the clients will be able to connect to fixed IP addresses anyway.

- This literally makes no sense. Are you asserting that you can’t mix static and dynamic in a /16? – Gaius Commented Apr 5, 2018 at 12:59

- I have asserted that if you use static, you haven't guaranteed that clients can "always access it". Not at all. I've just asserted that I've mixed static and dynamic in my setup. – user165568 Commented Apr 6, 2018 at 9:46

- @Gaius I have asserted that if you use static, you haven't guaranteed that clients can "always access it". I'm sorry that doesn't make sense to you: it's one of the primary reasons the world moved away from static. I've also asserted that I've mixed static and dynamic in my setup: see: "none of my streaming devices have fixed or reserved" and "DNS servers have fixed IP": the DNS servers are indeed in the same subnet as the clients. – user165568 Commented Apr 6, 2018 at 9:52

- Sorry, but I must admit to not understanding most of your answer. As far as I know, DNS are domain name servers and are useful when you want to name servers, like when assigning domain names to web sites. As I don't need domain names, DNS appears useless to me. Accessing the server is not an issue without DNS. See my answer to Michal B. in the original post for the solution that I found. – OuzoPower Commented Apr 6, 2018 at 10:18


DHCP vs Static IP: Which One Is Better?

Nowadays, most networking devices such as routers or network switches use the Internet Protocol (IP) as the standard to communicate over the network. In IP, each device on a network has a unique identifier called an IP address. The easiest method of achieving this was configuring a fixed IP address or static IP address. Since there are limitations to static IP, some administrators seek to use dynamic IP instead. DHCP (Dynamic Host Configuration Protocol) is a protocol for assigning dynamic IP addresses to devices that are connected to the network. So DHCP vs static IP, what's the difference?

What Is a Static IP Address?

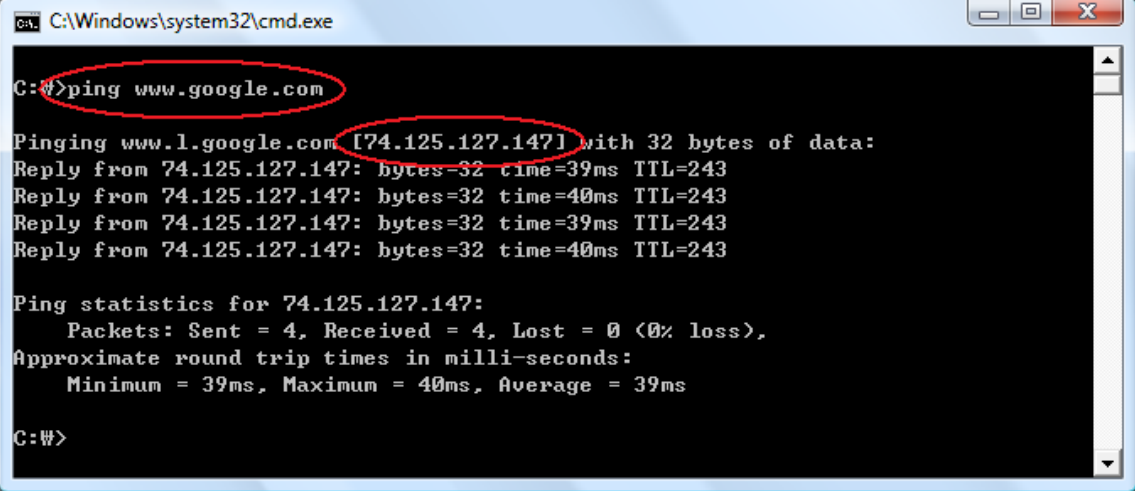

A static IP address is an address that is permanently assigned to a network device, either by your ISP or by the network administrator, and does not change even if the device reboots. Static addresses exist in both IP versions, IPv4 and IPv6. A static IP address is usually assigned to a server that hosts websites or provides email, VPN and FTP services. In static IP addressing, each device on the network has its own address with no overlap and you'll have to configure the static IP addresses manually. When new devices are connected to a network, you would have to select the "manual" configuration option and input the IP address, the subnet mask, the default gateway and the DNS server.

A typical example of using a static IP address is a web server. In Windows, go to START -> RUN -> type "cmd" -> OK. Then type "ping www.google.com" in the Command Prompt window, and the output will show the address that the name resolves to. The four-byte number 74.125.127.147 is the current IP for www.google.com. If it is a static IP, you would be able to connect to Google at any time by entering this address in the web browser.

What Is DHCP?

The opposite of a static IP address is a dynamic IP address. The static vs dynamic IP topic is hotly debated among many IT technicians. A dynamic IP address is an address that can change over time. To hand out dynamic IP addresses, the network must have a DHCP server configured and operating. The DHCP server assigns a vacant IP address to each device connected to the network. DHCP is a way of dynamically and automatically assigning IP addresses to network devices on a physical network. It provides an automated way to distribute and update IP addresses and other configuration information over a network. To know how DHCP works, read this article: DHCP and DNS: What Are They, What’s Their Difference?

Proper IP addressing is essential for establishing communications among devices on a network. Then DHCP vs static IP, which one is better? This part will discuss it.

Static IP addresses allow network devices to retain the same IP address all the time. A network administrator must keep track of each statically assigned device to avoid using that IP address again. Since a static IP address requires manual configuration, it can create network issues if you use it without a good understanding of TCP/IP.
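The bookkeeping burden mentioned here is easy to picture with a small example. The following Python sketch keeps a record of static assignments and refuses to hand the same address to a second device; the class name `StaticRegistry` and the device names are hypothetical, and in practice a spreadsheet or an IP address management tool plays this role.

```python
import ipaddress

class StaticRegistry:
    """Minimal record of which device owns which statically assigned address."""

    def __init__(self):
        self._by_ip = {}

    def assign(self, ip, device):
        key = ipaddress.ip_address(ip)  # also validates the address
        owner = self._by_ip.get(key)
        if owner is not None and owner != device:
            raise ValueError(f"{key} is already assigned to {owner}")
        self._by_ip[key] = device

    def owner(self, ip):
        return self._by_ip.get(ipaddress.ip_address(ip))

if __name__ == "__main__":
    registry = StaticRegistry()
    registry.assign("192.168.1.10", "file-server")
    try:
        registry.assign("192.168.1.10", "printer")
    except ValueError as error:
        print(error)
```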

DHCP, by contrast, is a protocol for automating the task of assigning IP addresses. DHCP is advantageous for network administrators because it removes the repetitive task of assigning IP addresses to each device on the network. It might only take a minute but when you are configuring hundreds of network devices, it really gets annoying. Wireless access points also utilize DHCP so that administrators would not need to configure their devices by themselves. For wireless access points, PoE network switches, which support dynamic binding by users' definition, are commonly used to allocate IP addresses for each device that is connected together. Besides, what makes DHCP appealing is that it is cheaper than static IP addresses with less maintenance required. You can easily find their advantages and disadvantages in the following table.

| IP address | Advantages | Disadvantages |
|---|---|---|
| DHCP | DHCP does not need any manual configuration to connect to local devices or gain access to the Web. | Since DHCP is a "hands-off" technology, there is a danger that someone may implant an unauthorized DHCP server, making it possible to invade the network for illegal purposes or result in random access to the network without explicit permission. |
| Static IP | The address does not change over time unless it is changed manually - good for web servers, and email servers. | It's more expensive than a dynamic IP address because ISP often charges an additional fee for static IP addresses. Also, it requires additional security and manual configuration, which adds complexity when large numbers of devices are connected. |

After comparing DHCP vs static IP, it is clear that DHCP is the more popular option for most users as it is easier and cheaper to deploy. Having a static IP and guessing which IP address is available is really bothersome and time-consuming, especially for those who are not familiar with the process. However, static IP is still in demand and useful if you host a website from home, have a file server in your network, use networked printers, or if you use a remote access program. Because a static IP address never changes, other devices always know exactly how to contact a device that uses one.


blackMORE Ops Learn one trick a day ….

Setup DHCP or static IP address from command line in Linux

March 26, 2015 Command Line Interface (CLI), How to, Linux, Linux Administration, Networking 25 Comments

This guide shows you how to set up a DHCP or static IP address from the command line in Linux. It saved me when I was in trouble; hopefully you will find it useful as well. In case you’ve only got wireless, you can use this guide to connect to a WiFi network from the command line in Linux.

Note that my network interface is eth0 for this whole guide. Change eth0 to match your network interface.

Static assignment of IP addresses is typically used to eliminate the network traffic associated with DHCP/DNS and to lock an element in the address space to provide a consistent IP target.

Step 1 : STOP and START Networking service

Some people would argue restart would work, but I prefer STOP-START to do a complete rehash. Also if it’s not working already, why bother?

Step 2 : STOP and START Network-Manager

If you have some other network manager (e.g. wicd), then stop and start that one instead.

Just for kicks, the following is what restart would do:

Step 3 : Bring up network Interface

Now that we’ve restarted both networking and network-manager services, we can bring our interface eth0 up. For some it will already be up and useless at this point. But we are going to fix that in next few steps.

The next command shows the status of the interface. As you can see, it doesn’t have any IP address assigned to it now.

Step 4 : Setting up IP address – DHCP or Static?

Now we have two options. We can set up a DHCP or static IP address from the command line in Linux. If you decide to use a DHCP address, ensure your router is capable of serving DHCP. If you think DHCP was the problem all along, then go for static.

Again, if you’re using a static IP address, you might want to investigate what range is supported in the network you are connecting to (e.g. some networks use 10.0.0.0/8, some use 172.16.0.0/12, etc.). For some readers, this might be a trial-and-error method, but it always works.

Step 4.1 – Setup DHCP from command Line in Linux

Assuming that you’ve already completed step 1,2 and 3, you can just use this simple command

The first command updates /etc/network/interfaces file with eth0 interface to use DHCP.

The next command brings up the interface.
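As a rough illustration of the file edit described in this step, the Python sketch below renders a DHCP stanza in the Debian-style `/etc/network/interfaces` format and appends it to a file only if it is not already there. The helper names `dhcp_stanza` and `ensure_stanza` are invented for this example, and the demo writes to a temporary file rather than the real configuration, which would require root.

```python
import tempfile
from pathlib import Path

def dhcp_stanza(interface="eth0"):
    """Render an ifupdown stanza that configures the interface via DHCP."""
    return f"auto {interface}\niface {interface} inet dhcp\n"

def ensure_stanza(path, stanza):
    """Append the stanza unless the file already contains it. Returns True if written."""
    file = Path(path)
    current = file.read_text() if file.exists() else ""
    if stanza in current:
        return False
    # Make sure the stanza starts on its own line.
    separator = "" if (not current or current.endswith("\n")) else "\n"
    file.write_text(current + separator + stanza)
    return True

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        target = Path(folder) / "interfaces"  # stand-in for /etc/network/interfaces
        ensure_stanza(target, dhcp_stanza("eth0"))
        print(target.read_text())
```

The idempotent check matters here because blindly appending the same line on every run would leave duplicate stanzas in the file.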

With DHCP, you get an IP address, subnet mask, broadcast address, gateway IP and DNS IP addresses. Go to step xxx to test your internet connection.

Step 4.2 – Setup static IP, subnet mask, broadcast address in Linux

Use the following command to set up the IP address, subnet mask and broadcast address in Linux. Note that I’ve highlighted the IP addresses in red. You will be able to find these details from another device connected to the network or directly from the router or gateway's status page (e.g. some networks use 10.0.0.0/8, some use 172.16.0.0/12, etc.).

Next command shows the IP address and details that we’ve set manually.

Because we are doing everything manually, we also need to setup the Gateway address for the interface. Use the following command to add default Gateway route to eth0 .

We can confirm it using the following command:
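To see how the address, netmask, broadcast address and gateway in Step 4.2 fit together, here is a hedged Python sketch that derives the netmask and broadcast address from an address in CIDR notation and prints net-tools style `ifconfig` and `route` command lines. The function name `static_commands` and the addresses are illustrative assumptions; the sketch only builds the command strings and does not run them.

```python
import ipaddress

def static_commands(interface, cidr, gateway):
    """Build ifconfig/route command strings for a static IPv4 setup."""
    iface = ipaddress.ip_interface(cidr)
    network = iface.network
    gw = ipaddress.ip_address(gateway)
    if gw not in network:
        raise ValueError(f"gateway {gw} is not inside {network}")
    return [
        f"ifconfig {interface} {iface.ip} netmask {network.netmask} "
        f"broadcast {network.broadcast_address}",
        f"route add default gw {gw} {interface}",
    ]

if __name__ == "__main__":
    # Hypothetical values for a 192.168.1.0/24 network.
    for command in static_commands("eth0", "192.168.1.5/24", "192.168.1.1"):
        print(command)
```

Deriving the broadcast address instead of typing it by hand avoids one common mistake, and the gateway check catches a default route that the interface could never reach.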

Step 4.3 – Alternative way of setting Static IP in a DHCP network

If you’re connected to a network where you have DHCP enabled but want to assign a static IP to your interface, you can use the following command to assign Static IP in a DHCP network, netmask and Gateway.

At this point if your network interface is not up already, you can bring it up.
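For the file-based approach in Step 4.3, a static stanza in the Debian-style `/etc/network/interfaces` format could look like the output of the sketch below. This is an assumption about the intended format rather than a copy of the guide's command; the function name `static_stanza` and the addresses are illustrative.

```python
import ipaddress

def static_stanza(interface, cidr, gateway):
    """Render an ifupdown stanza with a static address, netmask and gateway."""
    iface = ipaddress.ip_interface(cidr)
    gw = ipaddress.ip_address(gateway)  # validates the gateway address
    lines = [
        f"auto {interface}",
        f"iface {interface} inet static",
        f"    address {iface.ip}",
        f"    netmask {iface.network.netmask}",
        f"    gateway {gw}",
    ]
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    print(static_stanza("eth0", "192.168.1.5/24", "192.168.1.1"))
```

Unlike addresses set with `ifconfig` alone, settings stored in this file are read again when the interface is brought up after a reboot.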

Step 4.4 – Fix missing default Gateway

Looks good to me so far. We’re almost there.

Try to ping google.com (cause if www.google.com is down, Internet is broken!):

Step 5 : Setting up nameserver / DNS

For most users step 4.4 would be the last step. But in case you get a DNS error and want to assign DNS servers manually, use the following command:

This will add Google Public DNS servers to your resolv.conf file. Now you should be able to ping or browse to any website.
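A small sketch of that edit in Python is shown below. It adds `nameserver` lines to a resolv.conf-style file without duplicating entries that are already present. The function name `add_nameservers` is made up for illustration, and the demo uses a temporary file; on a real system the file is `/etc/resolv.conf`, needs root to edit, and may be regenerated by tools such as resolvconf or a network manager.

```python
import tempfile
from pathlib import Path

def add_nameservers(path, servers):
    """Append missing 'nameserver' lines to the file. Returns the servers added."""
    file = Path(path)
    lines = file.read_text().splitlines() if file.exists() else []
    added = []
    for server in servers:
        entry = f"nameserver {server}"
        if entry not in lines:
            lines.append(entry)
            added.append(server)
    file.write_text("\n".join(lines) + "\n")
    return added

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        target = Path(folder) / "resolv.conf"  # stand-in for /etc/resolv.conf
        add_nameservers(target, ["8.8.8.8", "8.8.4.4"])  # Google Public DNS
        print(target.read_text())
```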

Losing internet connection these days is just painful because we are so dependent on Internet to find usable information. It gets frustrating when you suddenly lose your GUI and/or your Network Manager and all you got is either an Ethernet port or Wireless card to connect to the internet. But then again you need to memorize all these steps.

I’ve tried to make this guide as generic as I can, but if you have a suggestion or if I’ve made a mistake, feel free to comment. Thanks for reading. Please share & RT.


25 comments

Just wanted to say, your guides are amazing and should be included into kali’s desktop help manual. Thanks for your awesome work!

Hi Matt, That’s very kind, thank you. I’m happy that my little contributions are helping others. Cheers, -BMO

I’ve gone through the steps listed in Step 4.2 and when I check my settings are correct, until I reboot. After I reboot all my settings have reverted back to the original settings. Any ideas?

The only problem with this is that nowadays Linux machines aren’t always shipped with the tools you use. They are now shipped with the systemd virus so the whole init.d doesn’t work anymore and ifconfig isn’t shipped on a large number of distros.

Hi, The intention was to show what to do when things are broken badly. In my case, I’ve lost Network Manager and all of Gnome Desktop. I agree this is very old school but I’m sure it’s better than reinstalling. Not sure what distro you’re talking about. I use Debian based Kali (and Debian Wheezy), CentOS(5,6,7) and Ubuntu for work, personal and testing. ifconfig is present is every one of them. ifconfig also exists in all variants of server distro, even in all Big-IP F5’s or CheckPoint Firewalls. Hope that explains my inspiration for this article. Cheers, -BMO

Hi , I want to say Thank you for your Guide, it’s very useful. and want to add another method for Step 5 : Setting up nameserver / DNS: add nameserver directly to resolv.conf file

nano /etc/resolv.conf

    # Dynamic resolv.conf(5) file for glibc resolver(3) generated by resolvconf(8)
    # DO NOT EDIT THIS FILE BY HAND -- YOUR CHANGES WILL BE OVERWRITTEN
    nameserver 8.8.8.8
    nameserver 8.8.4.4
    search Home

nano or vi is not required; use printf instead of echo, e.g.:

printf "nameserver 8.8.8.8\nnameserver 8.8.4.4\n" >> /etc/resolv.conf

double-check with:

grep nameserver /etc/resolv.conf
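One thing to keep in mind with the append approach above is that running it twice leaves duplicate nameserver lines. A minimal sketch of an idempotent version follows, working on the file contents as a string; the function name `add_nameservers` and the sample text are assumptions made for illustration, not part of the original guide.

```python
def add_nameservers(resolv_text, servers):
    """Return resolv.conf text with any missing nameserver lines appended."""
    lines = resolv_text.splitlines()

    # Collect the nameserver addresses that are already configured.
    present = set()
    for line in lines:
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "nameserver":
            present.add(parts[1])

    # Append only the servers that are not listed yet.
    for server in servers:
        if server not in present:
            lines.append(f"nameserver {server}")
            present.add(server)

    return "\n".join(lines) + "\n"


# Hypothetical existing contents: 8.8.8.8 is already there, 8.8.4.4 is not.
existing = "nameserver 8.8.8.8\nsearch Home\n"
print(add_nameservers(existing, ["8.8.8.8", "8.8.4.4"]), end="")
```

The sketch only builds the new text. Writing it back to /etc/resolv.conf needs root, and on systems where resolvconf manages the file the change may still be overwritten, as the header in the file warns.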

Hey, I’m new to VMs. My eth0 inet addr is 10.0.2.15, but in every video I watch the inet addr always starts with 192. I was wondering what I can do to change my inet addr to start with 192. Is this guide a solution?

Hi Billy Bob, Is 10.x address coming from VBox internal or from your router? You possibly selected Bridged network. Try juggling between Bridged and NAT. Also look up VBox IP addressing in Google. Cheers -BMO

Please help, I’ve done all these steps and still I don’t have internet connection with bridged adapter. When I set NAT I have internet connection but with bridged adapter i don’t. I checked with ifconfig eth0 command and I have ip, netmask and broadcast ip. What could be the problem?

Excellent guide. I haven’t been using any debian based linux distros in a while and forgot where the entries go manually. I was actually kind of surprised how long it took to find your page in google, there is a lot of pages that don’t actually answer the question, but yours was spot on.

I did all the commands but my IP address doesn’t show up, and now my internet server on Linux iceweasel is down. It’s telling me that “Server not found” I really need help.

Hi Blackmoreops Thanks for the tutorial. I do have a question tho, in kalisana, I have followed your advice step by step to configure a static ip on my kali VM. But when I check with ifconfig, I still get the ip assigned by my modem? I run the kali vm on fedora 22 host… Is there a way around this? Regards Adexx

Hey Blackmoreops, Thanks for the great article. Being a total NOOB, I’m wondering if these are the last steps in getting my correct lab setting to enumerate De-Ice 1.100 with nmap. My current settings on the Kali 2016 machine are: addr: 192.168.1.5, mask: 255.255.255.0, default gw: 192.168.1.1. Both machines are set to NAT in VirtualBox 5. I’ve tried numerous scans, i.e. ping, list, protocol verify, and stealth, and I’m unable to find any open ports. Help!!!!!!!

Best Regards. C

i tried on my kali linux but i lost my internet connection

hello everyone i have got problem on my kali linux with internet. Kali is connected to my wifi but iceweasel can’t open any site. Can you help me solve this problem please ?

check mtu and DNS

Followed through all the steps, and it worked. Then I restarted the router, and everything is back to the earlier configuration?

Thanks for the tutorial, but how do I change an IP that is blocked by Google? :D

Hello, sorry, but I wasn’t able to configure my network. I installed Kali onto my HDD and I’m using it as my main OS on this PC (I don’t know if that’s recommended or not). I am currently connected to the internet with an Ethernet cable, and the top-right corner says that it is connected, but when I try to open Iceweasel I get a message that tells me “Server not found”. Can someone please tell me how to fix this issue? I also followed your tutorial until the end, but I had trouble there because I get this message: bash: /etc/resolv.conf: no such file or directory. If you can help me I would be so grateful. Sorry for butchering the English language and its grammar.

Sir, How can we change or spoof dns server in kali Linux.

I can’t get my static IP address to ping google.

This is what I am trying to do:

ping google.com using a server created with static IP address using Linux Redhat VM Ware,

please help!

For setting up DHCP using the Command : ifconfig eth0 inet dhcp Also works



DHCP Reservation vs Static IP address

So after browsing some websites, some people are telling me that static IP address is the best. But others say the DHCP Reservation is just as good if not the same.

So what is better? Or are they pretty much the same?

Well to help clarify some more. I reserved my PS3 and Wii U IP address in my router. Is that all right?

- 1 What websites have you been reading, and what points did they make? It's a ridiculous argument really. If you can assign via DHCP, do it. If you can't, you're stuck assigning a manual address on the device. – Brad Commented Sep 13, 2014 at 6:03

- One of the factors to take into account when evaluating articles written on this subject is that many learned to use static IPs because early consumer routers didn't have a mechanism for DHCP reservations. Of the 3 answers available now @Wes Sayeed says it the best... but I hate his first sentence. I absolutely agree with the 3rd paragraph tho... – Tyson Commented Sep 13, 2014 at 13:06

5 Answers

Using DHCP reservations offers you a sort of poor-man's IP address management solution. You can see and change IP addresses from a single console, and you can see which addresses are available without having to resort to an Excel spreadsheet (or worse, a ping and pray system).
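The "see which addresses are available" idea can be sketched in a few lines with Python's standard `ipaddress` module. This is only an illustration: the reservation table, the MAC addresses, and the scope boundaries are made-up values, and a real DHCP server would expose this through its own console or export.

```python
import ipaddress


def free_addresses(scope_start, scope_end, reservations):
    """Return the scope addresses that have no reservation, in ascending order."""
    start = ipaddress.IPv4Address(scope_start)
    end = ipaddress.IPv4Address(scope_end)
    reserved = {ipaddress.IPv4Address(ip) for ip in reservations.values()}

    free = []
    current = start
    while current <= end:
        if current not in reserved:
            free.append(str(current))
        current += 1  # IPv4Address supports integer addition
    return free


# Hypothetical reservation table: MAC address -> reserved IP.
reservations = {
    "00:11:22:33:44:55": "192.168.1.100",
    "00:11:22:33:44:66": "192.168.1.102",
}

print(free_addresses("192.168.1.100", "192.168.1.104", reservations))
# ['192.168.1.101', '192.168.1.103', '192.168.1.104']
```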

That being said, many applications require a static IP. If the server is configured to use DHCP, the application has no way of knowing that a reservation exists and may refuse to install. Also, some applications tie their license to an IP address, so that address must be static as well.

Personally I prefer to use reservations when I can, and statics when I have to. But when I do use a static, I make a reservation for that address anyway so that A) it can be within the scope with the rest of the servers, and B) it still provides the visual accounting of the address.

NOTE: If you're referring to network devices like IP cameras and printers, reservations are definitely the way to go because you can add a comment in the reservation as to what the device is and where it's located. Depending on the device, this may be your only means of documenting that information within the system.

- 1 Setting a reservation for a computer that has a static IP is also a good way of preventing IP address clashes. – Michael Frank Commented Sep 13, 2014 at 5:48

- 1 I am very curious to know what software you run that requires a fixed IP address and is unable to know what that address is if that IP is assigned via DHCP. I've also never seen any application permanently tying a license to a single IP address. – Brad Commented Sep 13, 2014 at 6:04

- 2 This is a great answer... the first line stinks tho, You start out by implying that it's the poor man's solution, but go on to sell the merits. – Tyson Commented Sep 13, 2014 at 13:08

- 3 To call this the poor-man's solution, you should explain what the more elegant rich-man's solution would be. (Sorry for the double comment, the edit button for the one above is already gone.) – Tyson Commented Sep 13, 2014 at 13:16

- 1 I'm not knocking it at all. I just meant "poor-man's solution" as in it's built-in and therefore free. There are IPAM solutions out there that cost money -- some lots of money -- and offer all kinds of features beyond your basic DHCP functions. – Wes Sayeed Commented Sep 13, 2014 at 18:56

As a printer tech, DHCP reservations are preferable to static IP assignments. You can manage them centrally as well as ensure that the device always has the current DNS and other network info.

However, DHCP reservations require you to have access to the router/DHCP server, which as an outside vendor isn't always possible. If you can't do DHCP reservations, use a static IP (being sure to manually enter subnet, DNS, etc.) but try to make it outside the DHCP scope if possible.
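Before picking a static address by hand, it can help to check that it sits inside the subnet but outside the DHCP scope. The following is a minimal sketch using the standard `ipaddress` module; the function name `check_static`, the subnet, and the pool range are assumptions for illustration, so substitute whatever the DHCP server actually hands out.

```python
import ipaddress


def check_static(ip, subnet, pool_start, pool_end):
    """Classify a candidate static address against a subnet and its DHCP pool."""
    addr = ipaddress.IPv4Address(ip)
    net = ipaddress.IPv4Network(subnet)

    if addr not in net:
        return "outside subnet"
    if addr in (net.network_address, net.broadcast_address):
        return "not a host address"
    if ipaddress.IPv4Address(pool_start) <= addr <= ipaddress.IPv4Address(pool_end):
        return "inside DHCP pool"
    return "ok"


# Assumed layout: 192.168.1.0/24 with a DHCP pool of .100 to .199.
for candidate in ("192.168.1.50", "192.168.1.150", "192.168.2.5"):
    print(candidate, "->", check_static(candidate, "192.168.1.0/24",
                                        "192.168.1.100", "192.168.1.199"))
```

An "ok" result only means the address does not collide with the pool; it does not tell you whether another device already uses it.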

I have never run into a situation where I NEEDED to use a static address, but there are cases where it was more profitable to use one, such as office laser jet printers (when you do, always block the IP address from DHCP).

In my opinion laptops, phones, and any "mobile" devices should be reserved not static. It requires no set up on the device and the server will reserve that address for that device.

When it comes to printers and, in certain cases, workstations (if you need to know the address... for remote desktop etc.), always go static, but remember to block the address from DHCP.

Remember, though, that if you need to re-configure your subnet mask for any reason, any and all static devices must be changed. Always think about future needs.
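To see why a mask change matters for static devices, here is a small sketch that lists which statically configured hosts would fall off the gateway's subnet under a new prefix length. The host list, gateway address, and function name `stranded_hosts` are hypothetical, and the sketch shows only one consequence: every static device still needs its mask updated by hand, even the ones that stay reachable.

```python
import ipaddress


def stranded_hosts(static_hosts, gateway, new_prefix):
    """Return static hosts that are not on the gateway's subnet under new_prefix."""
    new_net = ipaddress.ip_interface(f"{gateway}/{new_prefix}").network
    return [ip for ip in static_hosts if ipaddress.ip_address(ip) not in new_net]


# Hypothetical static devices on what used to be 192.168.1.0/24.
static_hosts = ["192.168.1.10", "192.168.1.110", "192.168.1.200"]

# Shrinking to a /25 leaves only .0 to .127 on the gateway's subnet.
print(stranded_hosts(static_hosts, "192.168.1.1", 25))
# ['192.168.1.200']
```

Devices with DHCP reservations pick up the new mask on their next lease, which is the point the answer above is making.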

I have several devices at home, that need fixed IP addresses and many others where I desire fixed IP addresses. Over the years I have discovered that the choice between static IP addresses or DHCP reservations depends on the nature of the application and convenience (how many and how often do you have to set them).

For devices whose configuration does not change often (NAS, desktops, VDI machines, print server, routers, switches) and where it takes little to no effort to change IP addresses, I prefer static addresses. For everything else (IP Cams, printers, thin clients, IoT devices), I use DHCP reservations. Setting a static IP on a computer is extremely easy; once set, I don't have to visit them for years. On the other hand, I may reset printers, IP cams, Raspberry Pi devices, UPS etc. several times. It is much easier to make DHCP reservations on the DHCP server for these devices, and expect to find the reset device at the same IP every time.

Regardless of how I set the IP, I always have a reservation on the DHCP server (for consistency sake) and I track them on a spreadsheet.

A manual IP allocation is always more worthwhile. As an administrator, it is very important to keep track of users' activities, and DHCP gives a new IP after every 8 days by default. In such cases you cannot maintain any record for your IP addresses. Also, if you want to grant different internet access rights to different departments, manual IP allocation is the best and most reliable option.

Static techniques take time, but it's always better to go for a static IP address if you have a big network.

- 5 But a DHCP reservation also ensures the same device will always get the same IP address, so besides the above answers (where a static IP is a MUST and there is no other option), you can still manage your devices' IP addresses using DHCP reservations (all done via a central console, without configuring every single client device). Or am I missing something? – Darius Commented Sep 13, 2014 at 13:09

- 4 I agree with @Darius... and sorry Stephen, but your answer shows that you don't understand the concept of DHCP reservations. – Tyson Commented Sep 13, 2014 at 13:24

- Well thanks Tyson! yes I think I went on another track. Hope to serve better next time. – Stephen Commented Sep 17, 2014 at 7:45
